And now comes the best part 🙂 As you have seen, installing with Easy Installer is not complicated; the upgrade requires more attention.

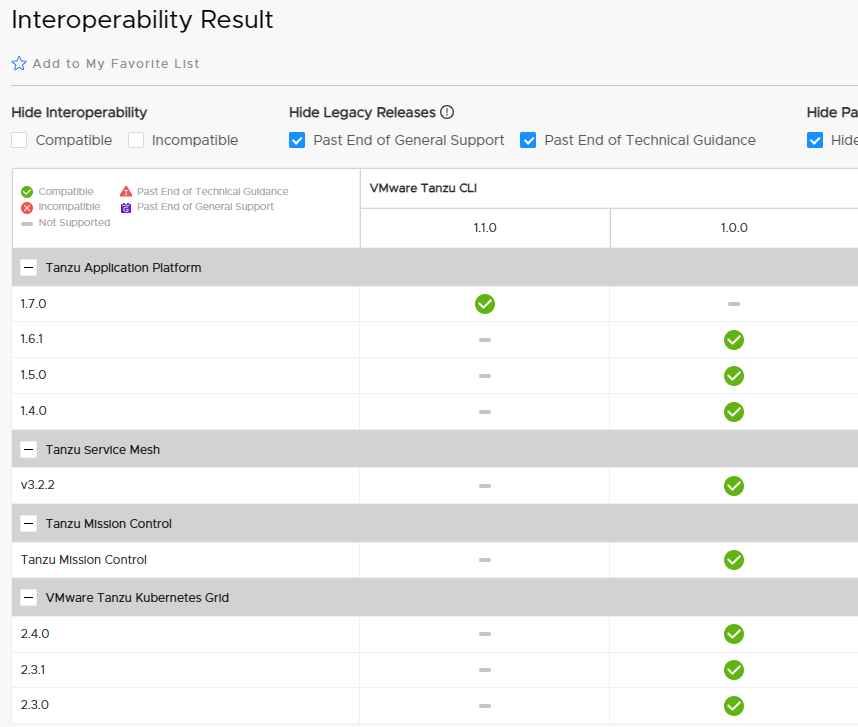

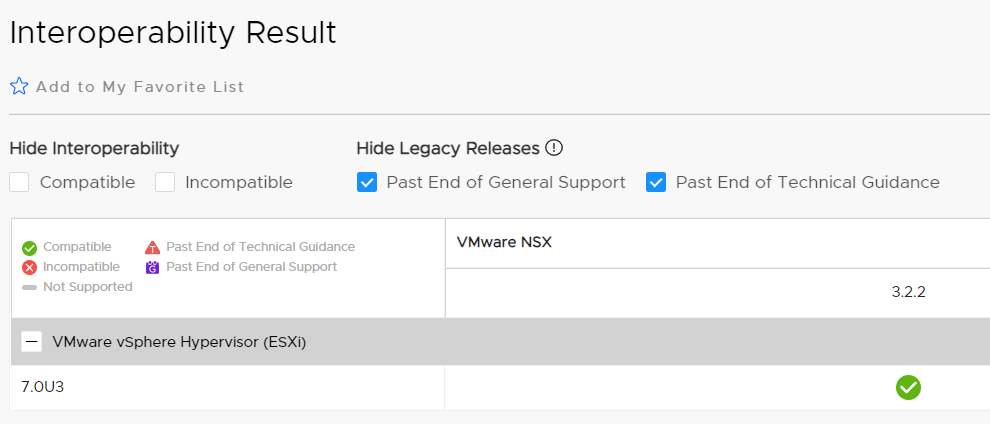

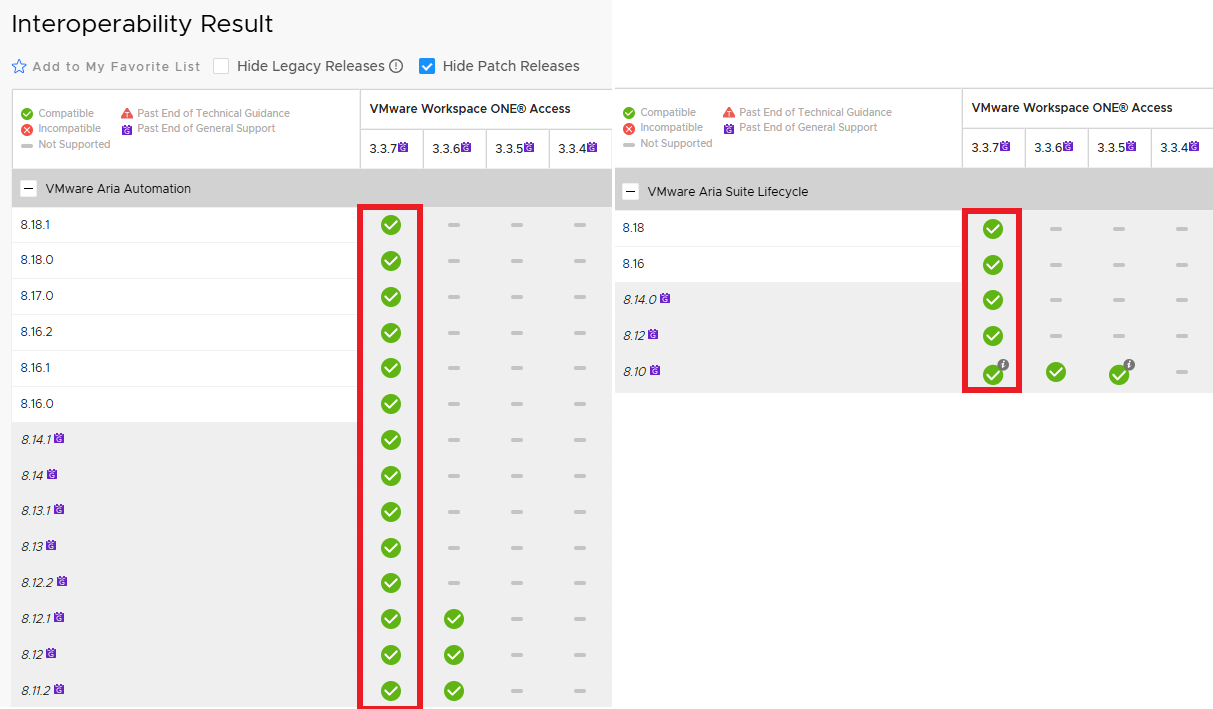

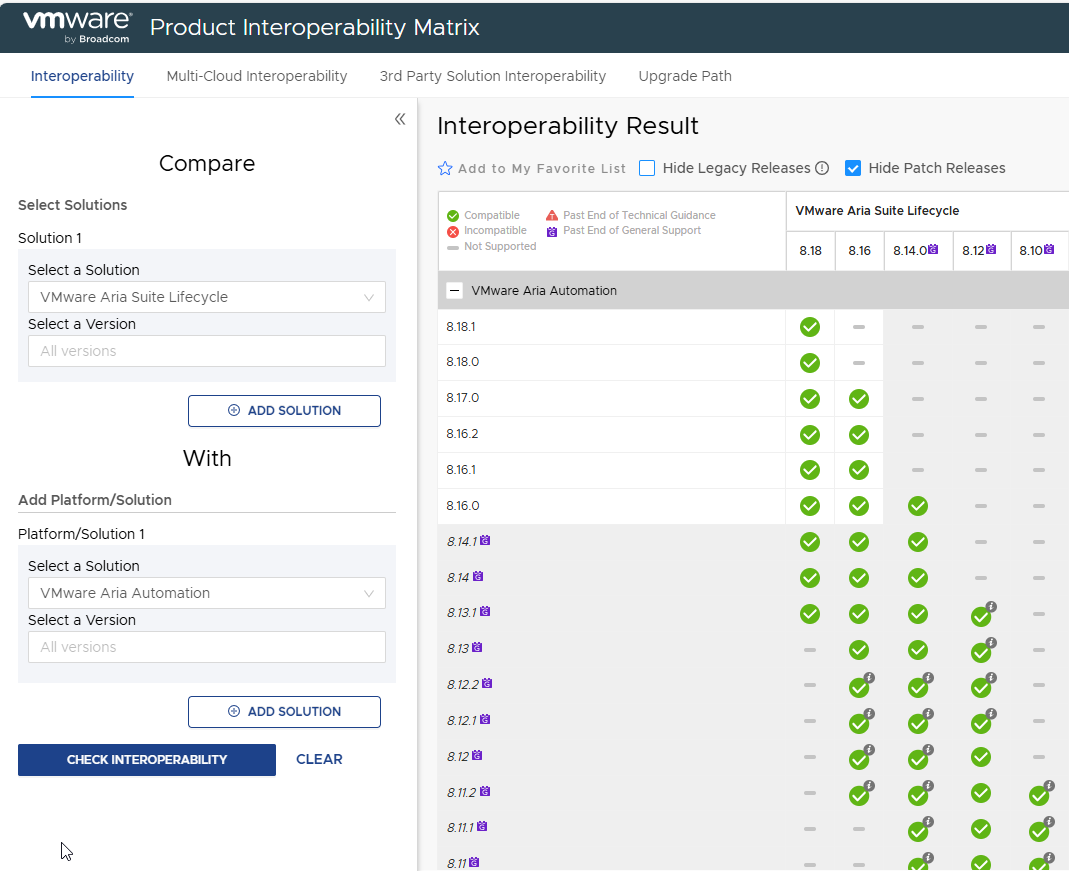

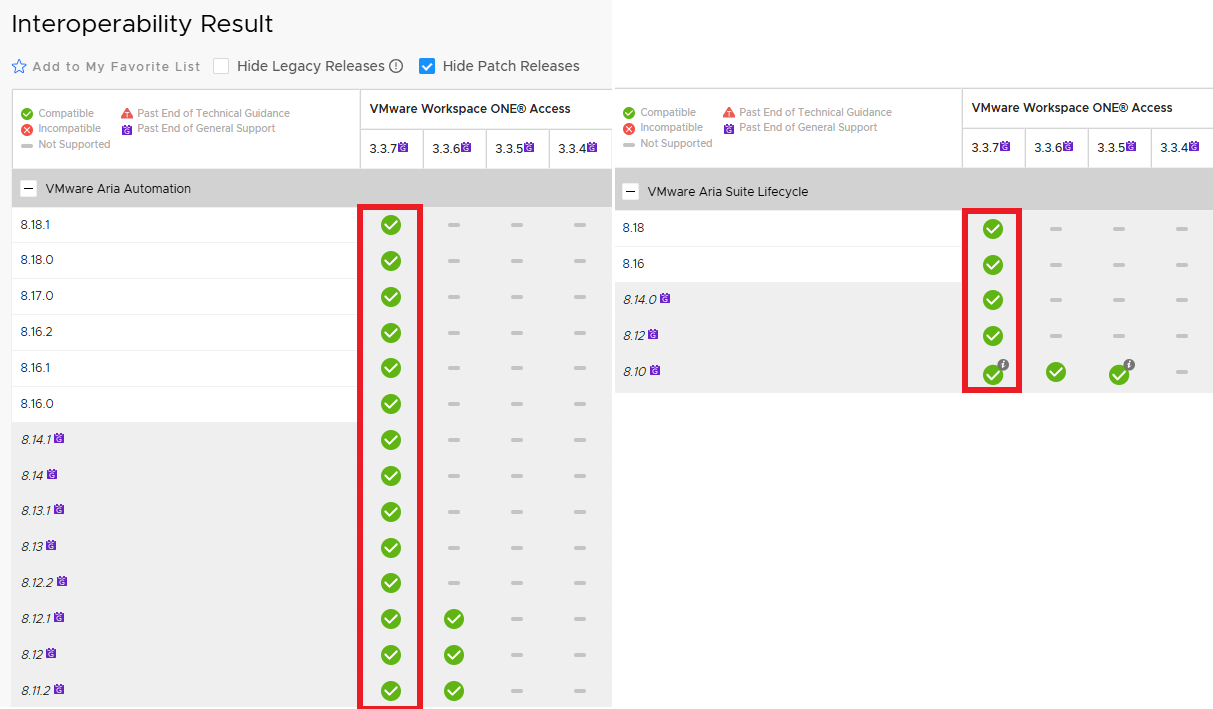

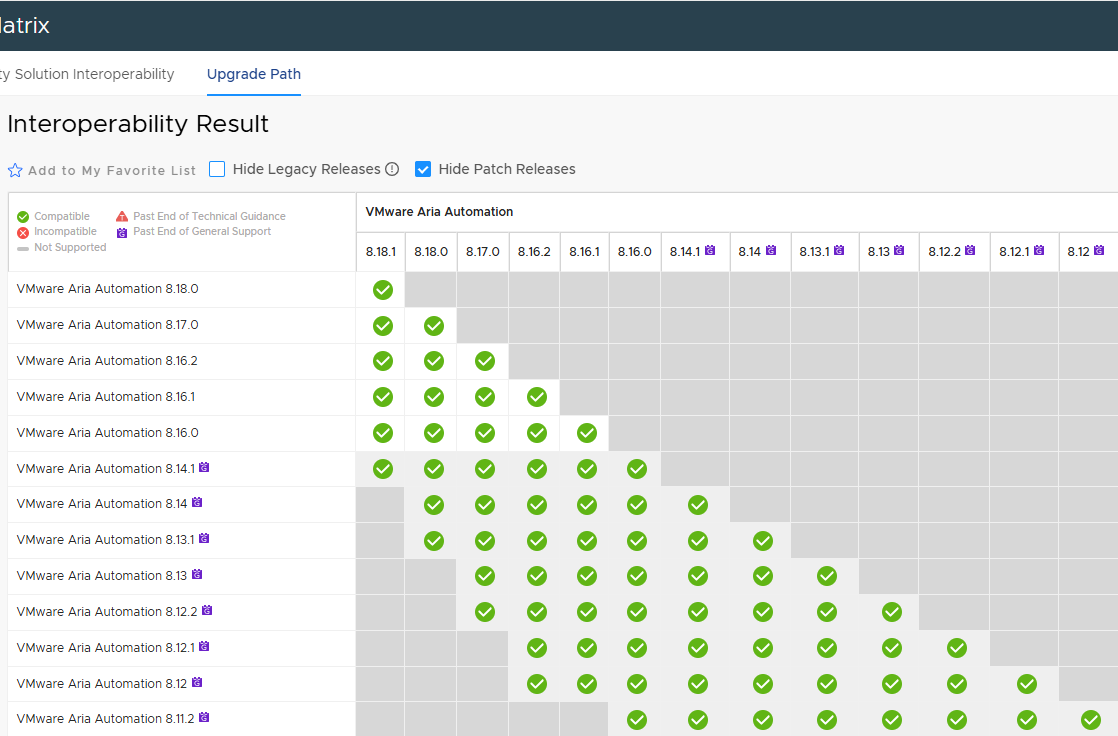

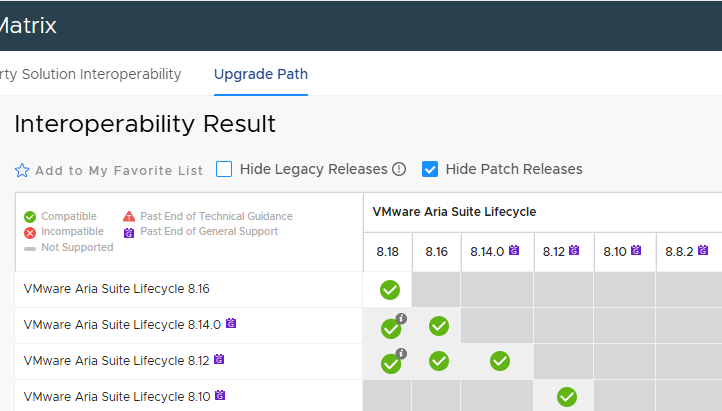

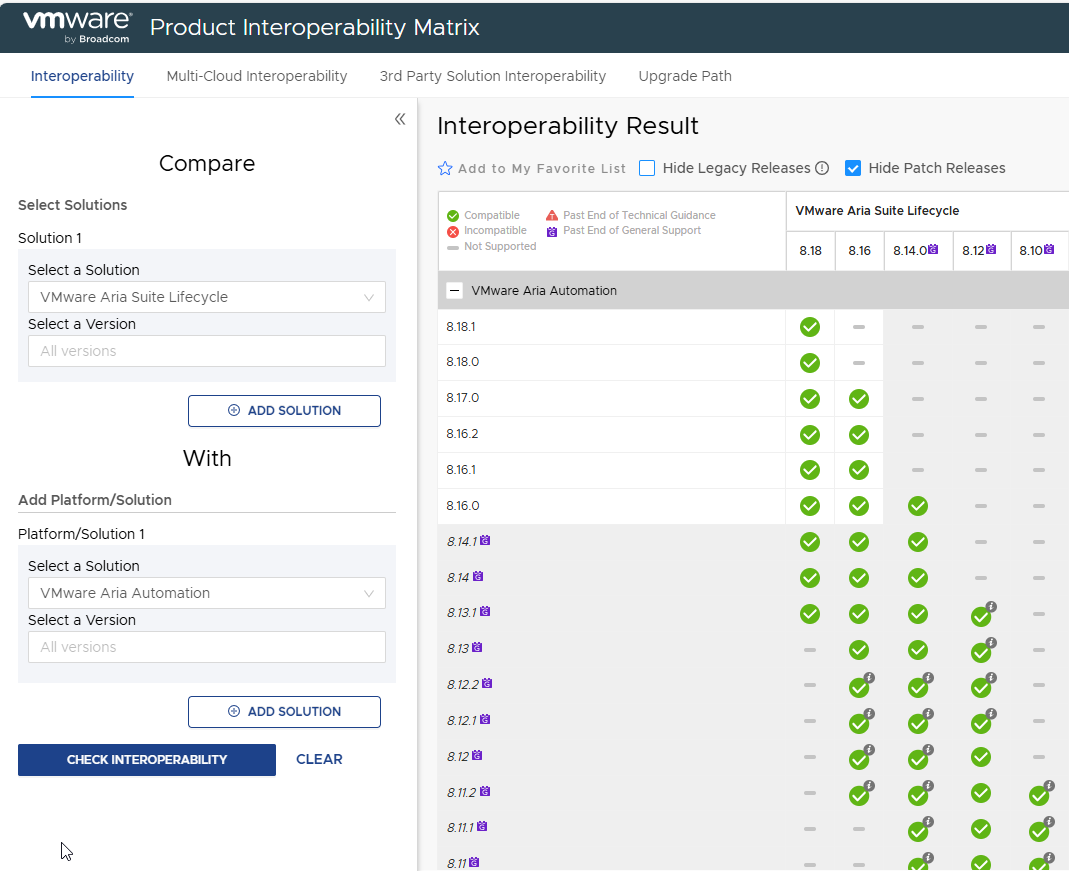

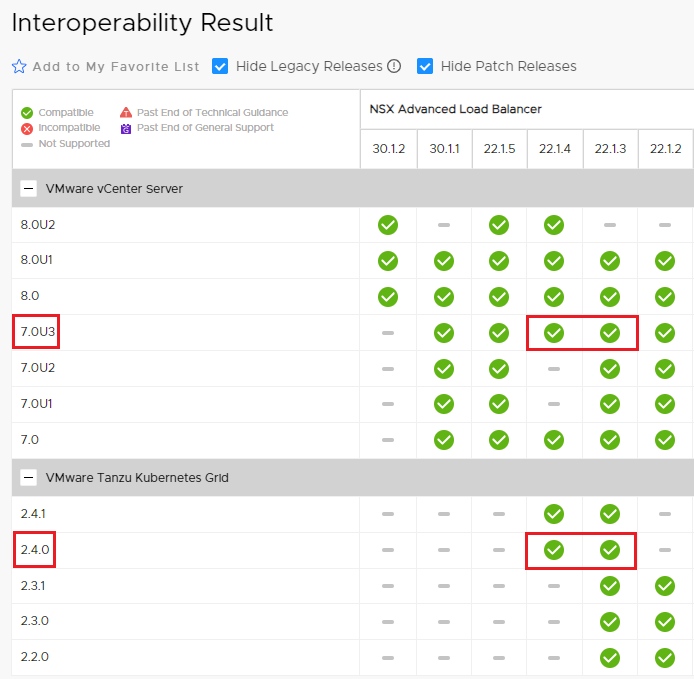

First, check the upgrade paths of the products involved and the related compatibility matrix. Throughout the upgrade process we must guarantee full interoperability between Lifecycle Manager, Workspace ONE and Aria Automation.

For Workspace ONE (IDM, for simplicity) it is simple: the current version is listed in the matrix as compatible with Lifecycle Manager and Automation.

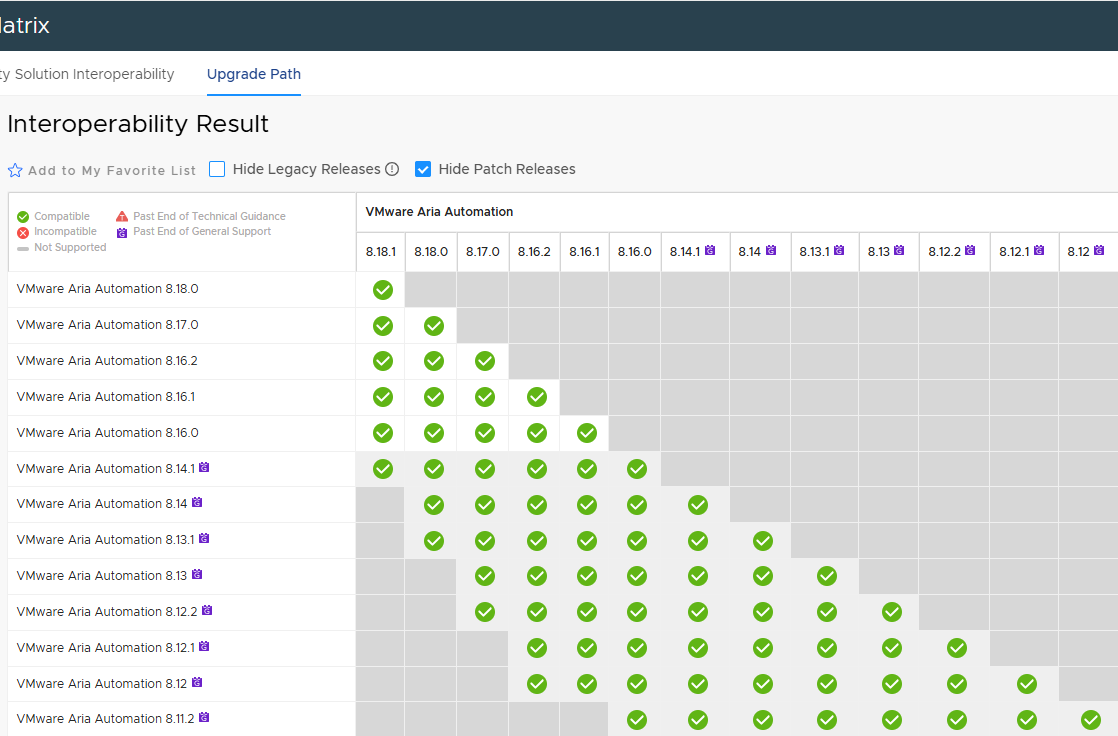

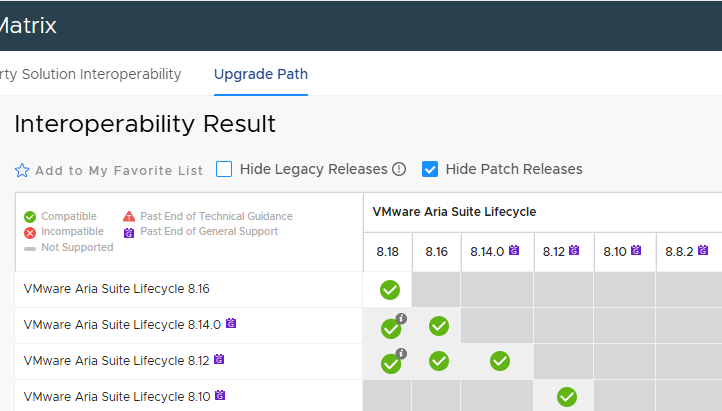

Things get a little more complicated with LCM and Automation.

Let’s remember that the various versions must be compatible with each other!

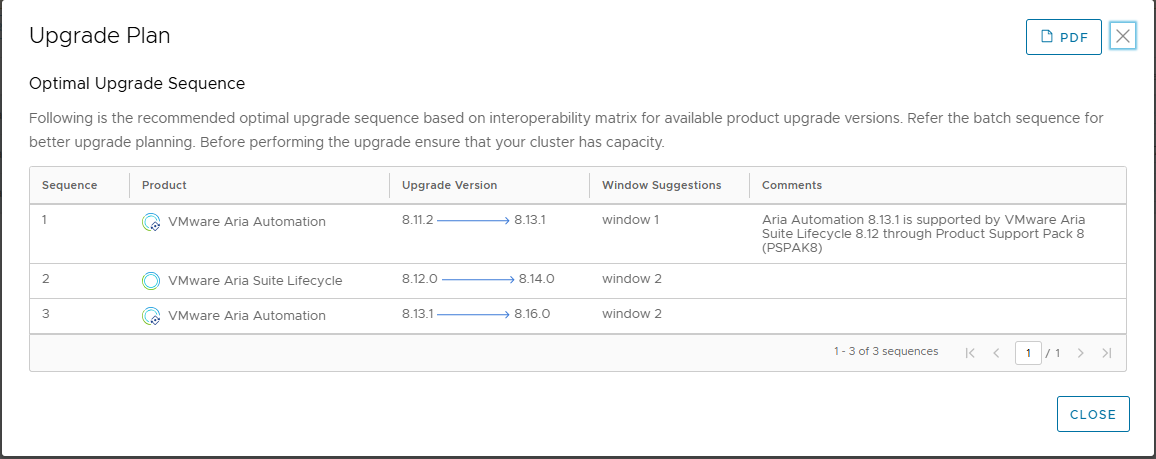

Cross-referencing everything, and checking the various steps in the lab, I obtained the following upgrade path

| Upgrade order | Product | Source Release | Destination Release |

| 1 | LCM | 8.10 | 8.12 |

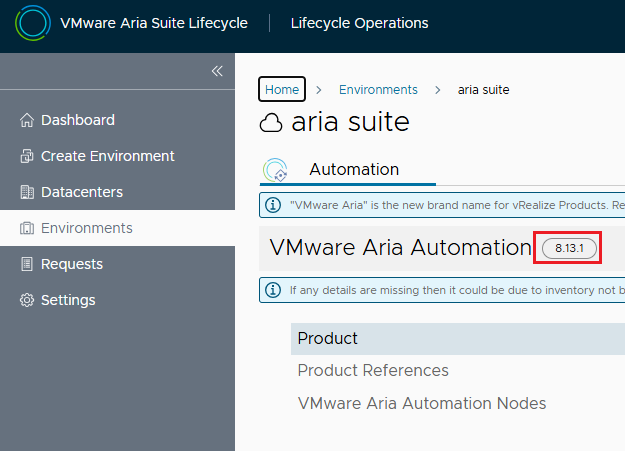

| 2 | Automation | 8.11.2 | 8.13.1 |

| 3 | LCM | 8.12 | 8.14 |

| 4 | Automation | 8.13.1 | 8.16 |

| 5 | LCM | 8.14 | 8.16 |

| 6 | LCM | 8.16 | 8.18 |

| 7 | Automation | 8.16 | 8.18 |

The resulting upgrade path is not simple, and in some steps I had to solve blocking problems. As always, it pays to keep the infrastructure updated regularly; having to make so many jumps in a short time is much riskier.
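With this many jumps it is easy to mistype a release, so here is a minimal Python sketch that records the upgrade path above and checks that each step starts from the release the same product reached in its previous step. The `Step` class and `check_continuity` function are names I made up for illustration. The check does not replace the interoperability matrix: it only verifies that the chain is internally consistent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Step:
    order: int
    product: str
    source: str
    destination: str


# Upgrade path copied from the table in this article.
UPGRADE_PATH = [
    Step(1, "LCM", "8.10", "8.12"),
    Step(2, "Automation", "8.11.2", "8.13.1"),
    Step(3, "LCM", "8.12", "8.14"),
    Step(4, "Automation", "8.13.1", "8.16"),
    Step(5, "LCM", "8.14", "8.16"),
    Step(6, "LCM", "8.16", "8.18"),
    Step(7, "Automation", "8.16", "8.18"),
]


def check_continuity(path):
    """Return a list of problems; an empty list means the chain is consistent."""
    problems = []
    current = {}  # product -> release reached so far
    for expected_order, step in enumerate(path, start=1):
        if step.order != expected_order:
            problems.append(f"step {step.order}: expected order {expected_order}")
        previous = current.get(step.product)
        if previous is not None and previous != step.source:
            problems.append(
                f"step {step.order}: {step.product} is at {previous}, "
                f"but the step starts from {step.source}"
            )
        current[step.product] = step.destination
    return problems


if __name__ == "__main__":
    for problem in check_continuity(UPGRADE_PATH):
        print(problem)
```

For the path in the table the function returns an empty list, meaning no gaps were found between consecutive steps of the same product.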

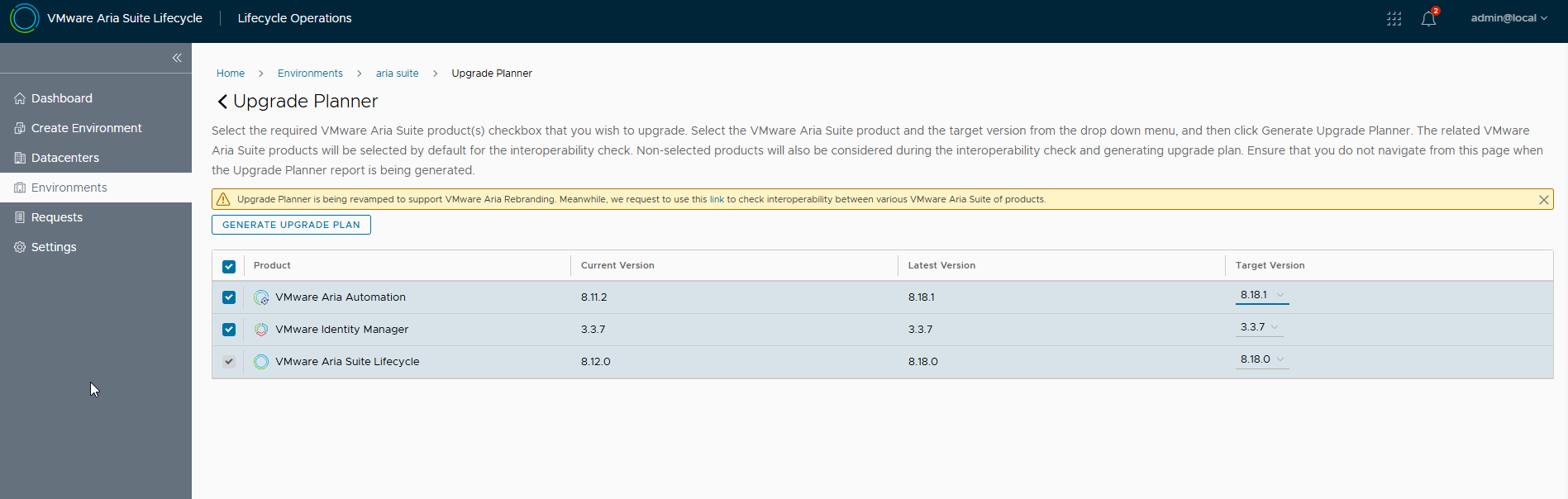

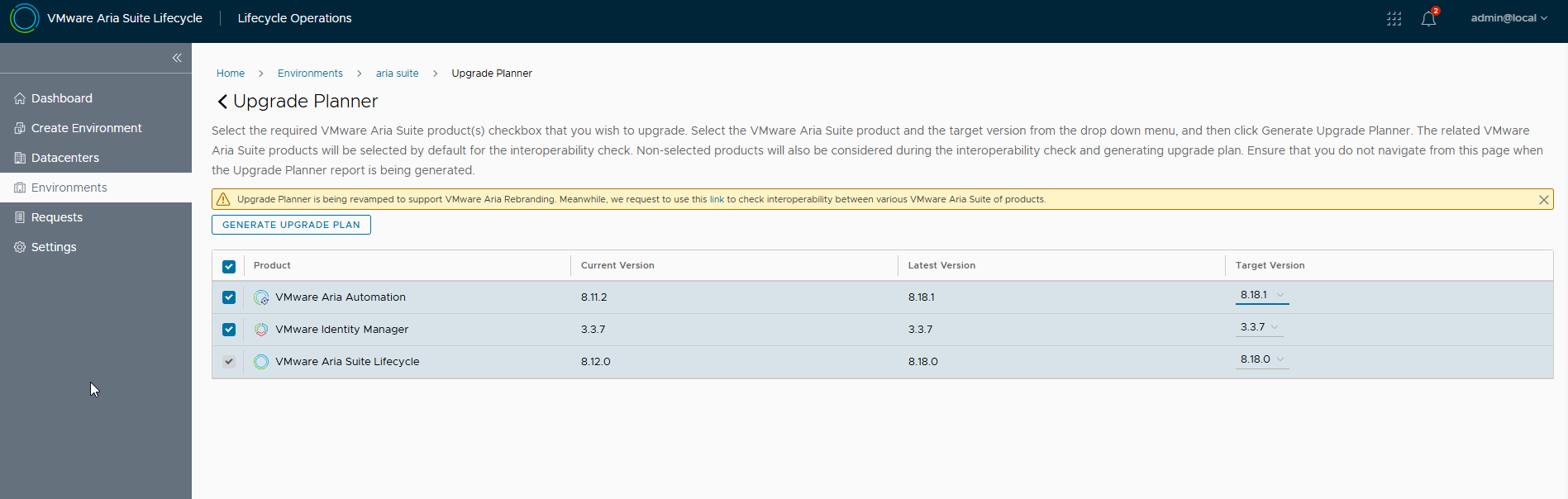

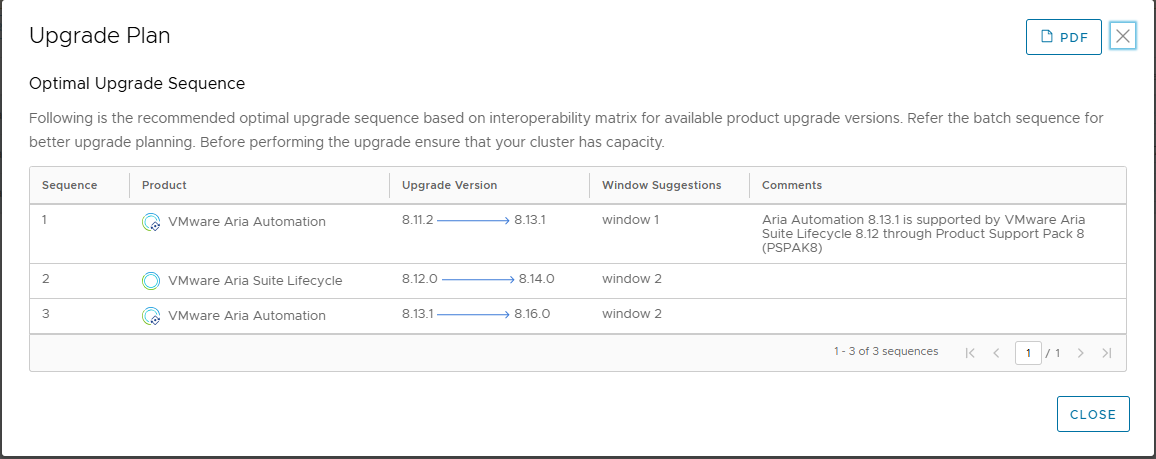

Lifecycle Manager provides the Upgrade Planner tool within the environment that hosts Aria Automation. Unfortunately, in version 8.10 (installed with Easy Installer) it gave me various errors and was not usable 🙁

Only after the upgrade to 8.12 did it start to work, but it stopped working correctly again after a few releases. My advice is to rely on the upgrade paths and the compatibility matrix on the site.

Still, let’s look at a screenshot of the tool when it works; it reflects the correct upgrade path 🙂

From the environment select UPGRADE PLANNER, specify the final release, select the product flags and run GENERATE UPGRADE PLAN.

Here is the result:

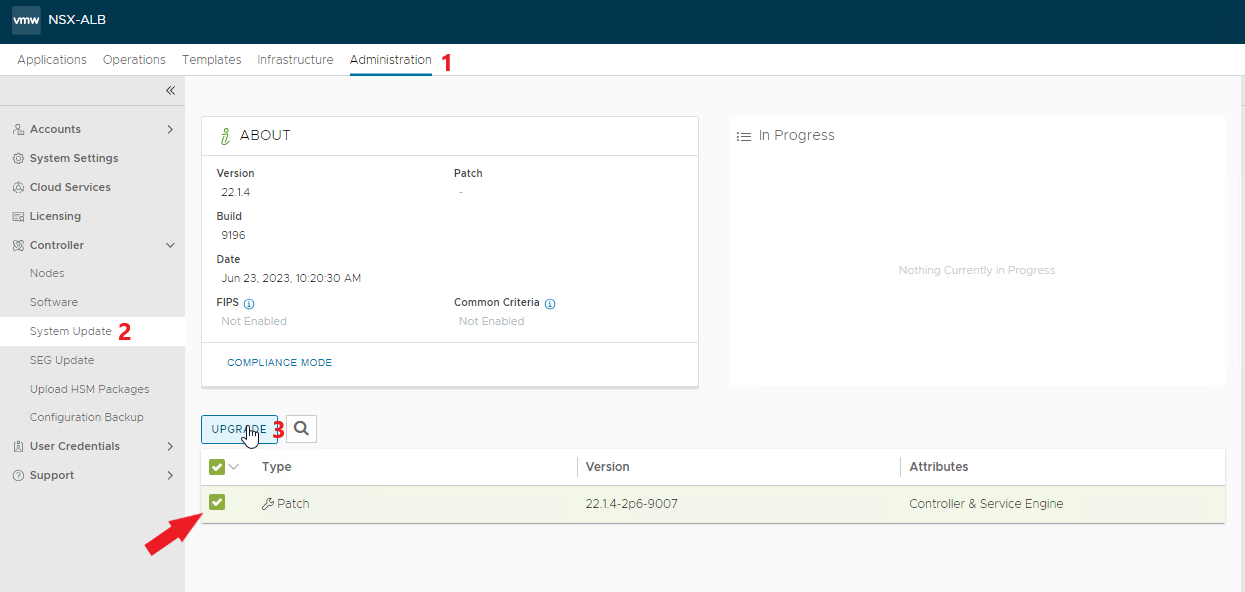

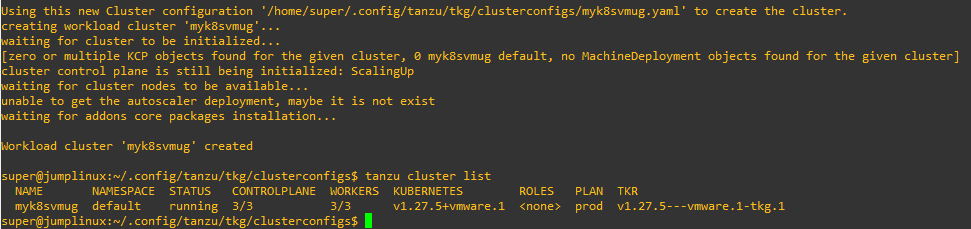

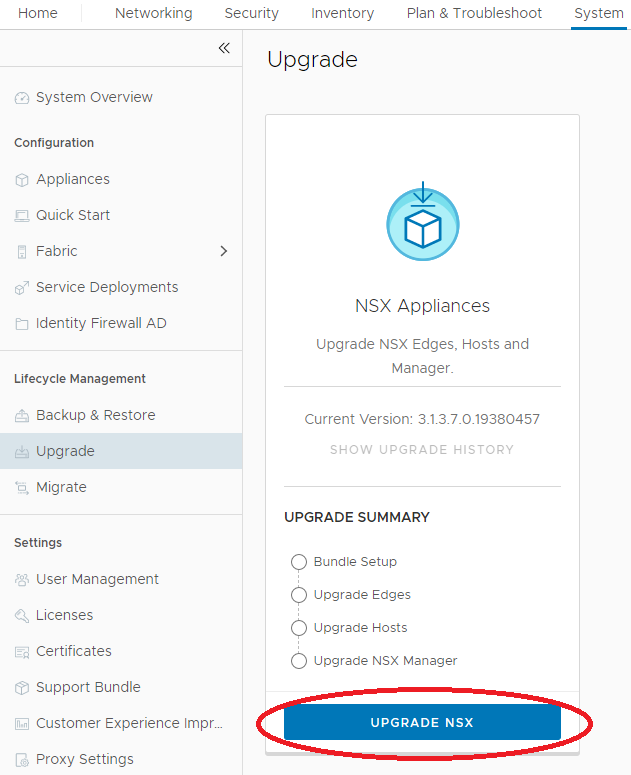

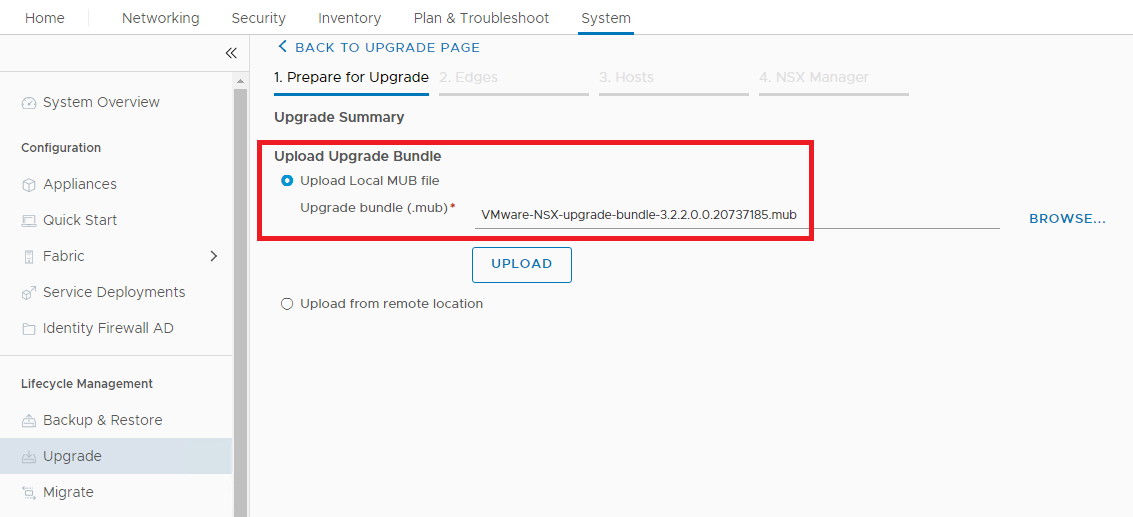

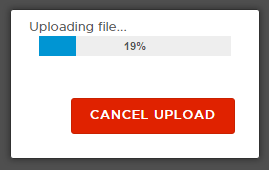

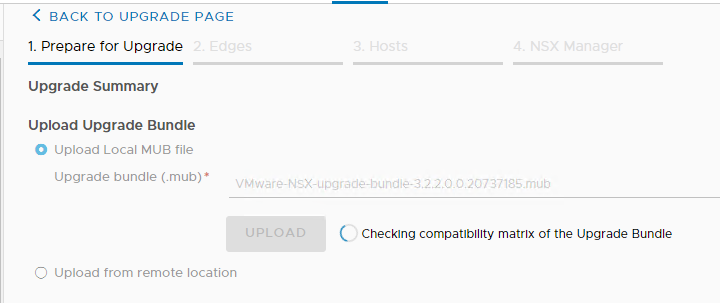

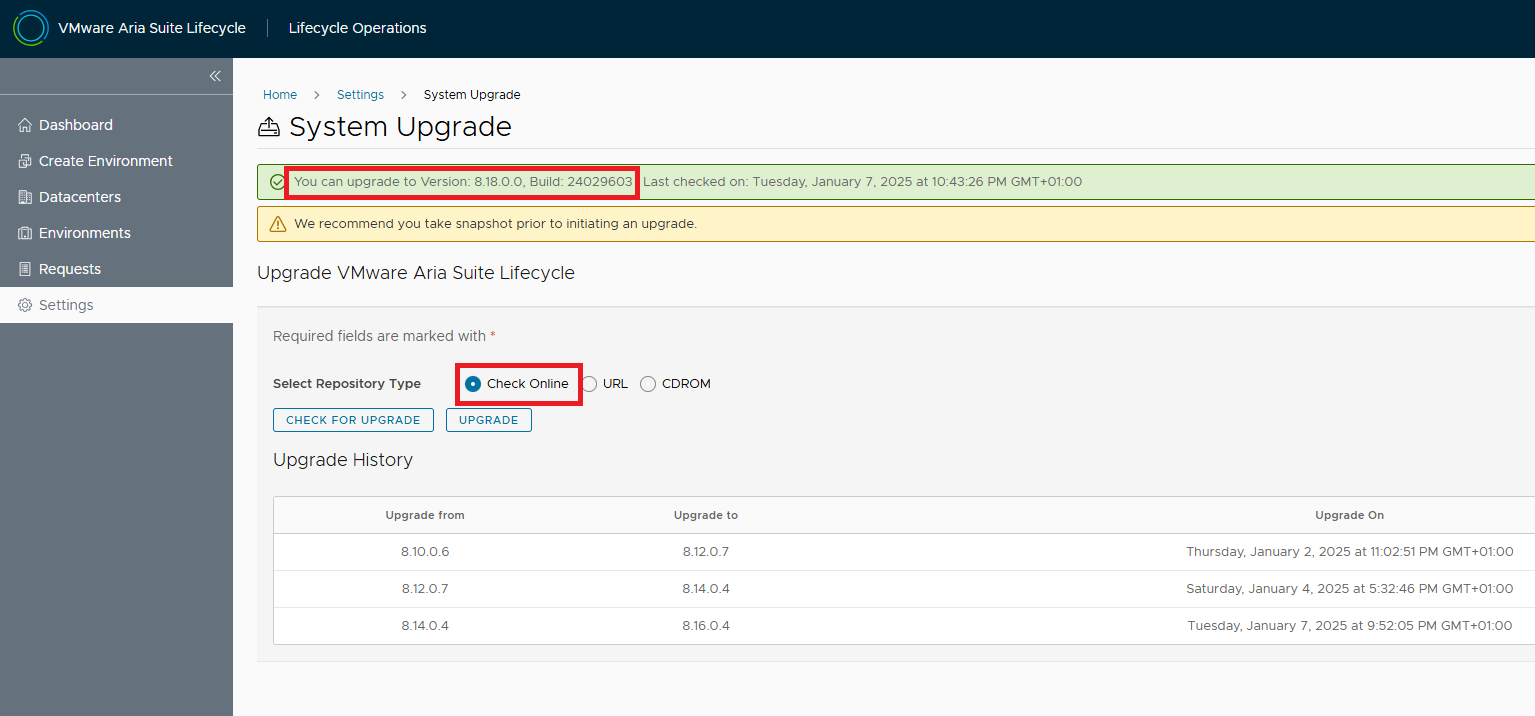

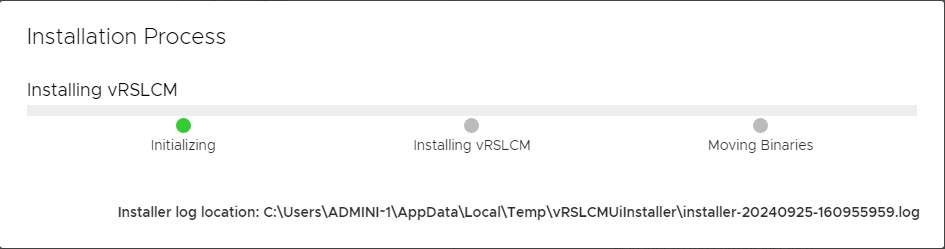

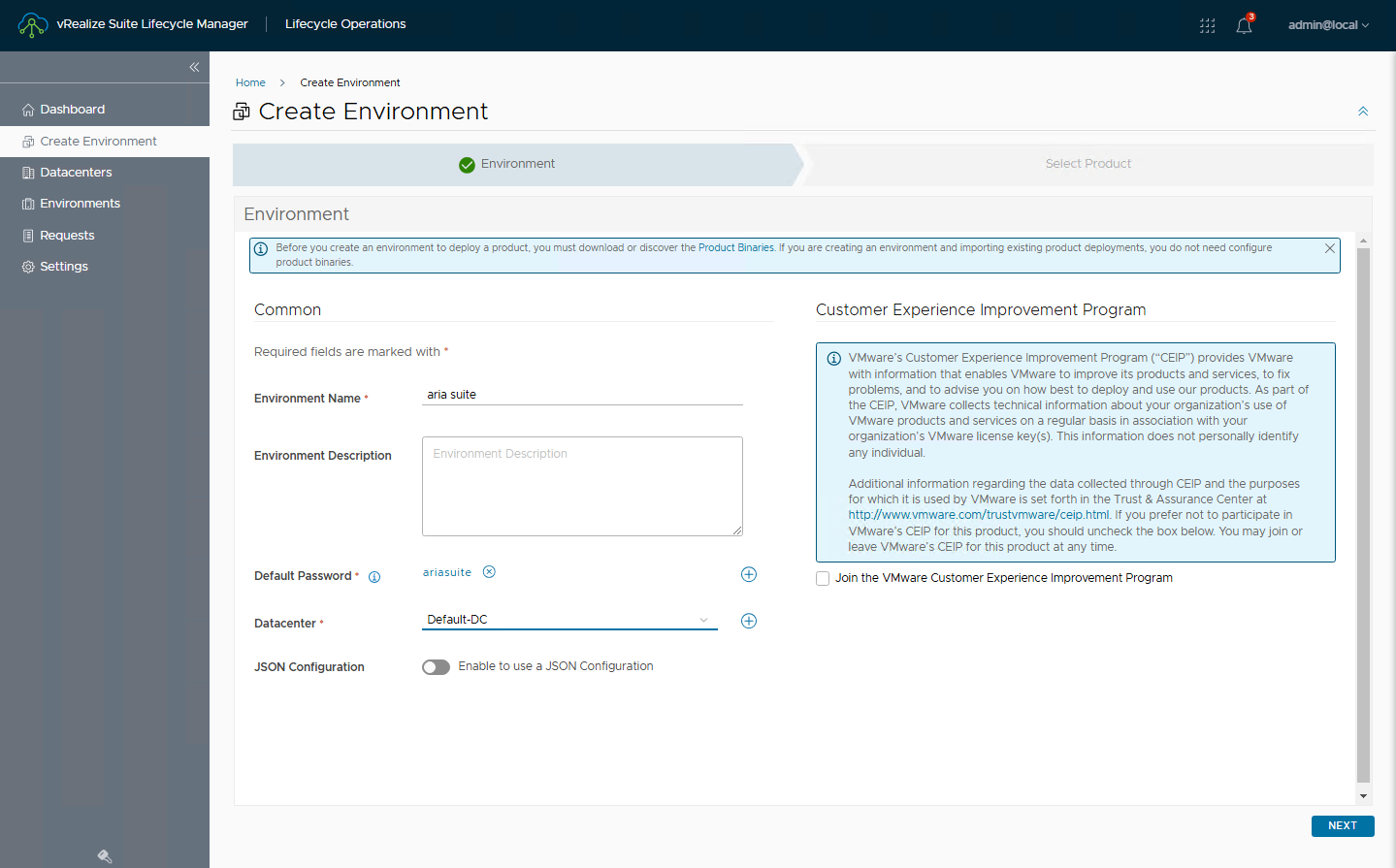

Once the upgrade path is defined, you can start the upgrade. Let’s start with Lifecycle Manager (LCM). The procedure is the same for all releases.

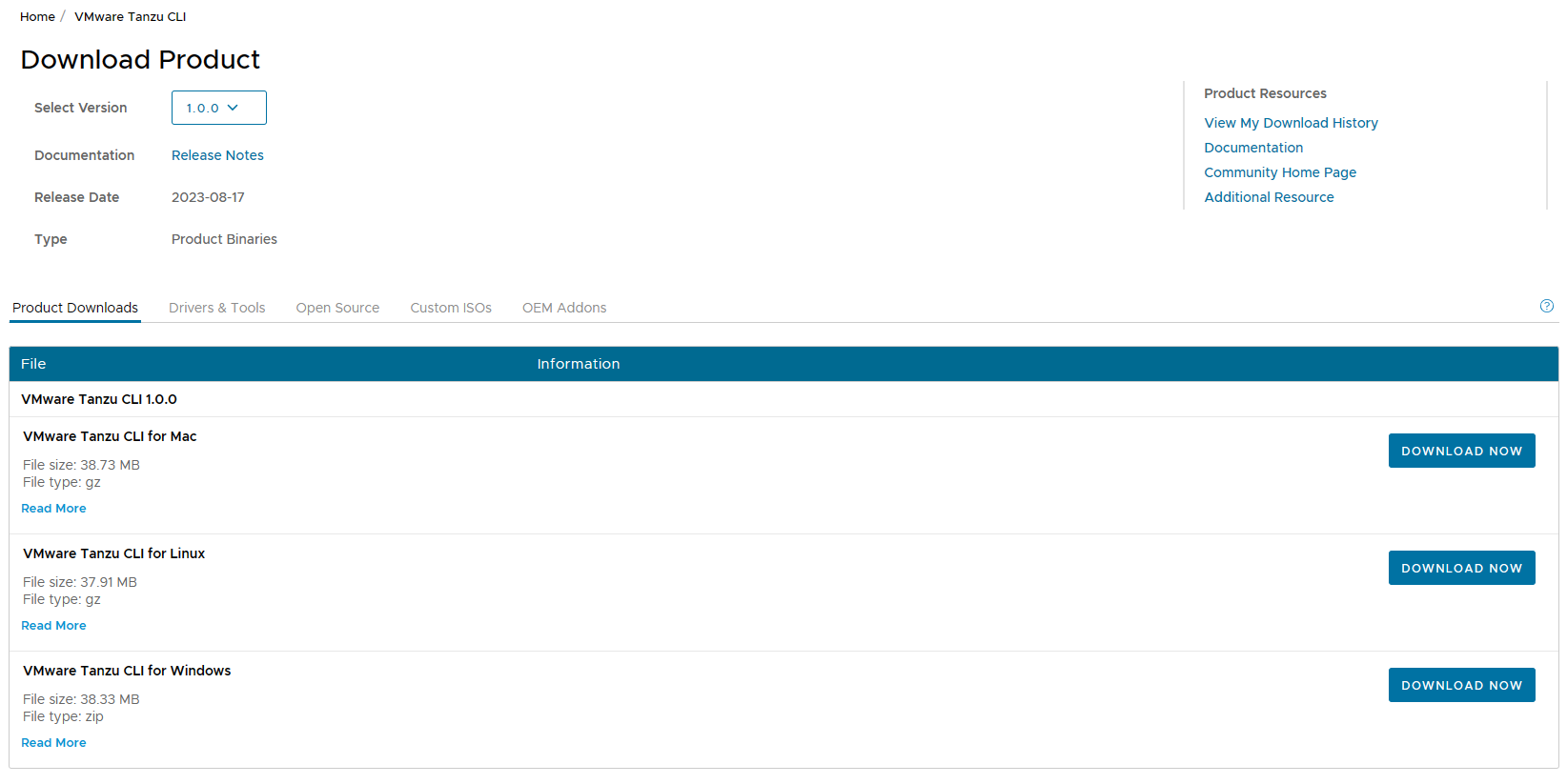

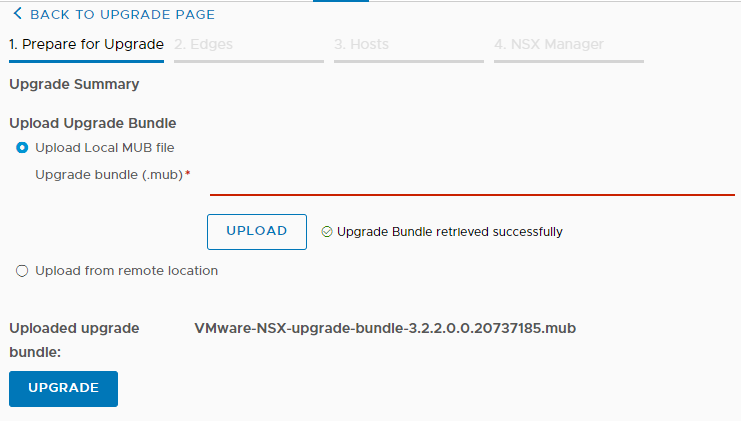

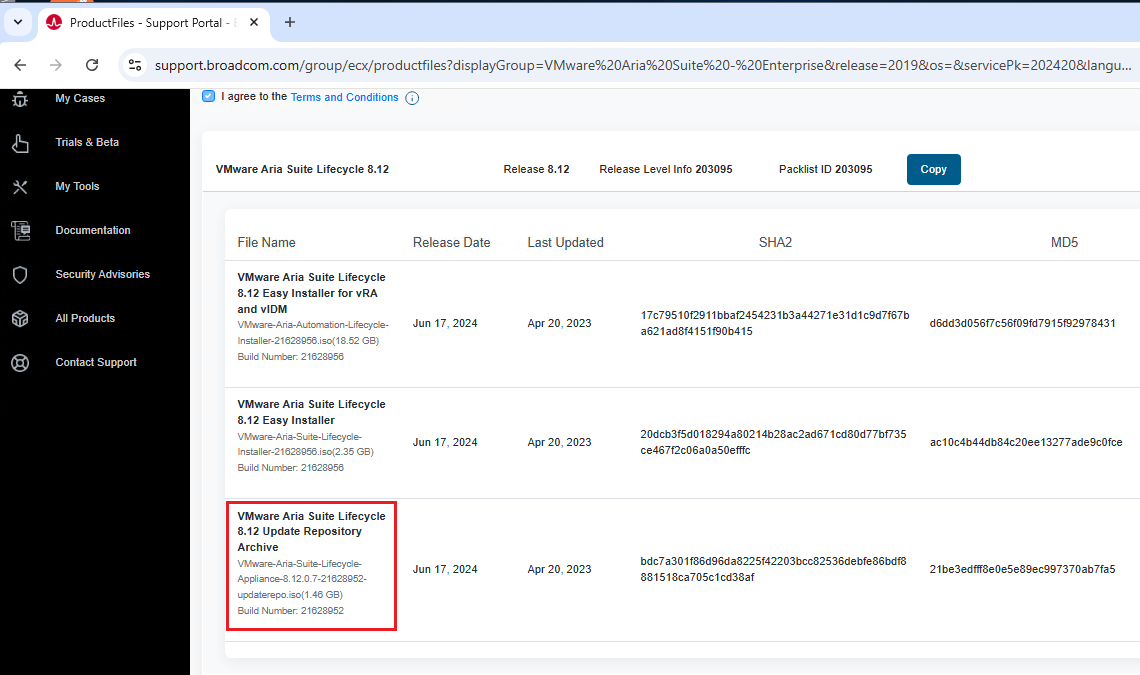

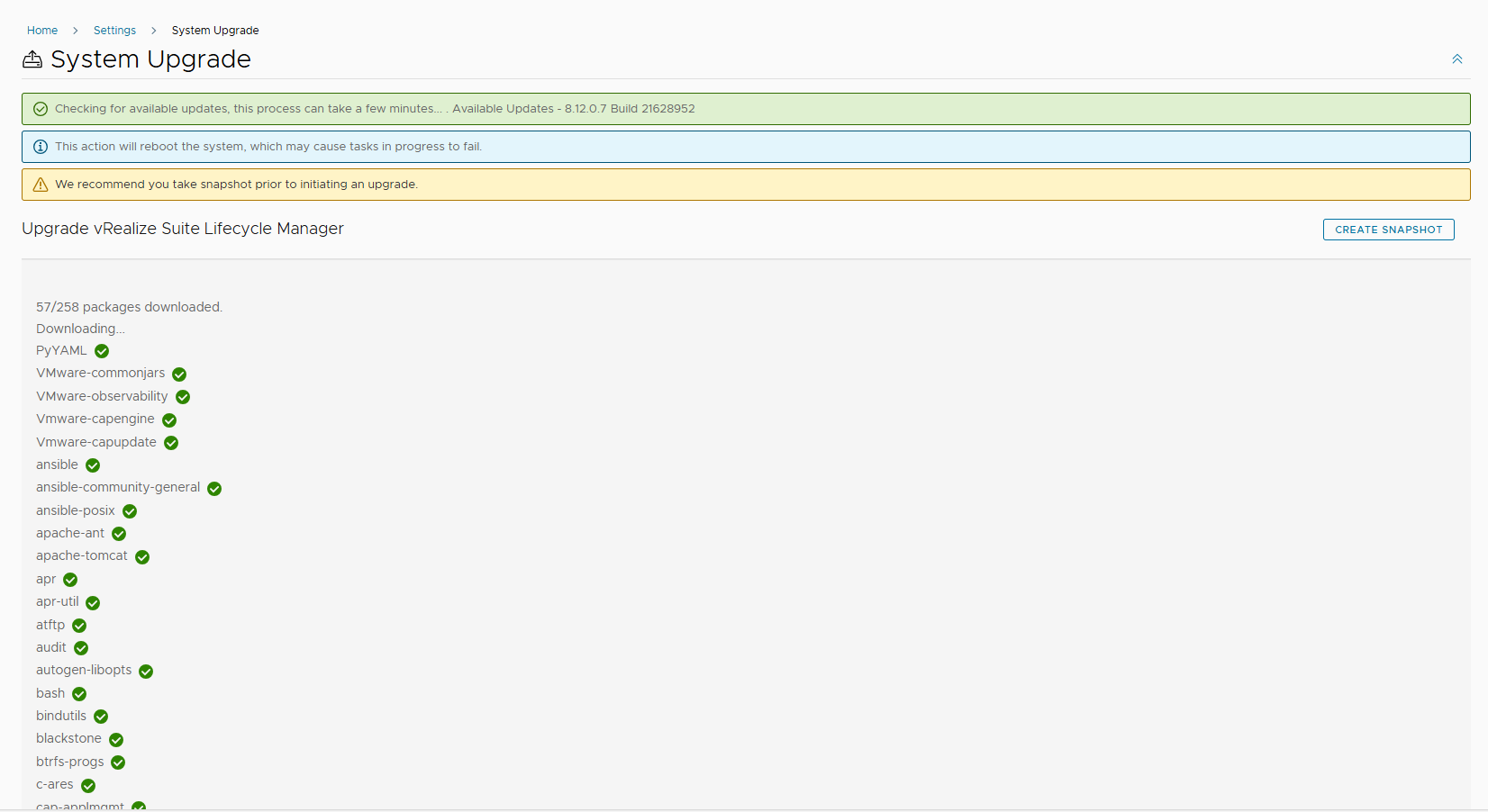

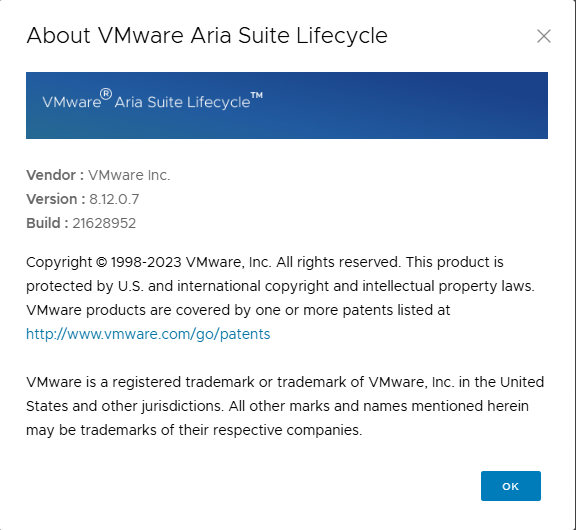

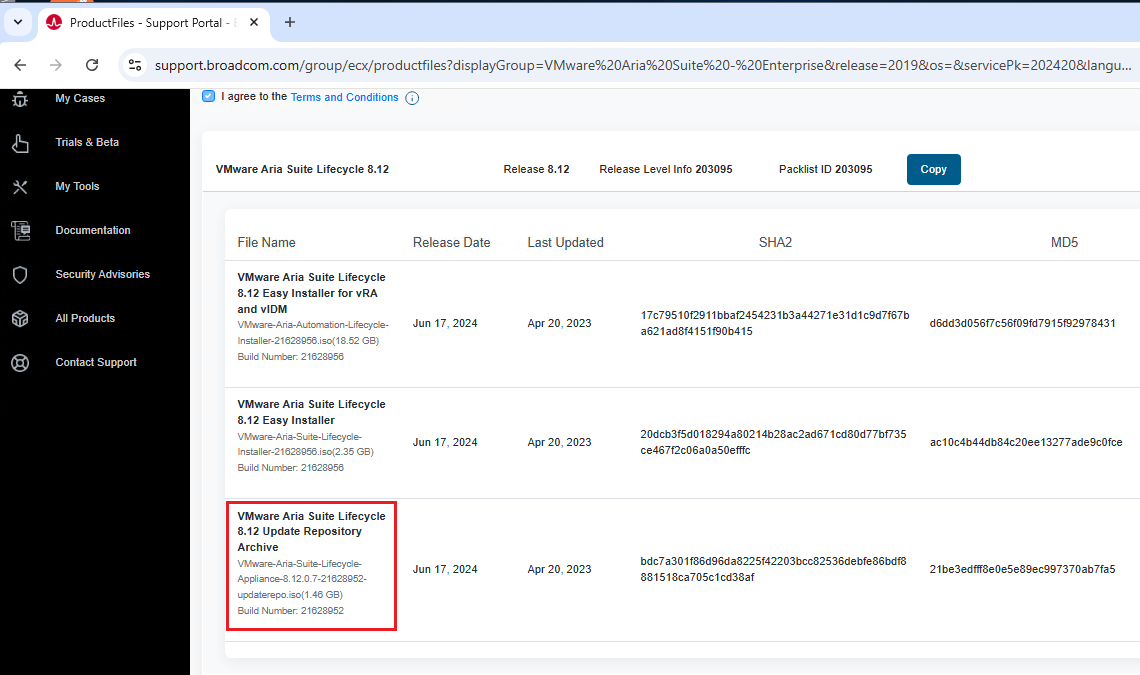

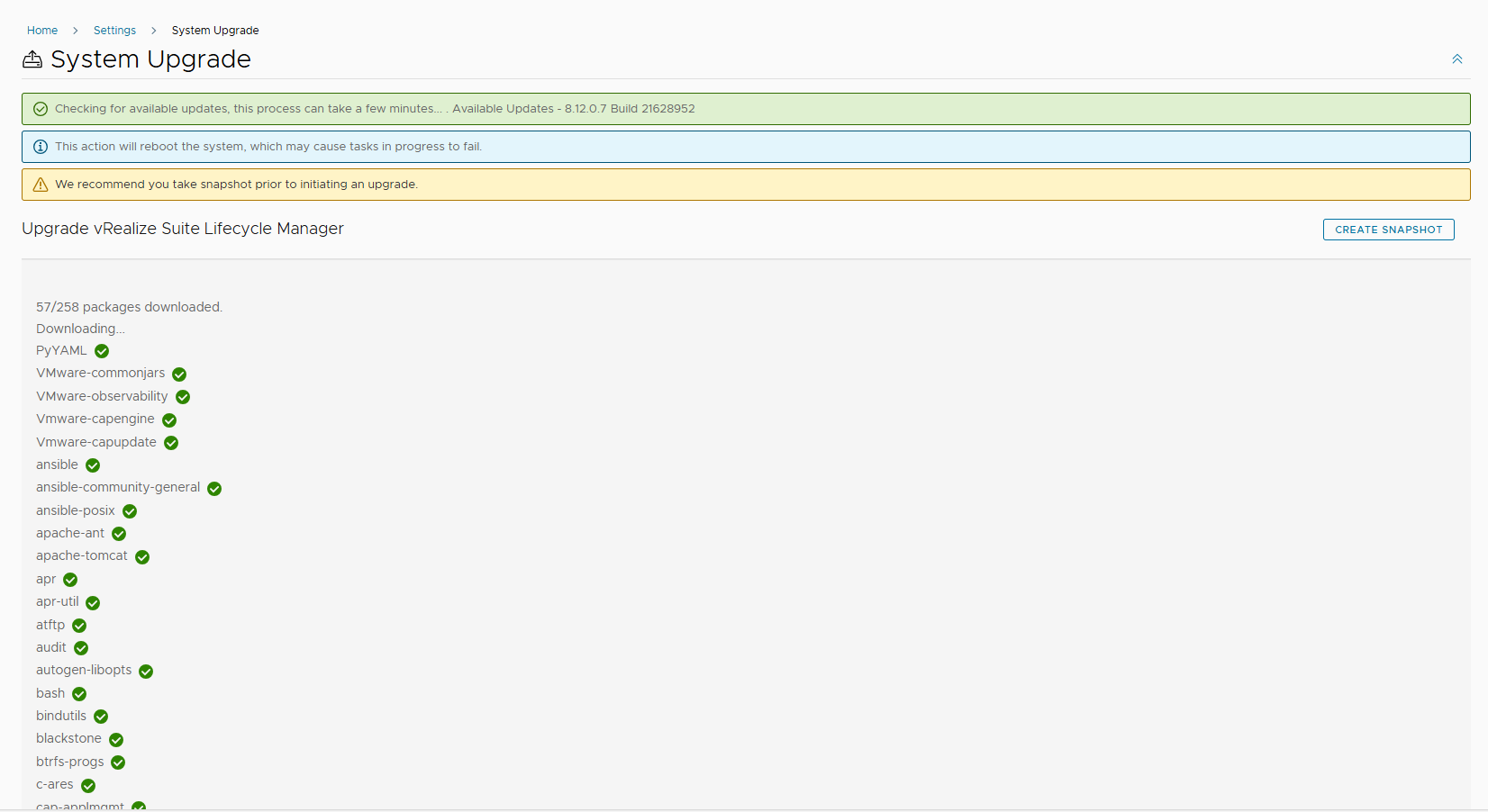

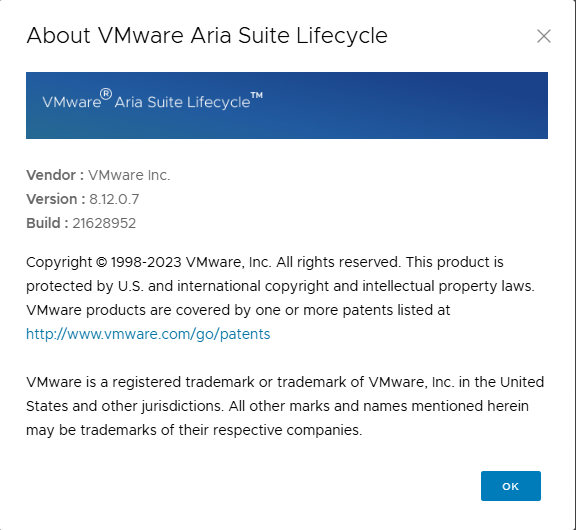

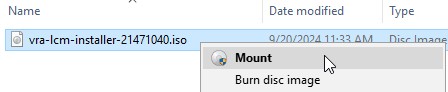

First we need to download the upgrade ISO from the Broadcom site. The file name is VMware-Aria-Suite-Lifecycle-Appliance-8.12.0.7-21628952-updaterepo.iso

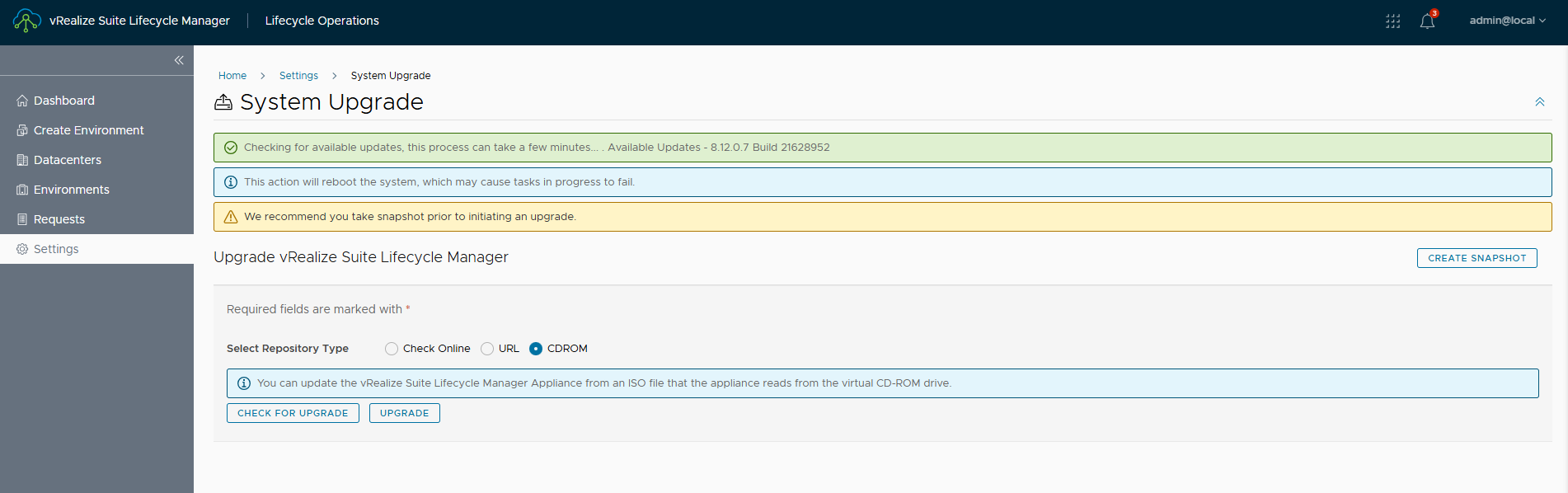

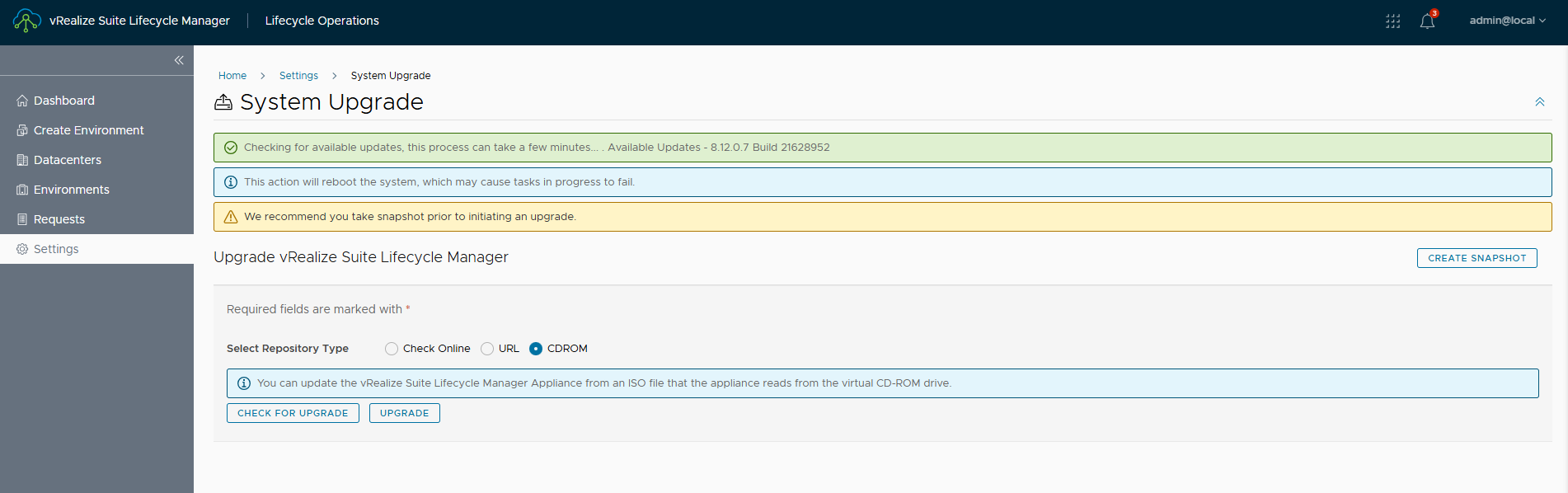

The ISO must be uploaded to a datastore of the cluster where LCM resides and connected to the appliance. Then, in LCM, go to Settings and then System Upgrade. Select CDROM as the repository and click CHECK FOR UPGRADE.

If the ISO is correctly connected, the release for the upgrade will be detected.

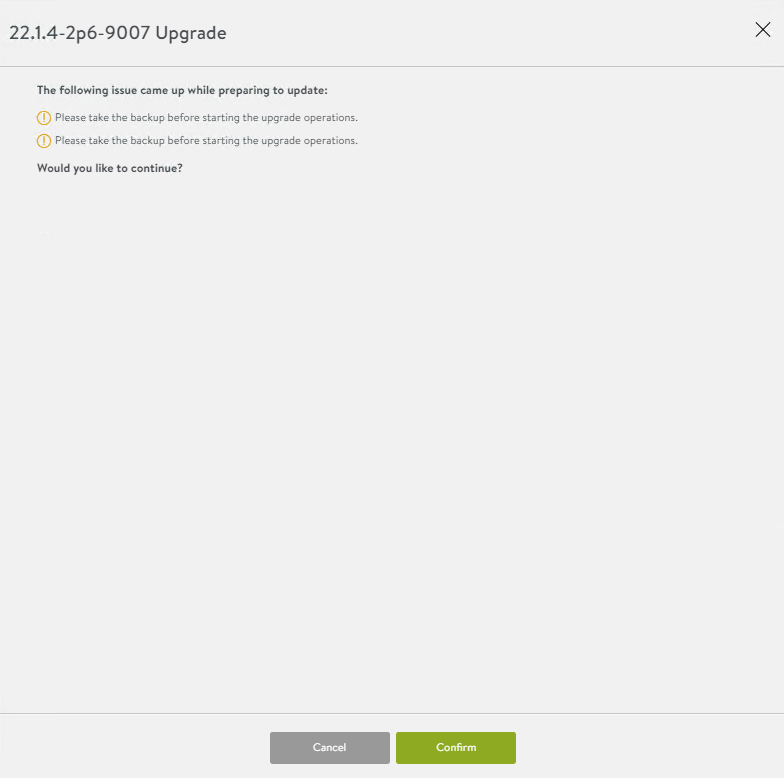

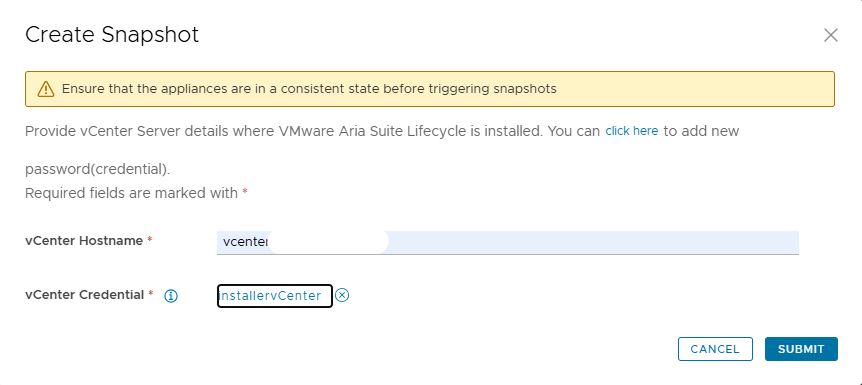

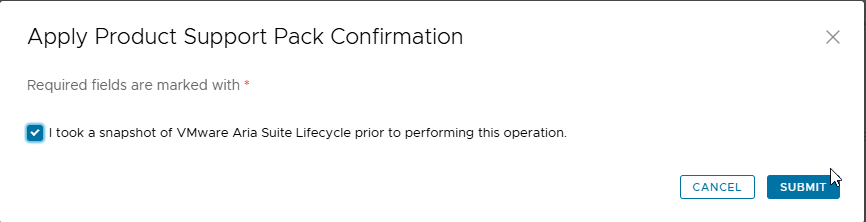

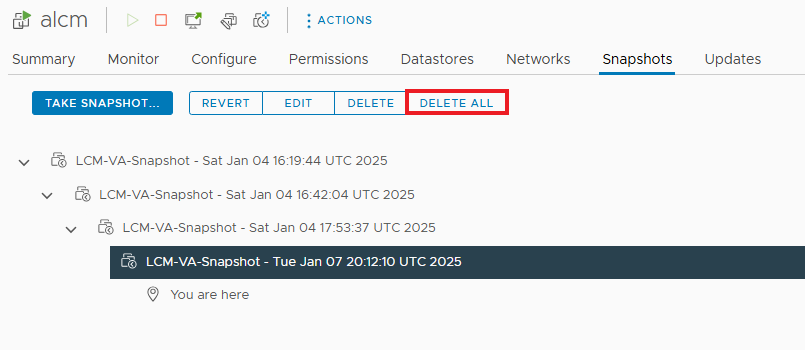

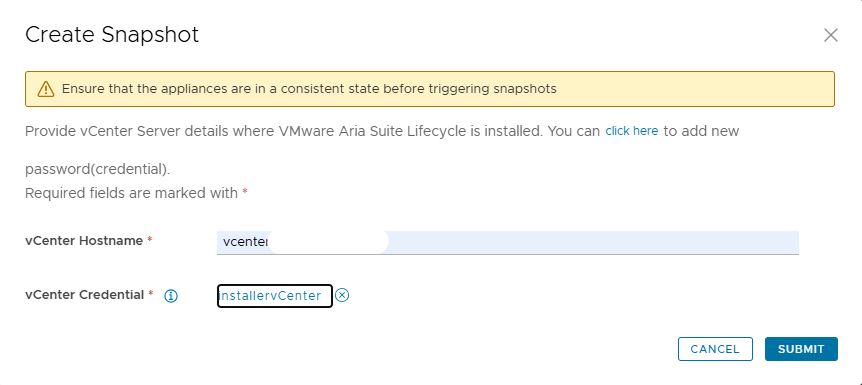

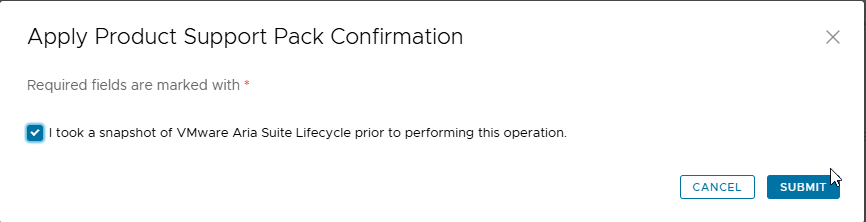

NOTE: before starting the upgrade, take a snapshot of LCM. If something goes wrong, we can always roll back.

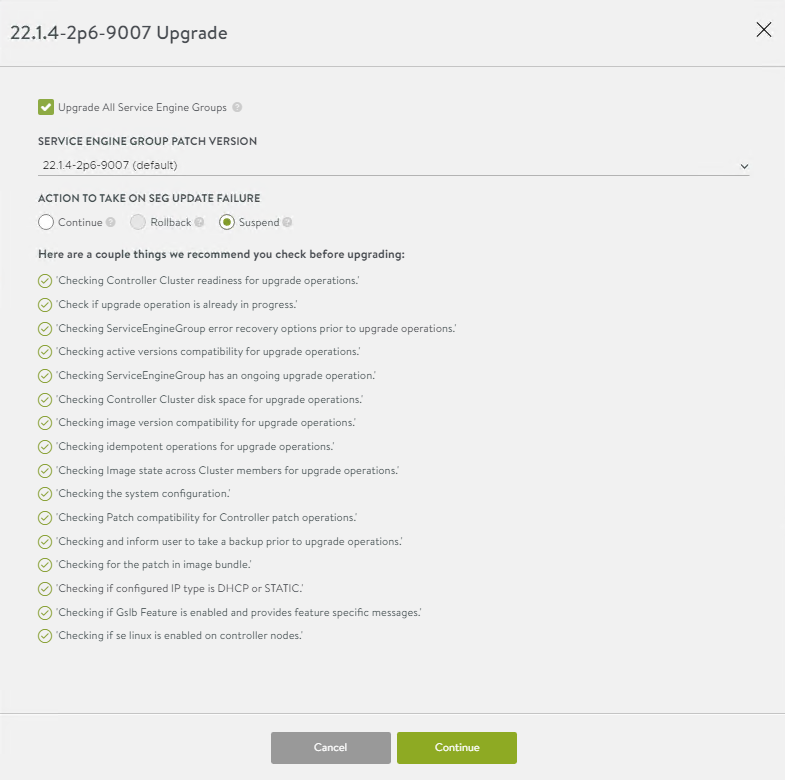

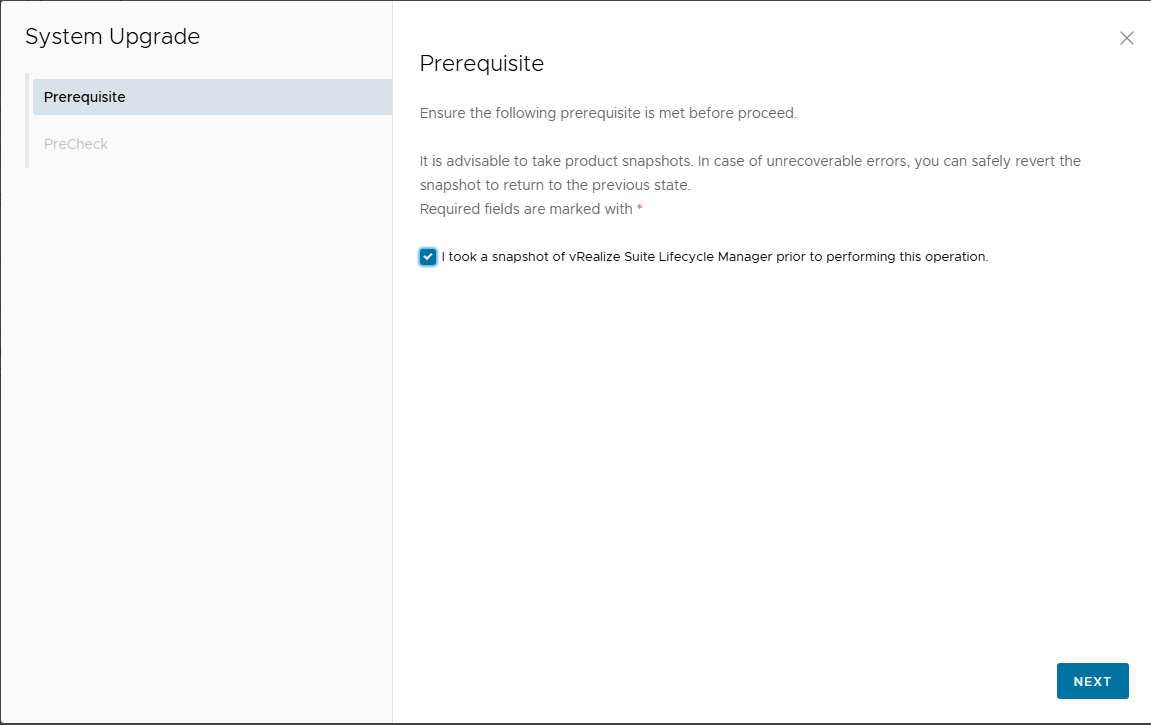

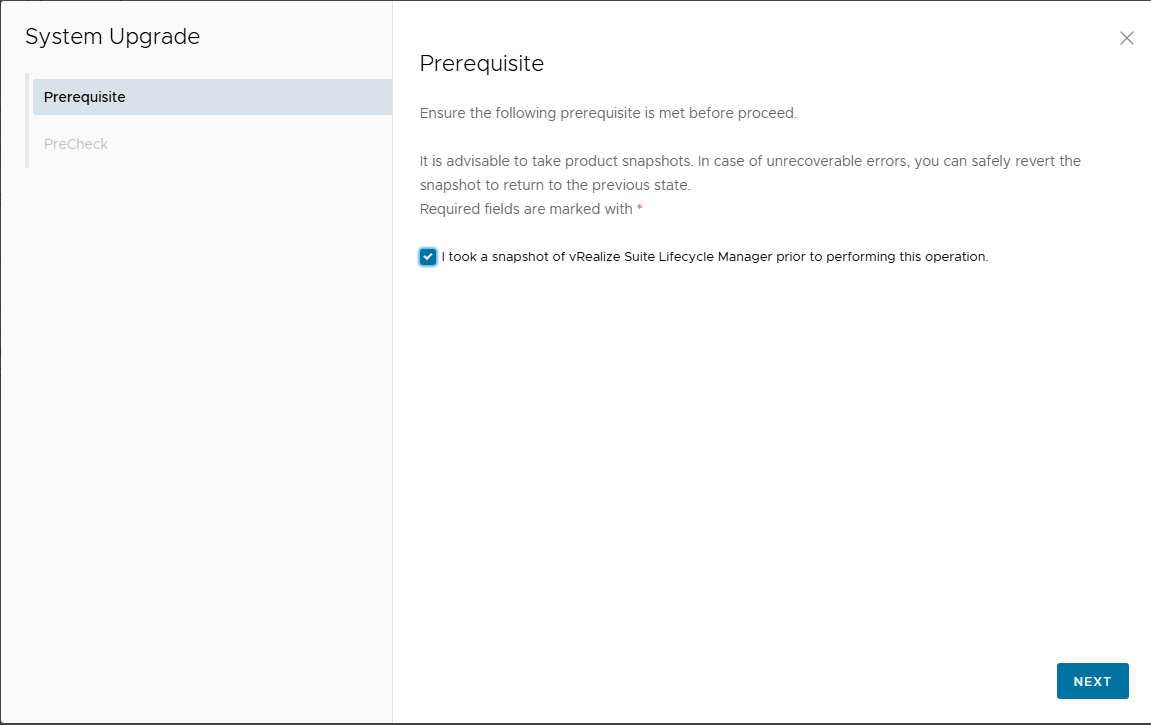

Let’s proceed with the upgrade. To continue, enable the snapshot flag.

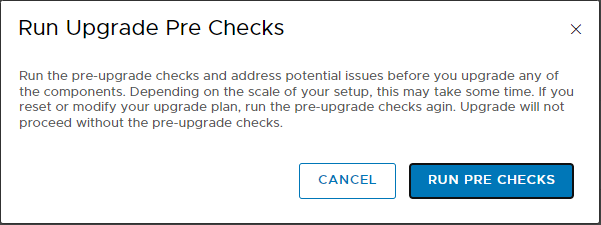

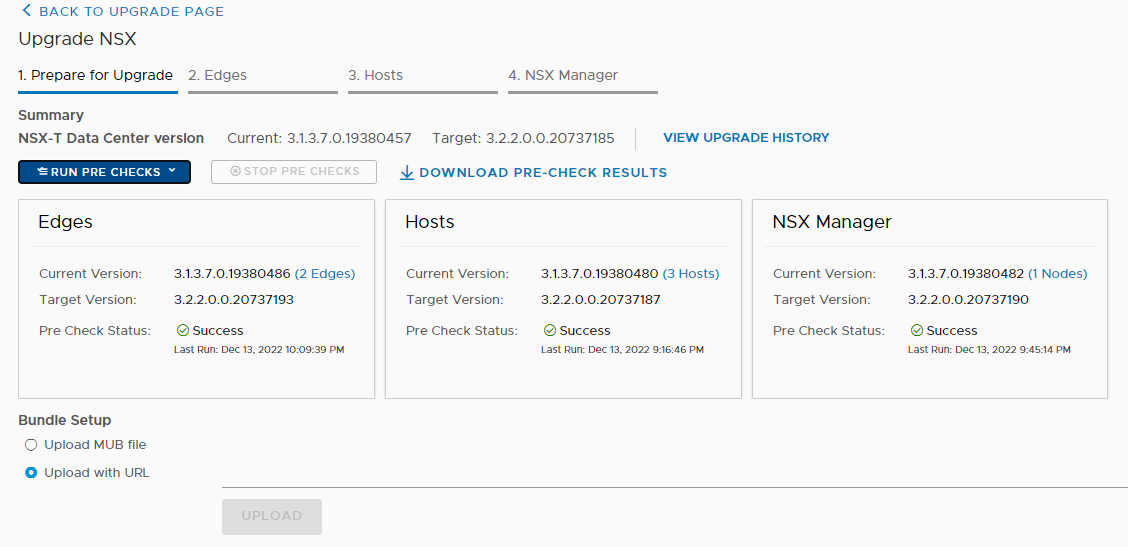

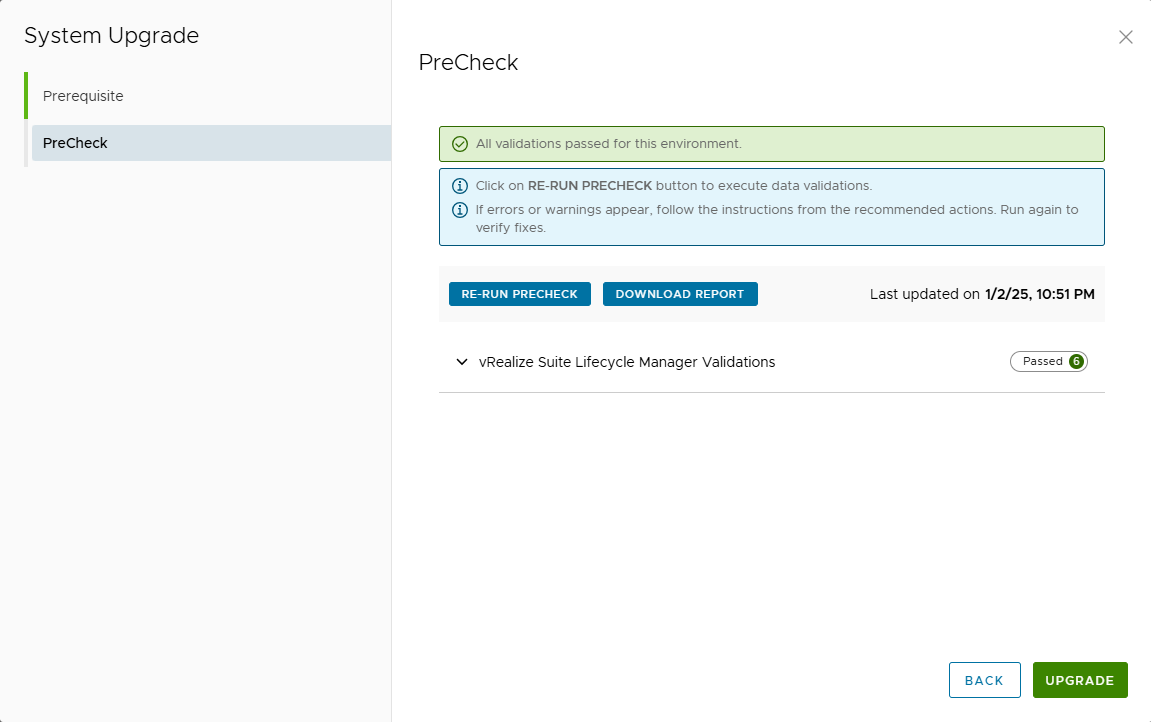

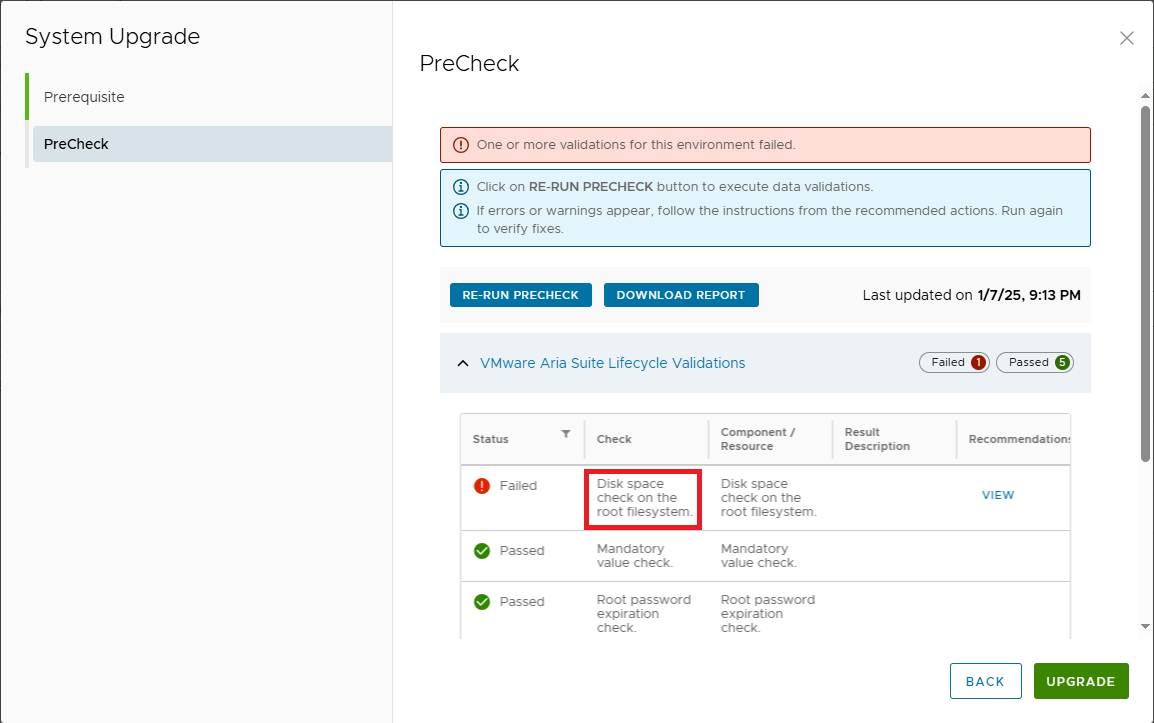

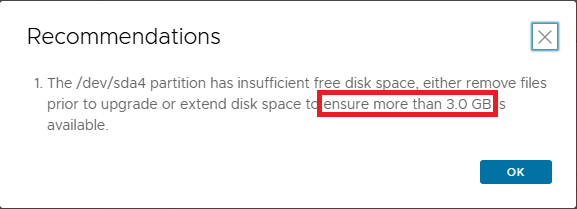

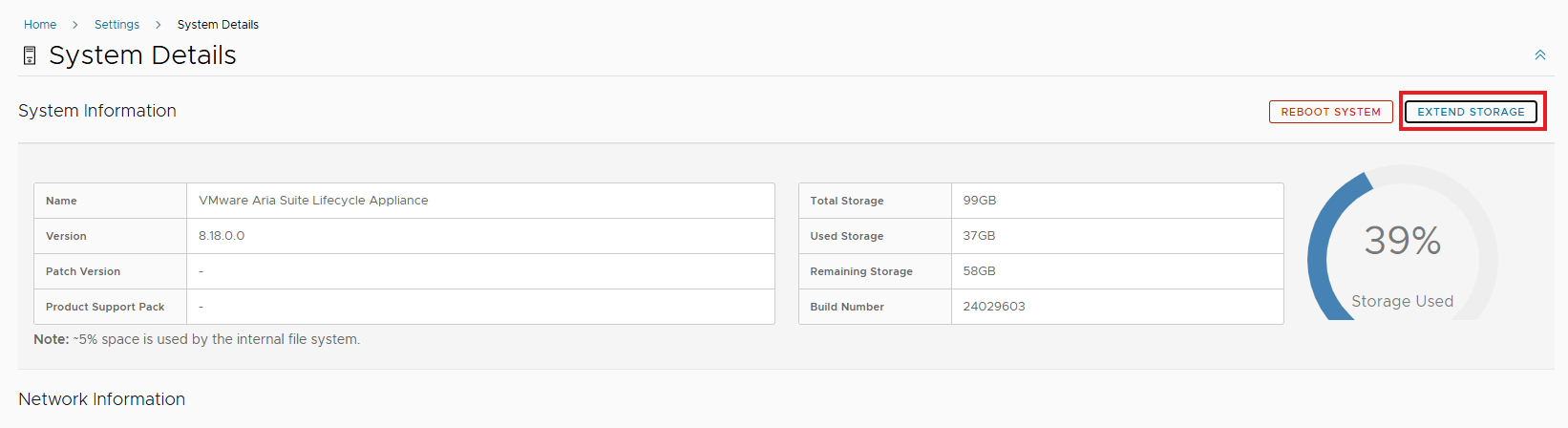

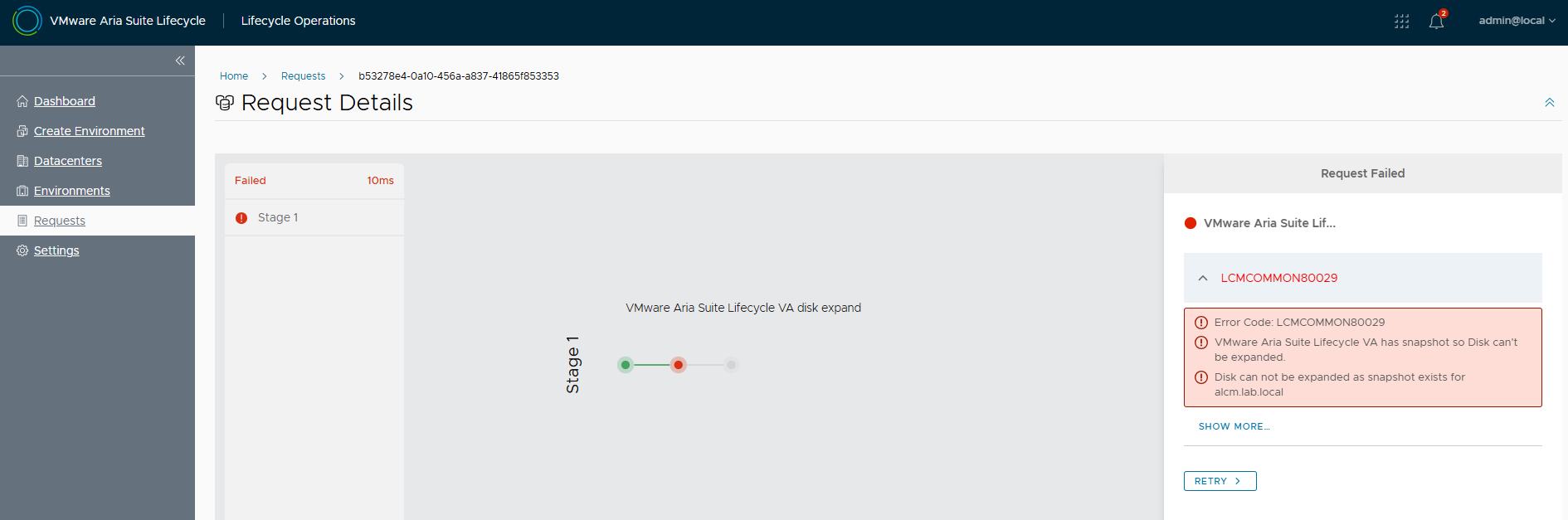

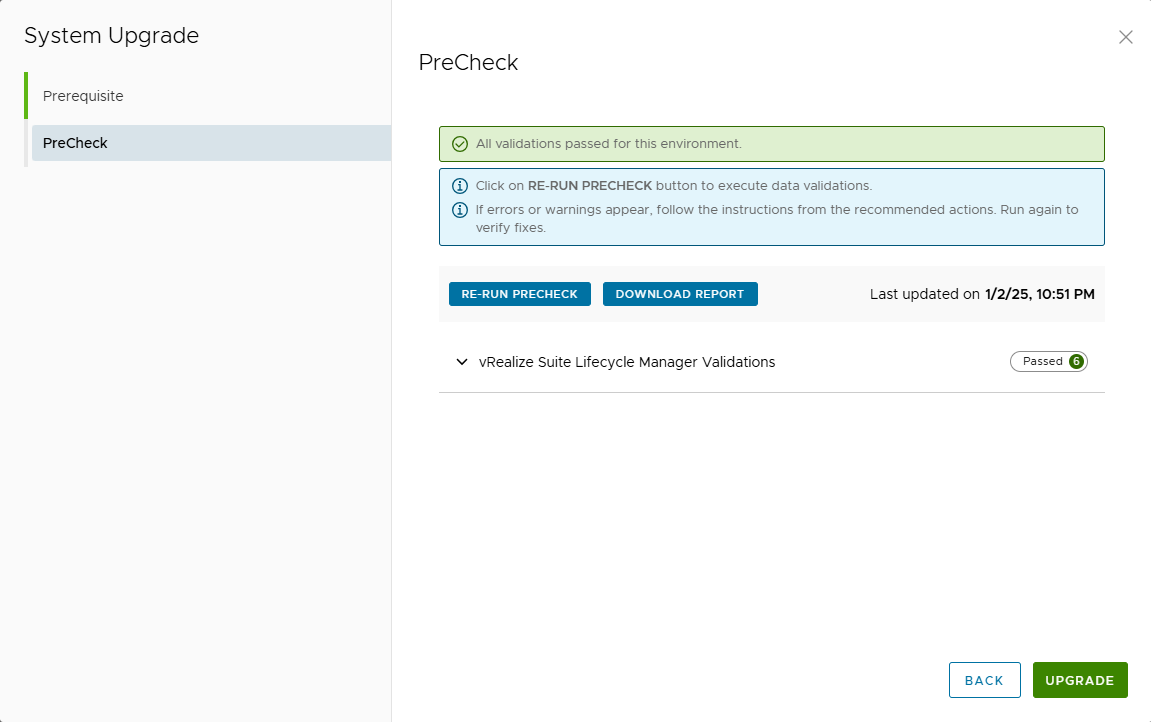

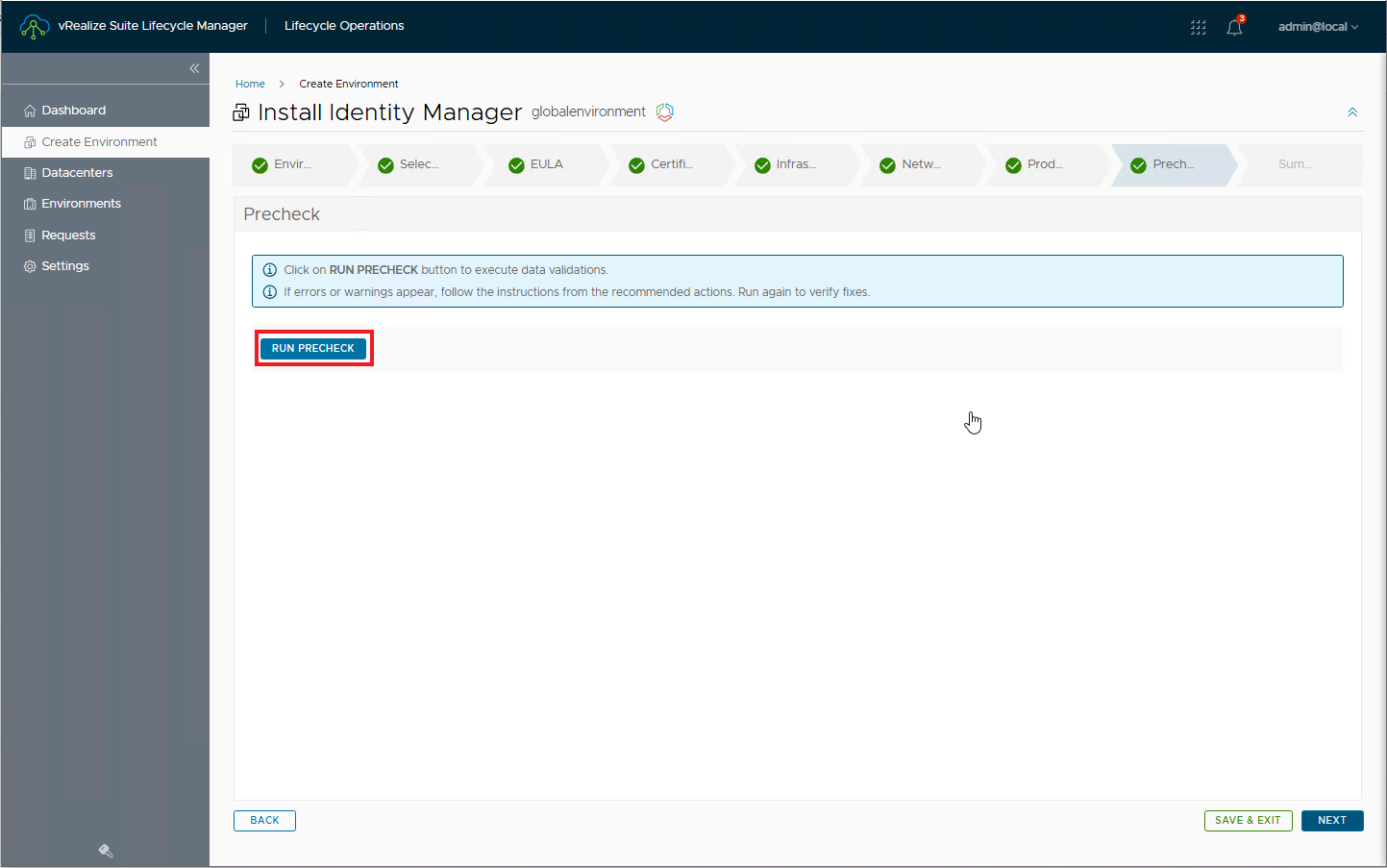

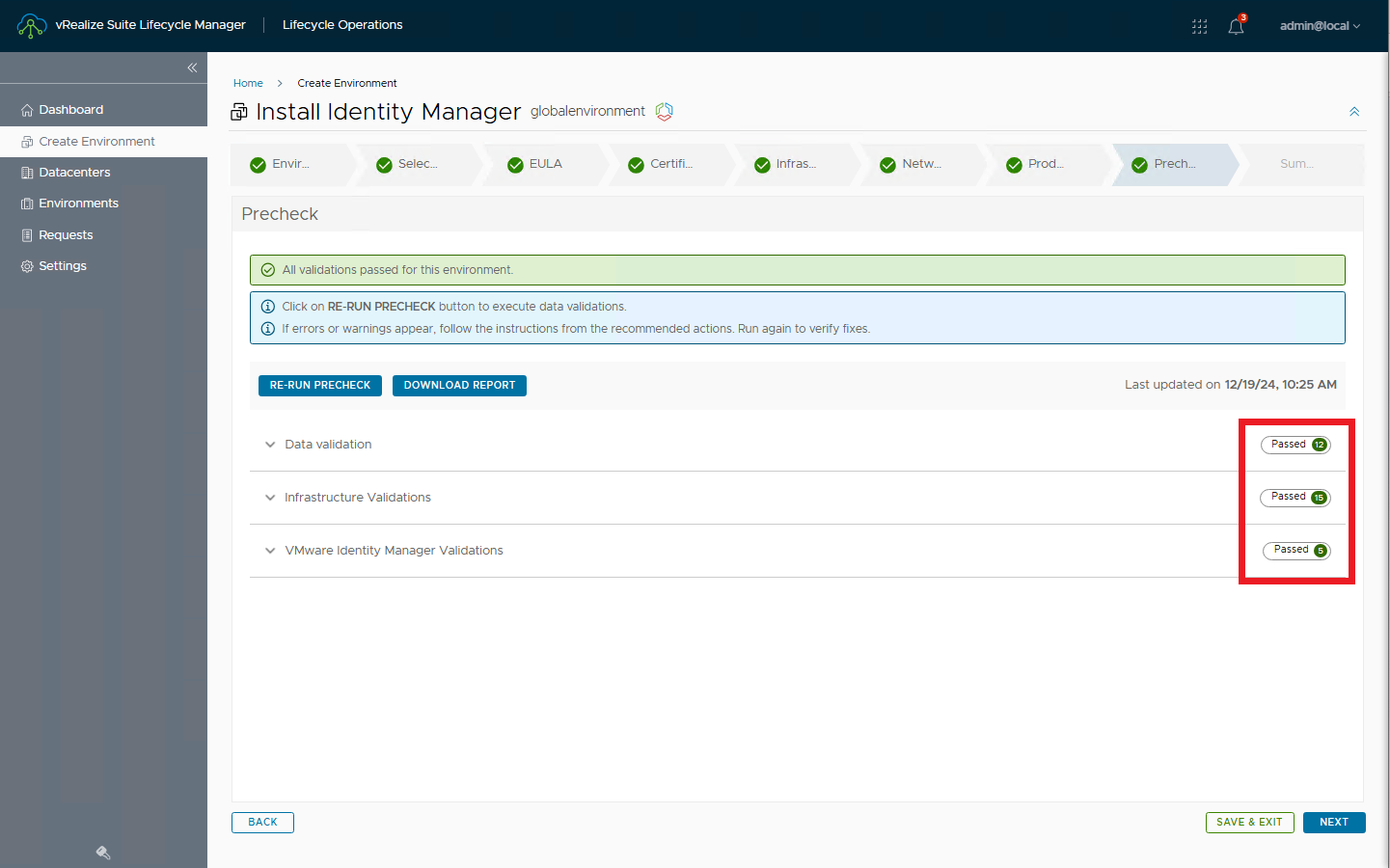

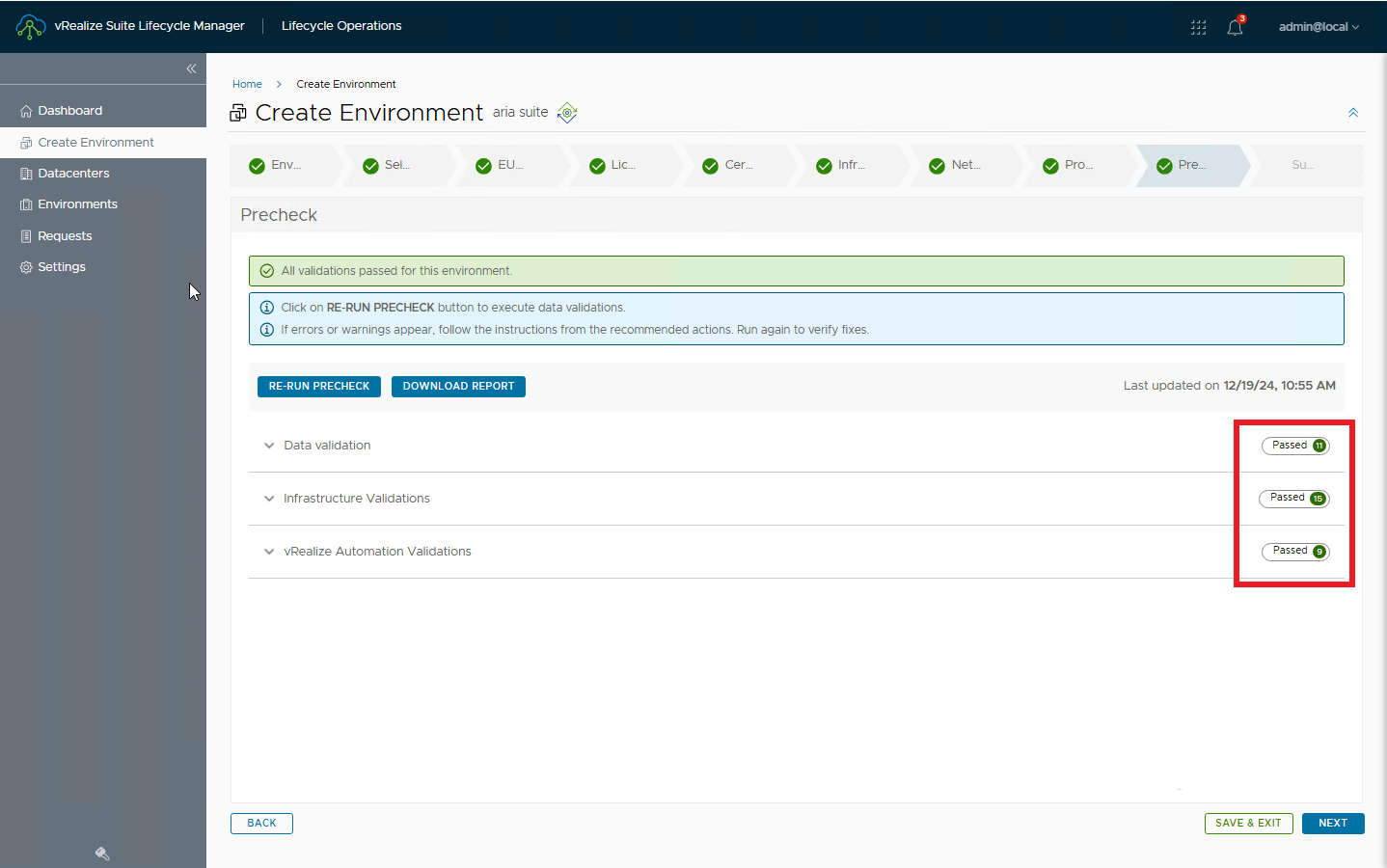

Run the pre-checks and verify that everything is OK.

Confirm with UPGRADE.

LCM will update and restart; wait for the services to come back up.

Upon completion you will be able to check the new release.
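If you prefer not to keep refreshing the browser while LCM restarts, the waiting can be scripted. The sketch below is a generic polling loop in Python, not something provided by LCM: `wait_until` is a hypothetical helper, and the `check` callable (for example, something that tries to open the LCM login page) is left to you, since I am not assuming any specific health endpoint. The default timeout and interval are arbitrary values.

```python
import time


def wait_until(check, timeout_s=1800, interval_s=30,
               sleep=time.sleep, clock=time.monotonic):
    """Call check() until it returns True or timeout_s elapses.

    check is any zero-argument callable supplied by the caller. Exceptions
    raised by check() are treated as "not ready yet", because the appliance
    may refuse connections while it is rebooting.
    """
    deadline = clock() + timeout_s
    while True:
        try:
            if check():
                return True
        except Exception:
            pass  # service not reachable yet, keep waiting
        if clock() >= deadline:
            return False
        sleep(interval_s)
```

The function returns `True` as soon as the check succeeds and `False` if the timeout expires first, so a script can decide whether to continue with the next step or stop and investigate.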

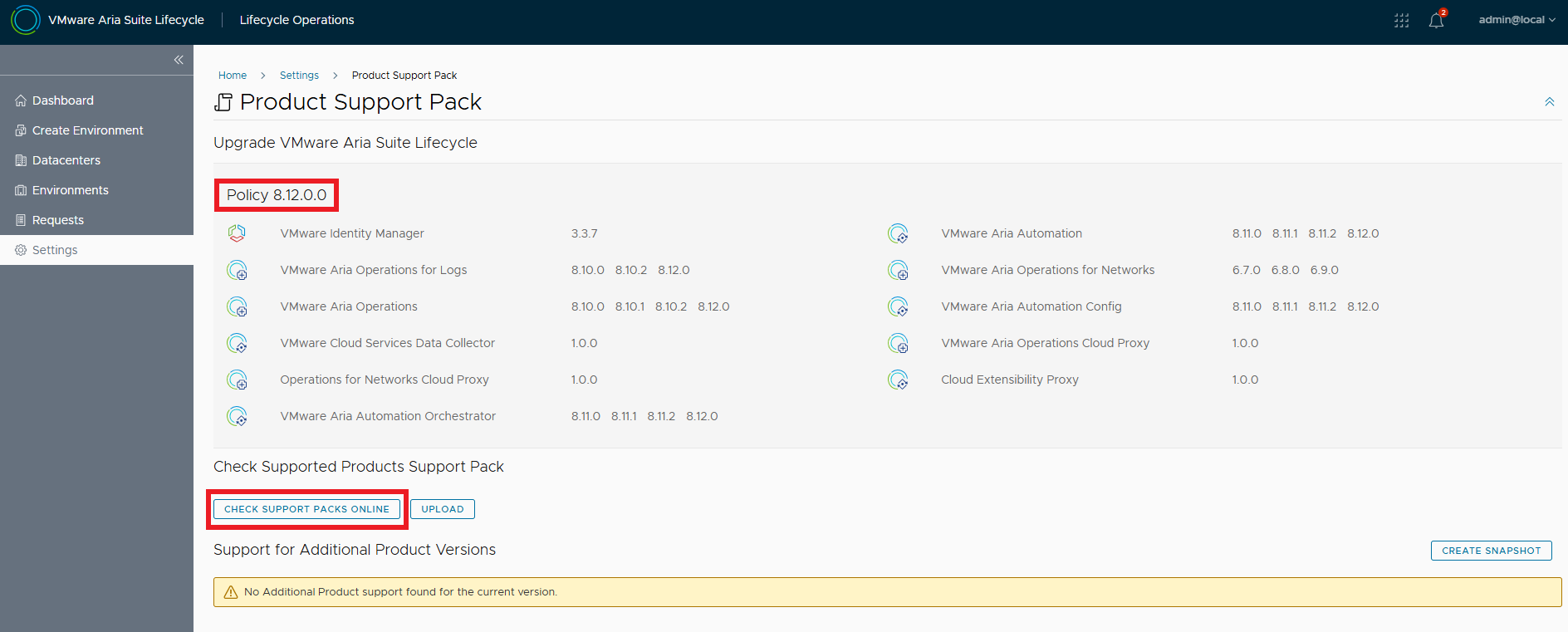

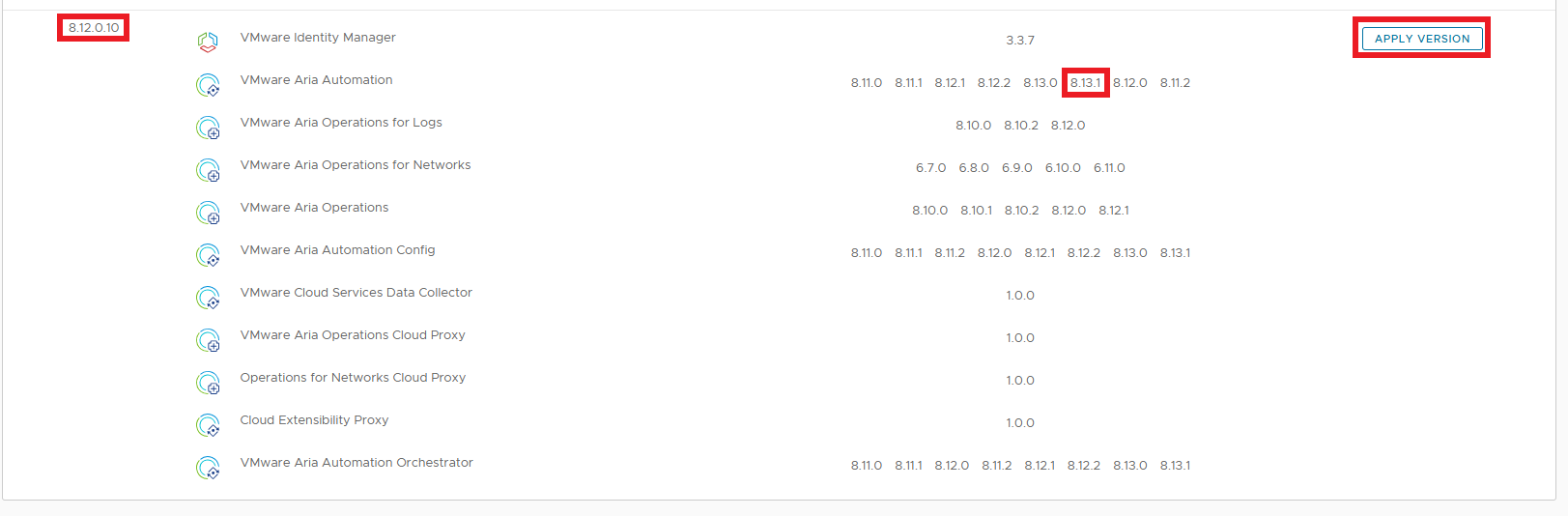

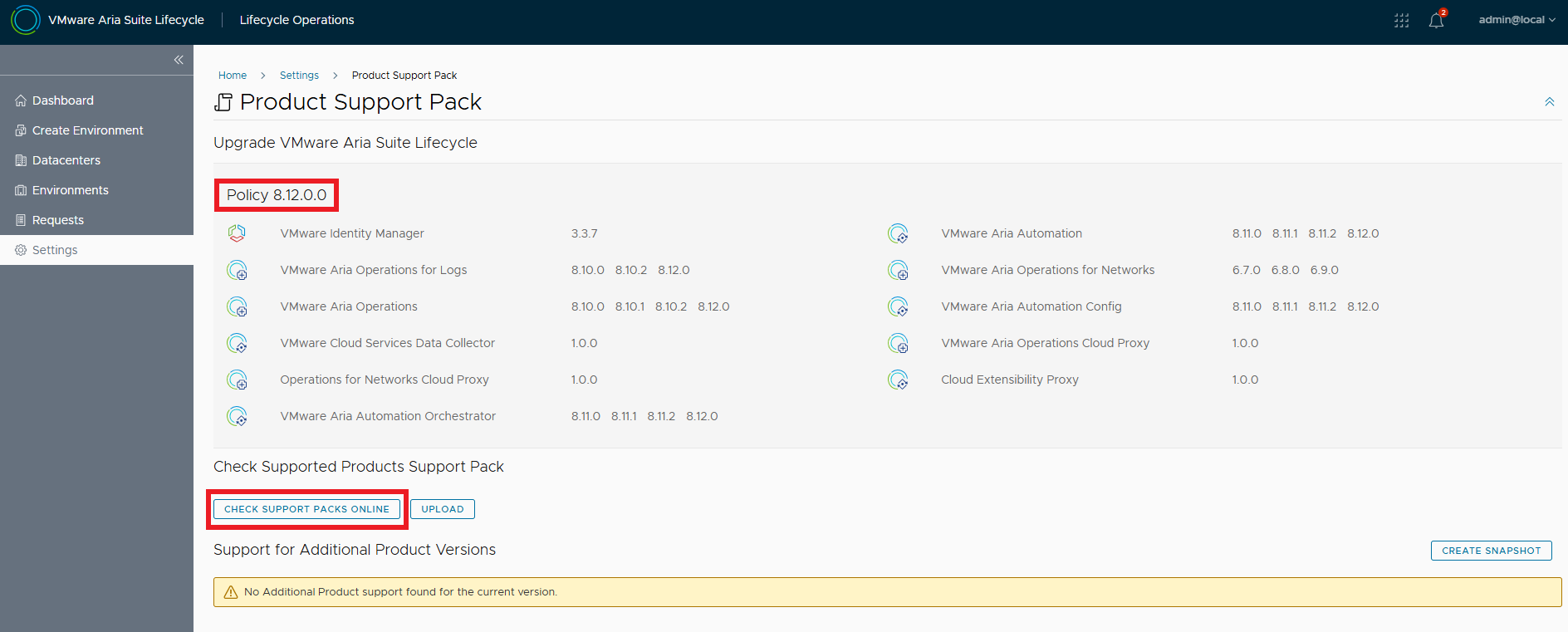

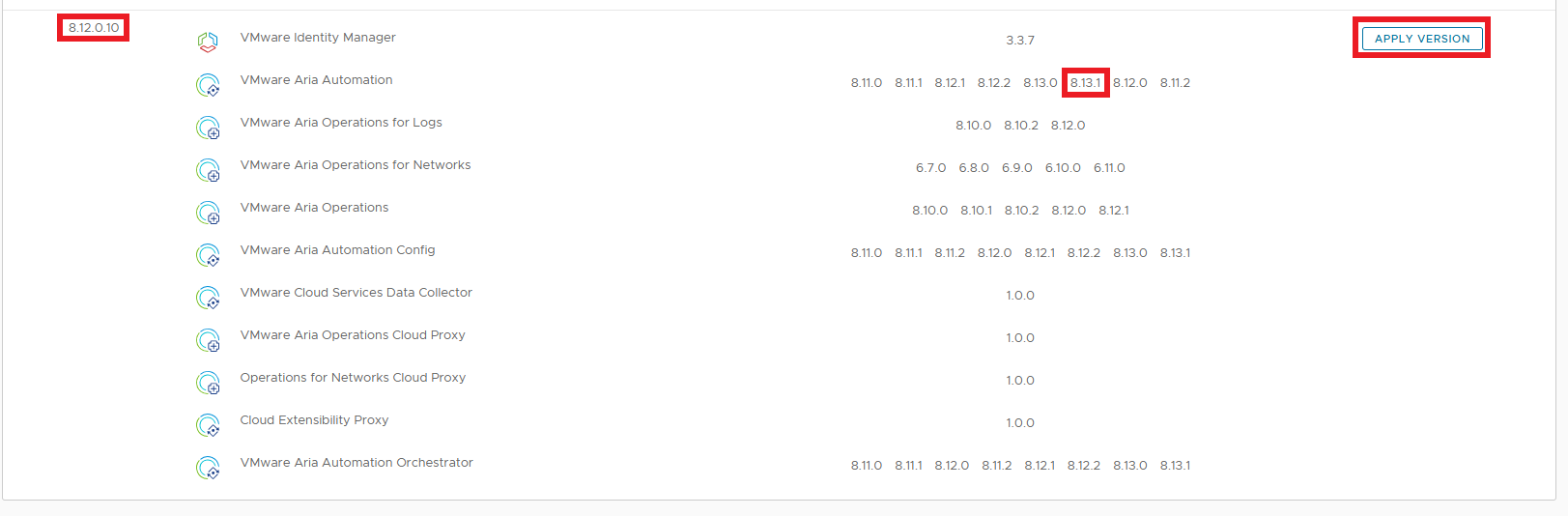

Once the upgrade is complete, install the latest Product Support Pack: go to Settings and then Product Support Pack. The active pack is the one delivered with the upgrade; check whether more recent ones are available.

Select the latest one and click APPLY VERSION.

We see that the latest Support Pack supports exactly the version of Aria Automation that we need for the upgrade step 2.

LCM must be restarted for the new configurations to take effect.

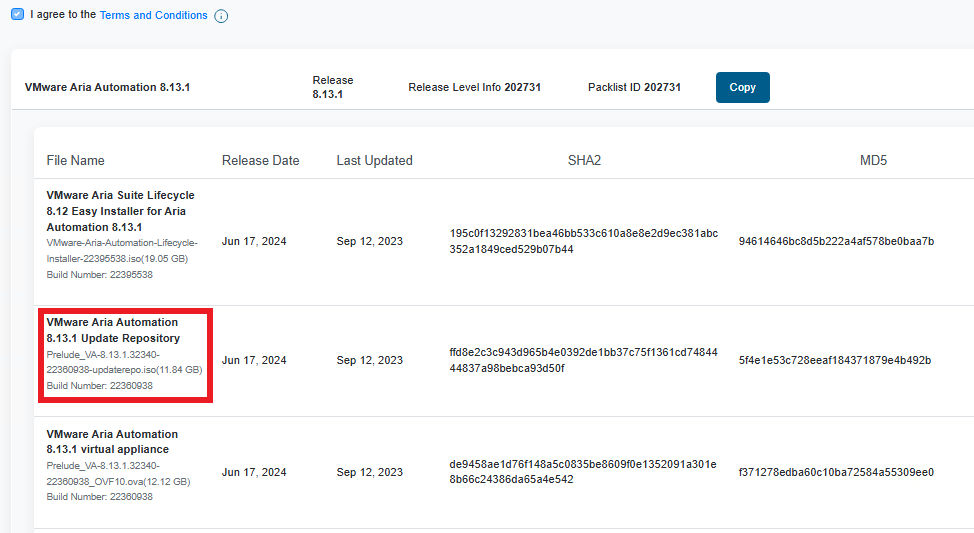

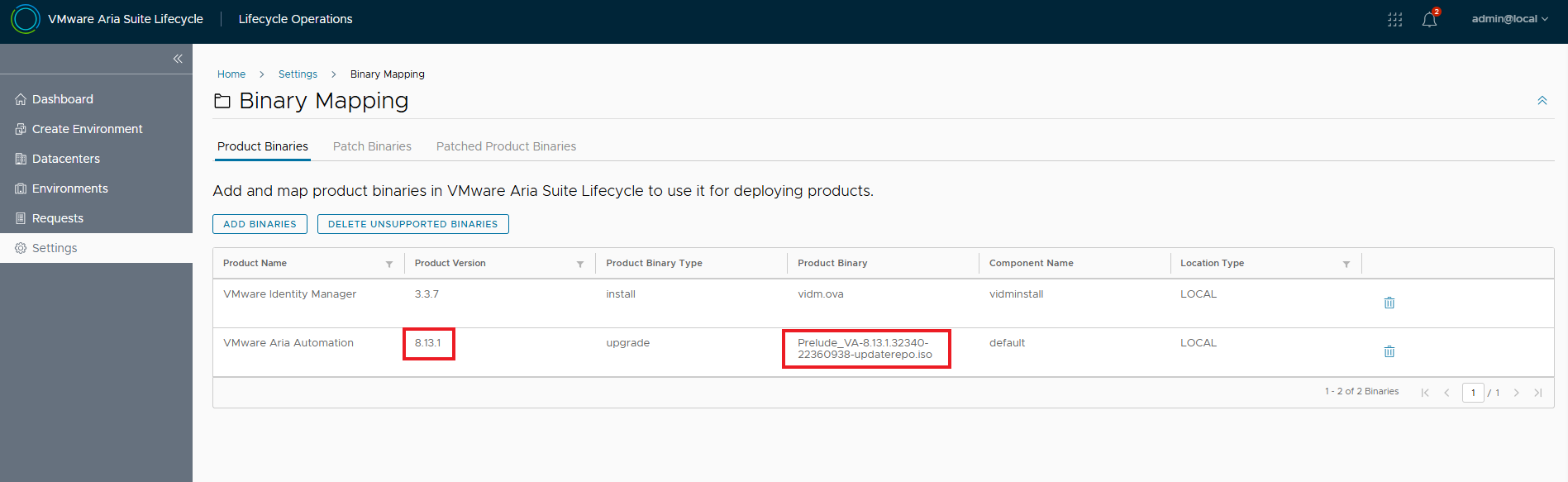

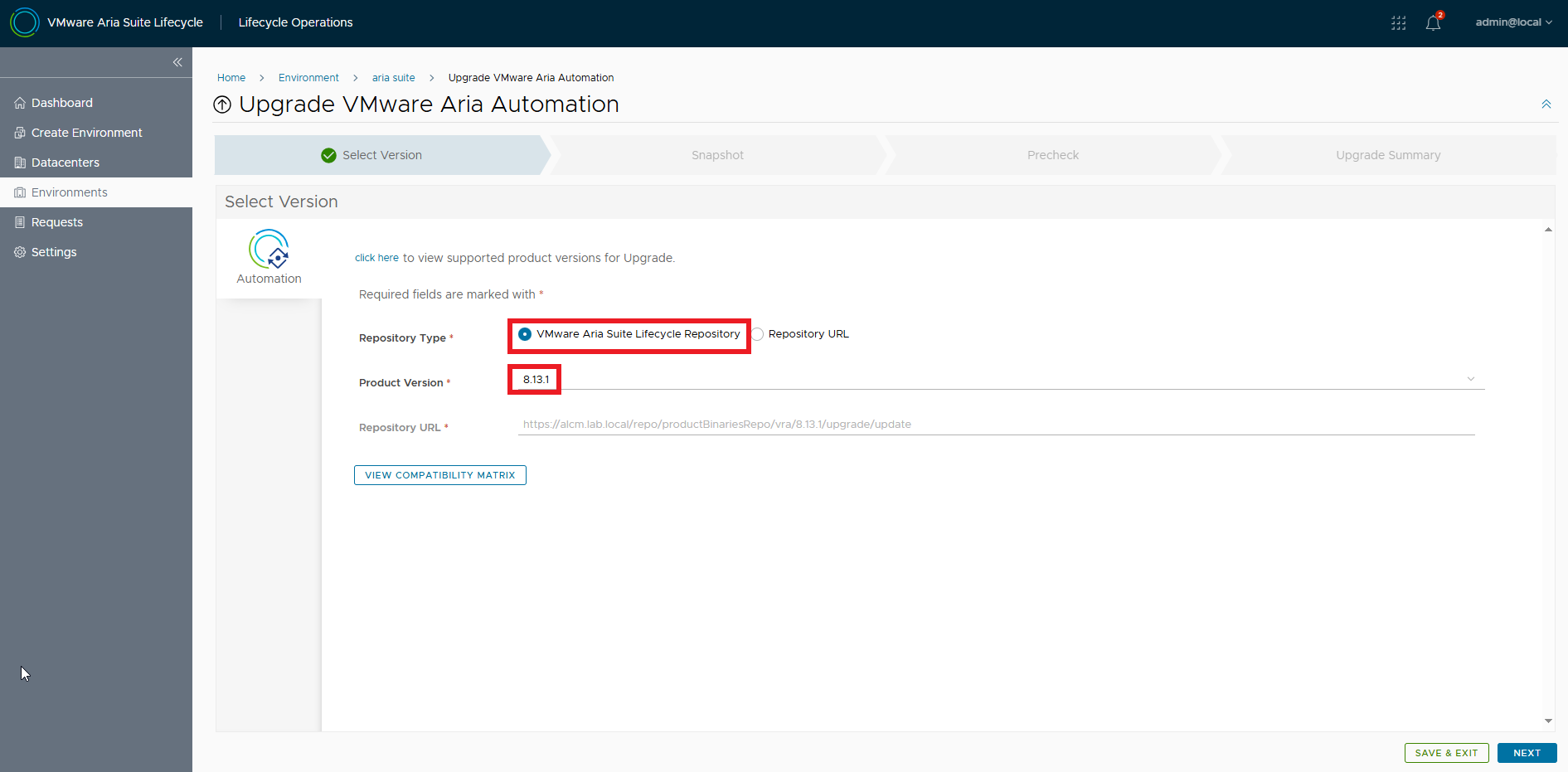

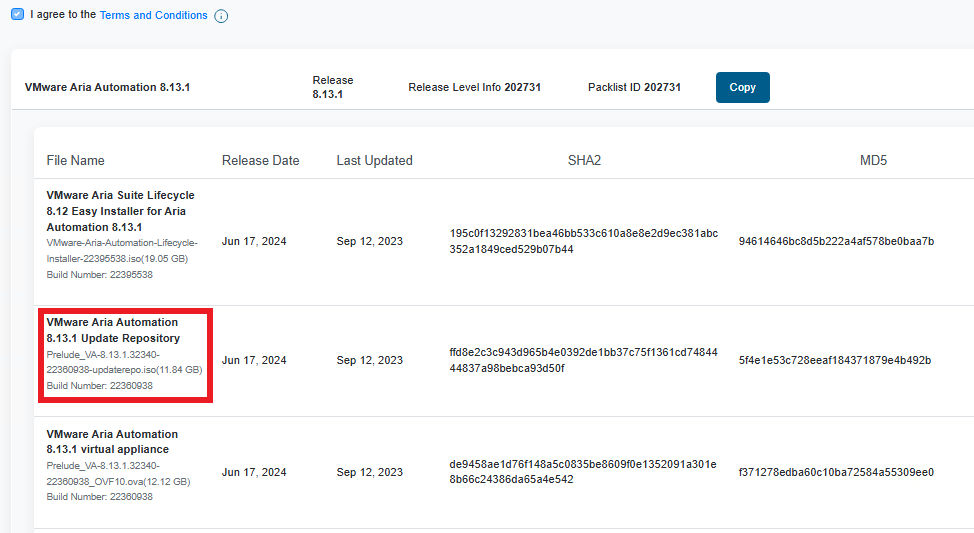

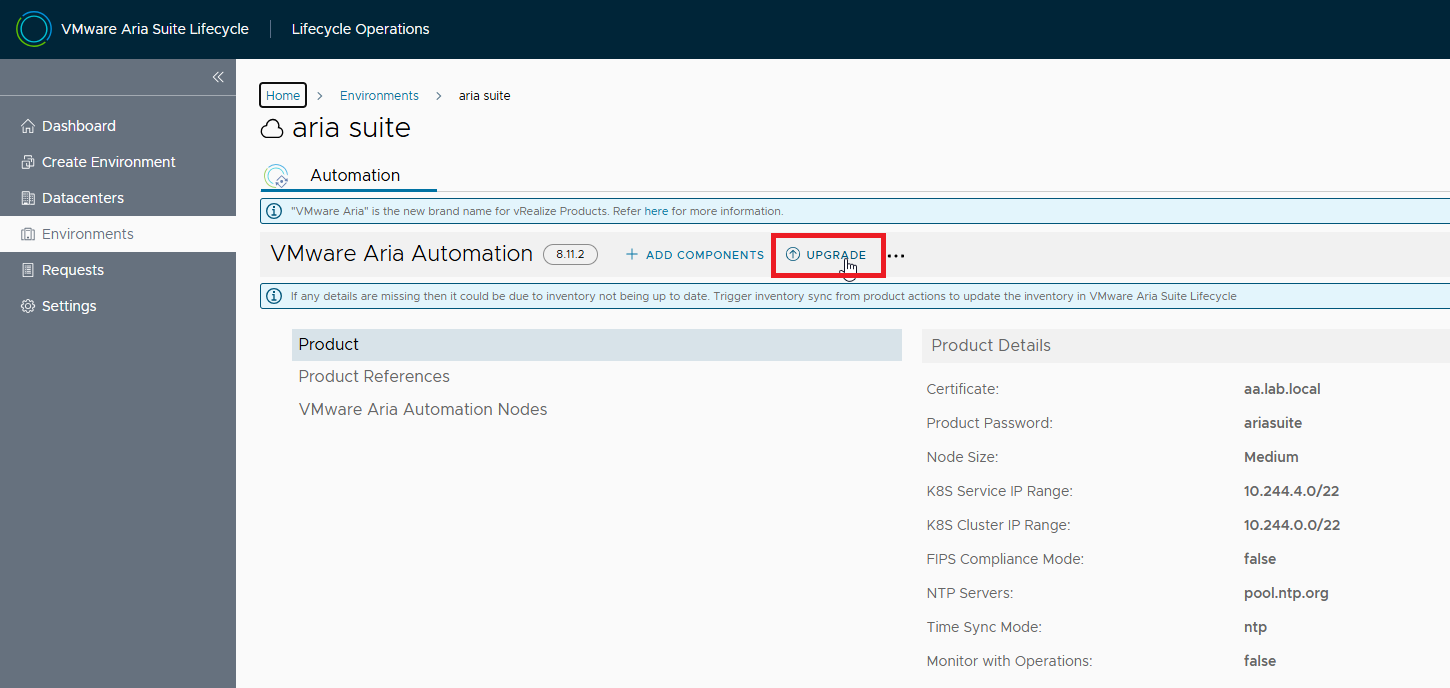

Let’s move on to the second step: download the ISO for the Automation upgrade to release 8.13.1.

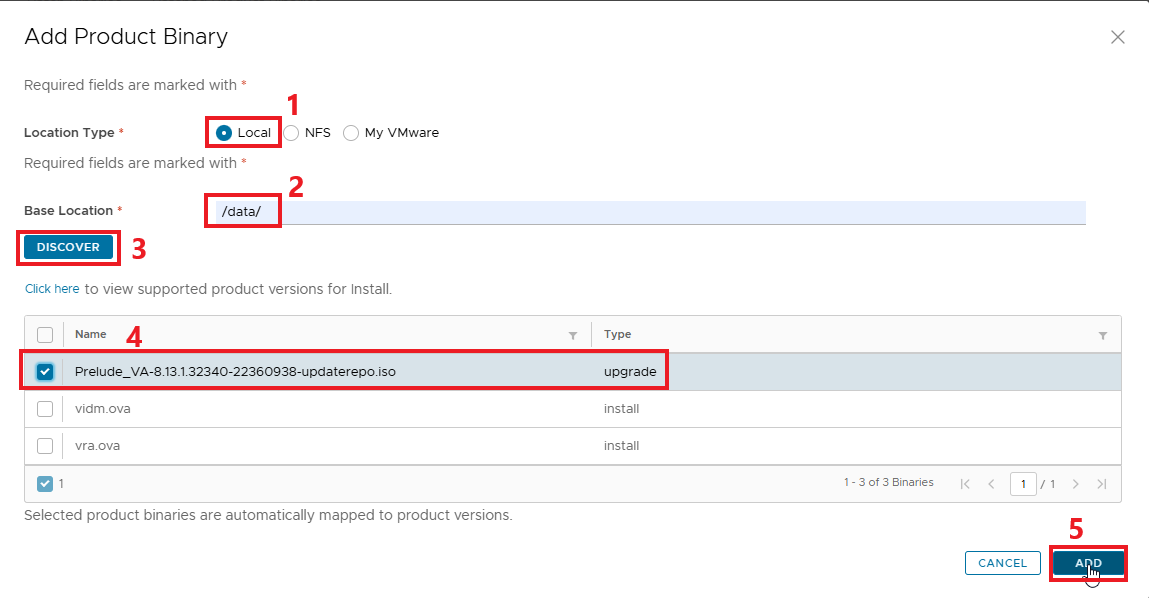

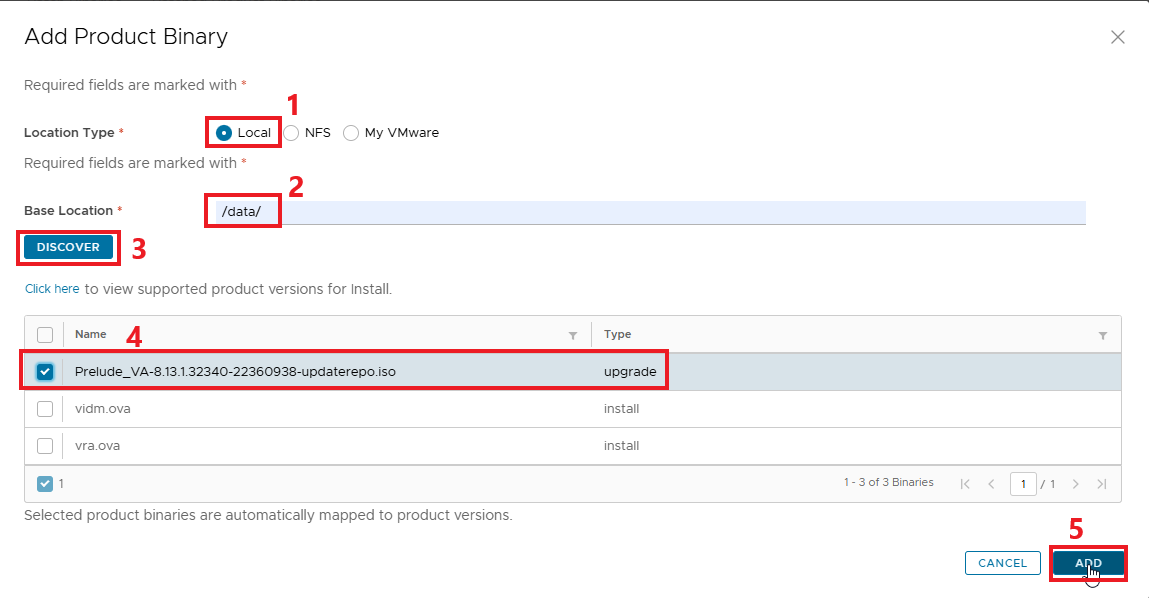

This time the ISO must be uploaded directly to LCM; you can transfer it via SCP to the path /data.
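As a rough illustration of the transfer, here is a small Python wrapper around the `scp` client. It assumes `scp` is installed on the workstation, that you log in to LCM as `root`, and that the appliance is reachable at a host name of your choosing (`lcm.example.local` in the usage note is a placeholder). The function names are mine; only the /data destination comes from the procedure above.

```python
import subprocess
from pathlib import PurePosixPath


def build_scp_command(iso_path, host, user="root", remote_dir="/data"):
    """Return the scp argument list that copies iso_path to remote_dir on host."""
    # PurePosixPath normalises the remote directory (e.g. "/data/" -> "/data").
    target = f"{user}@{host}:{PurePosixPath(remote_dir)}/"
    return ["scp", str(iso_path), target]


def upload_iso(iso_path, host, **kwargs):
    """Run scp and raise CalledProcessError if the copy fails."""
    subprocess.run(build_scp_command(iso_path, host, **kwargs), check=True)
```

Calling `upload_iso("Prelude_VA-8.13.1.32340-22360938-updaterepo.iso", "lcm.example.local")` would run the equivalent of a manual `scp` to `/data/` on the appliance and prompt for the password as usual.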

NOTE: If there is not enough free space, you will need to remove the vra.ova that EASY INSTALLER used for the installation. Besides removing the image from Binary Mapping, you must also physically delete it from /data via the CLI.
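To find out in advance whether the ISO will fit, a quick free-space check can help. This is a minimal sketch that assumes Python 3 is available wherever you run it (I have not verified which Python version ships on the appliance); `has_room_for` is a hypothetical helper and the 1 GiB headroom is an arbitrary safety margin. It only reports free space: removing vra.ova remains a manual step.

```python
import shutil


def has_room_for(iso_size_bytes, path="/data", headroom_bytes=1 * 1024**3):
    """Return True if the filesystem holding path can fit the ISO plus headroom."""
    free = shutil.disk_usage(path).free
    return free >= iso_size_bytes + headroom_bytes
```

Pass the size of the downloaded ISO in bytes (for example from `os.path.getsize` on your workstation) and run the check against `/data` before starting the copy.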

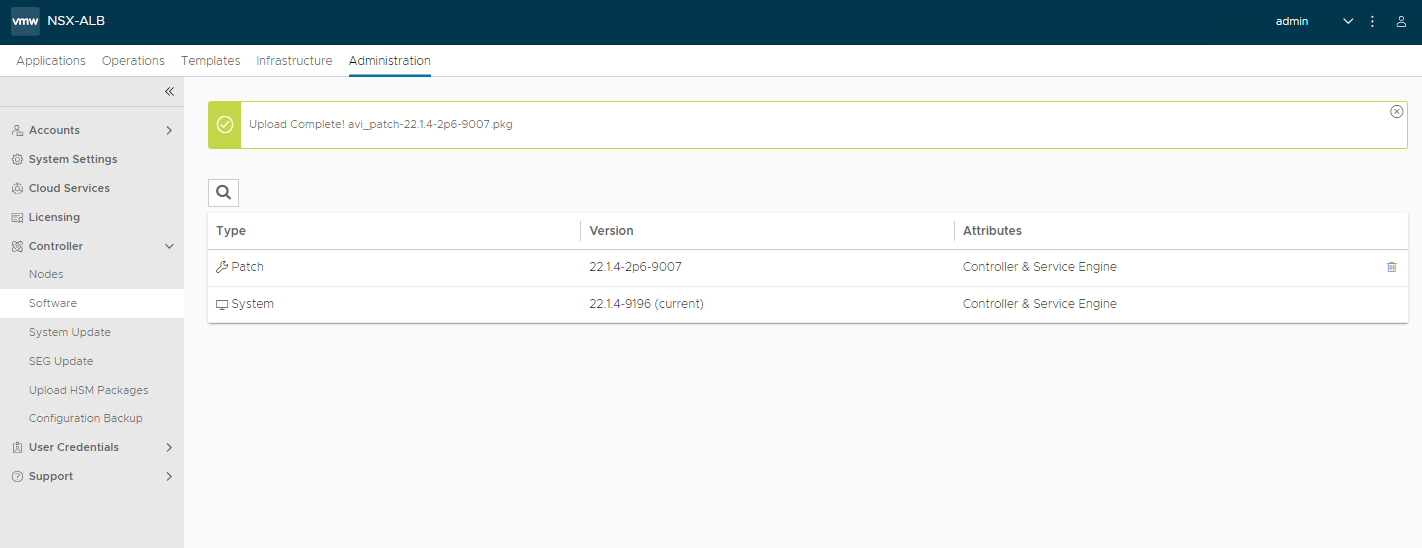

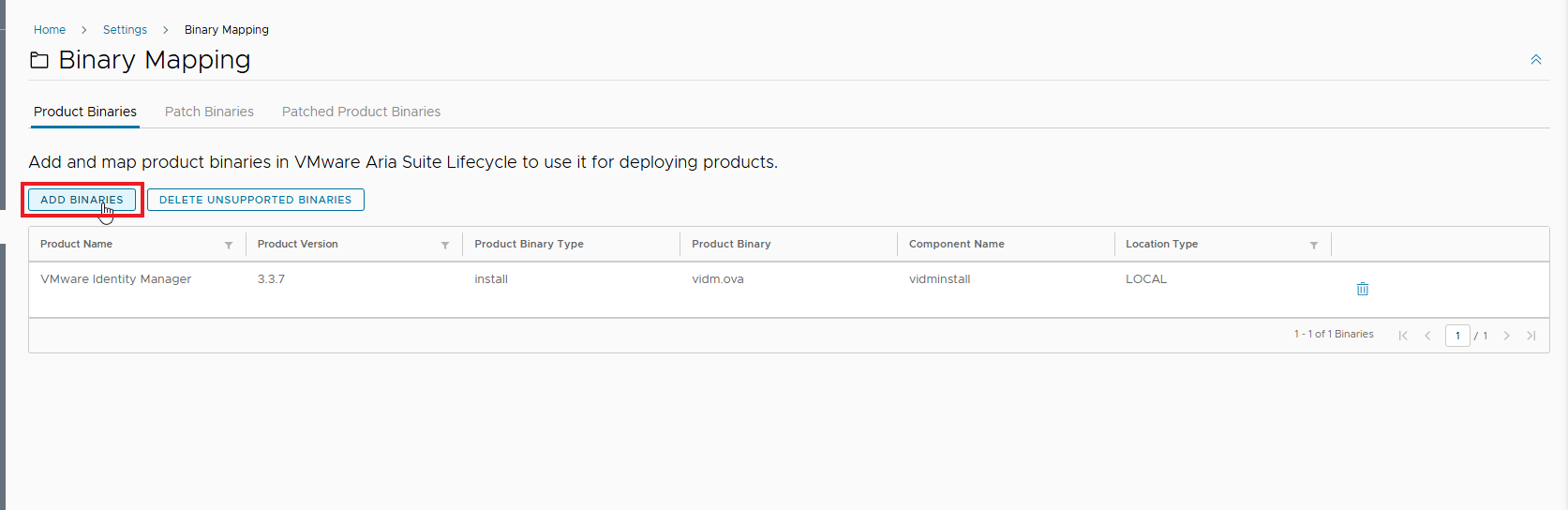

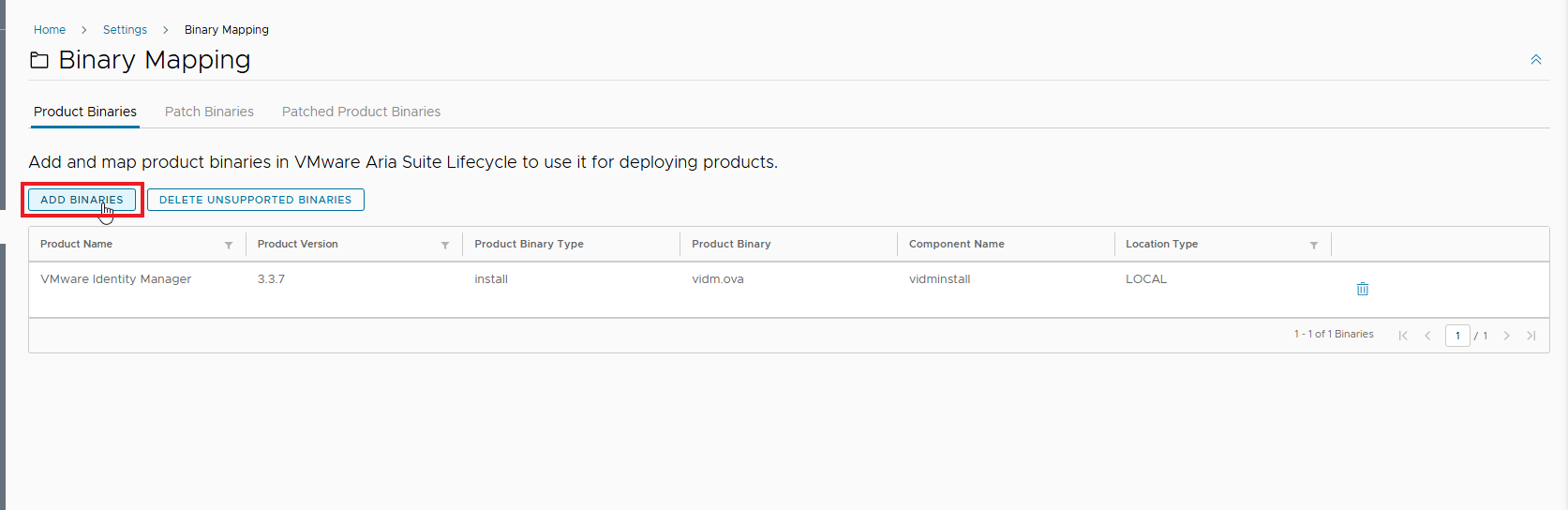

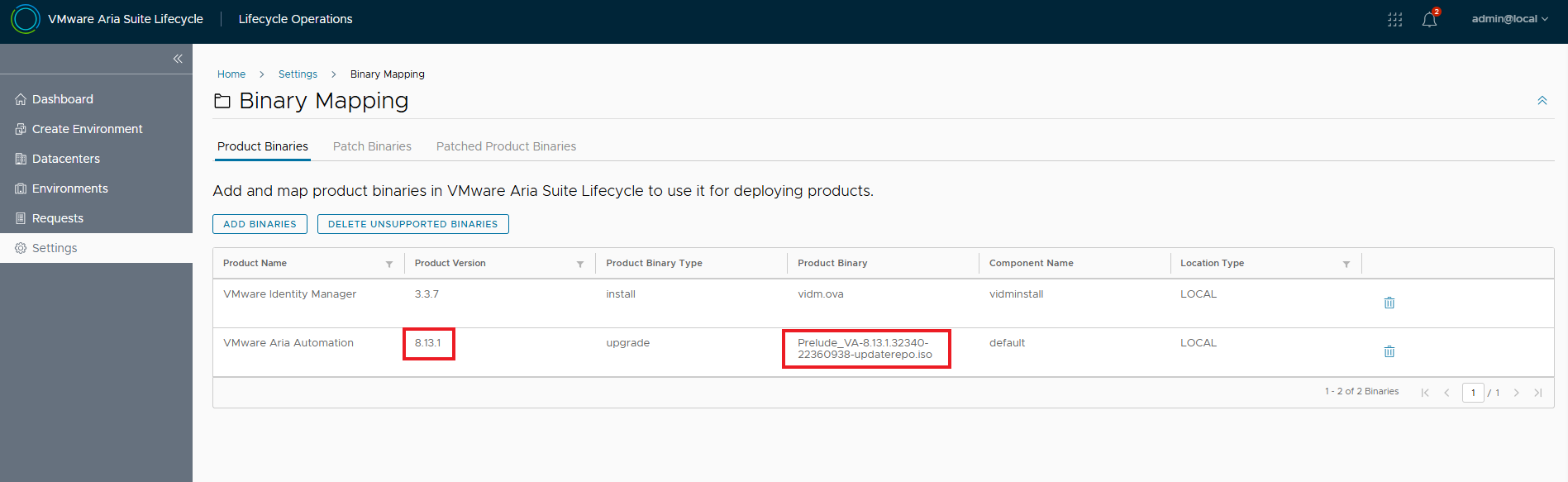

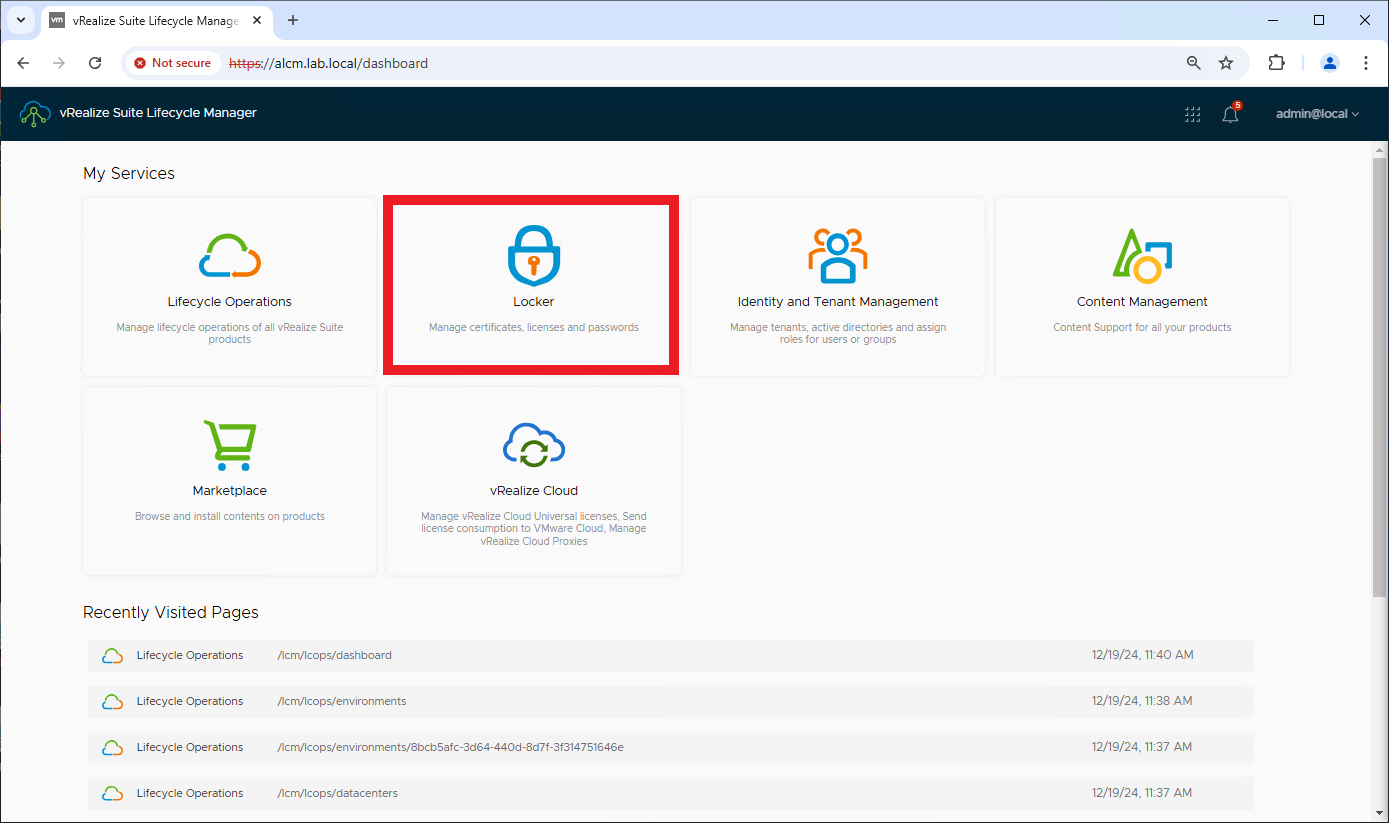

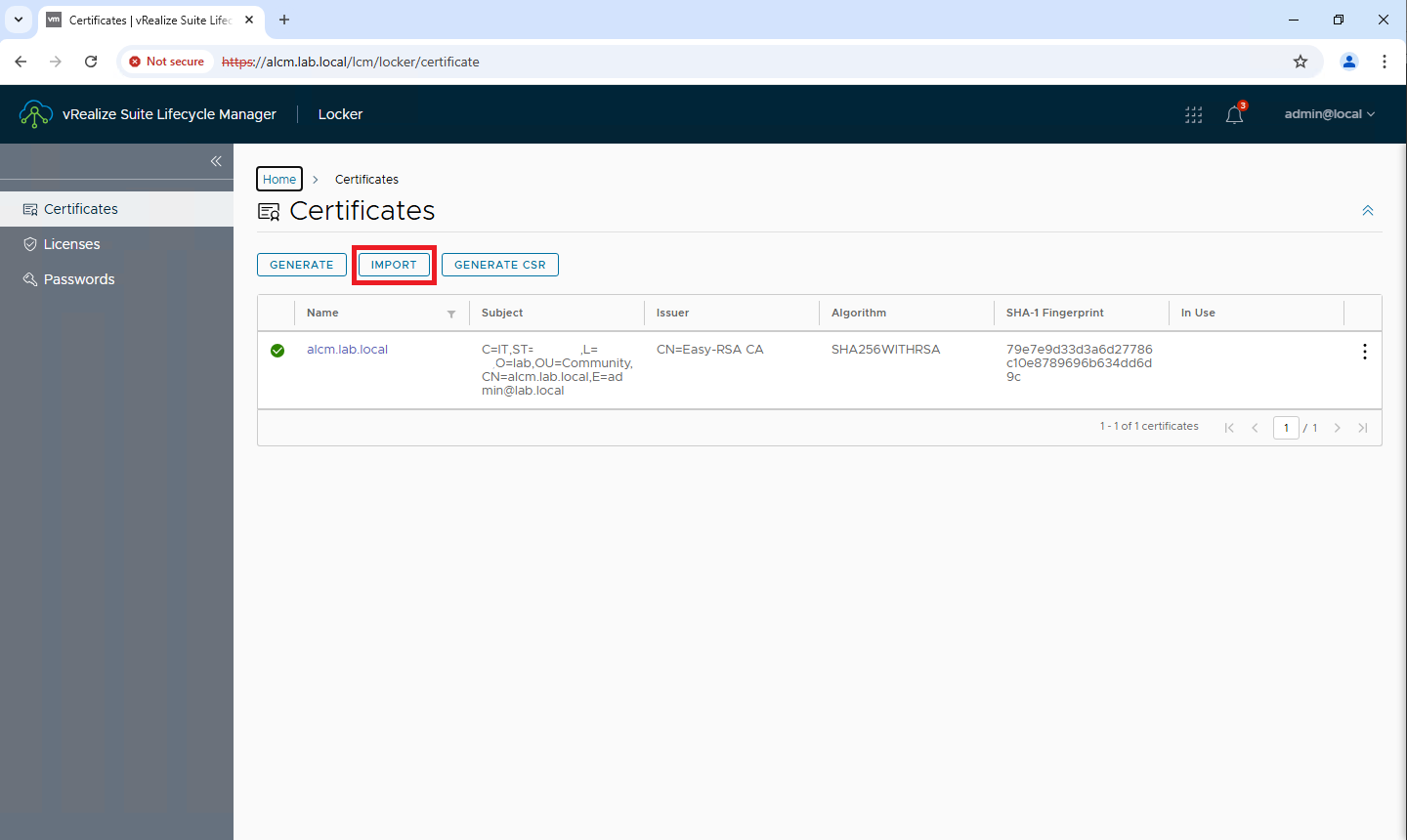

Map the ISO from Binary Mapping.

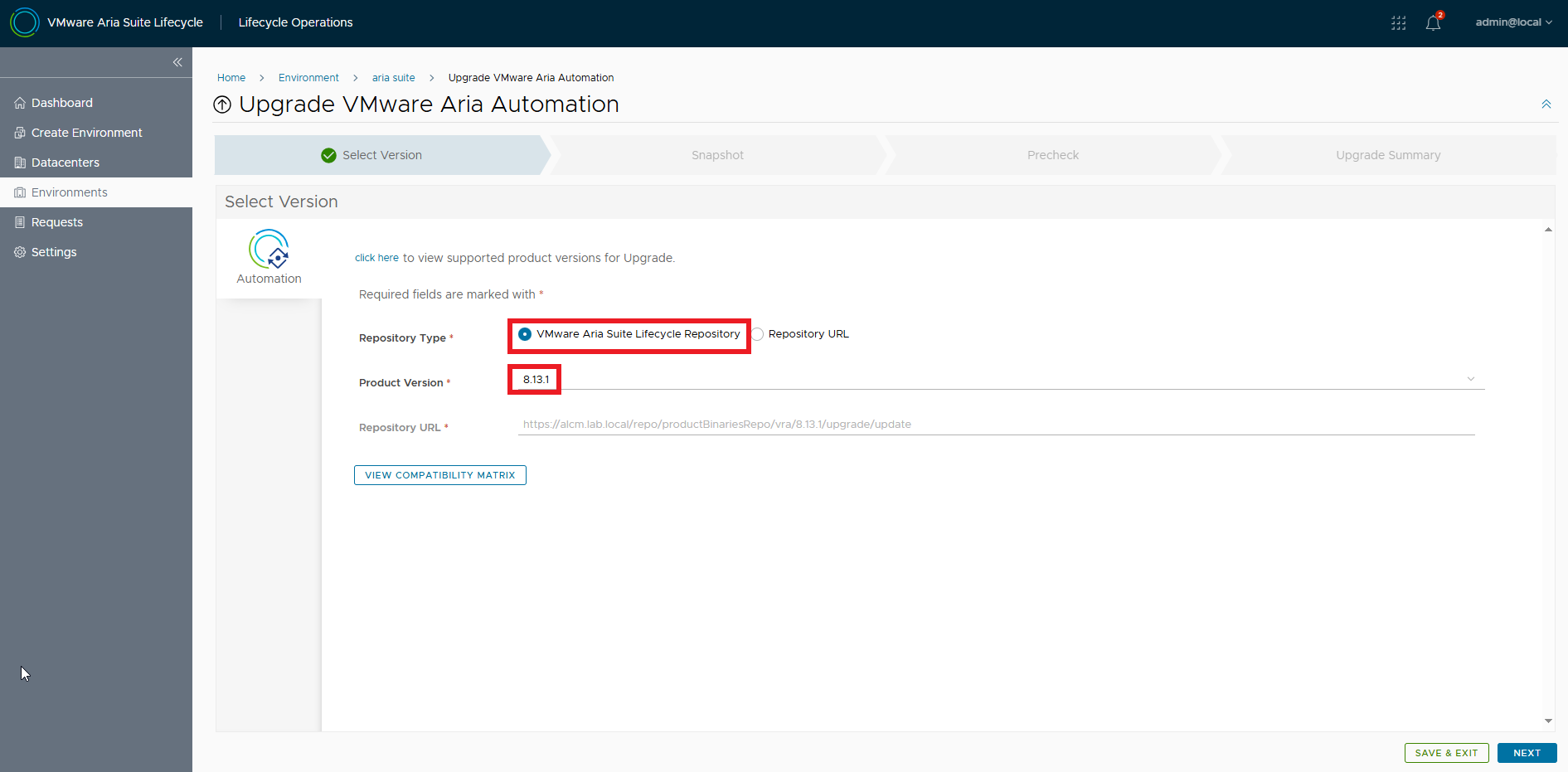

The new image is available for upgrade!

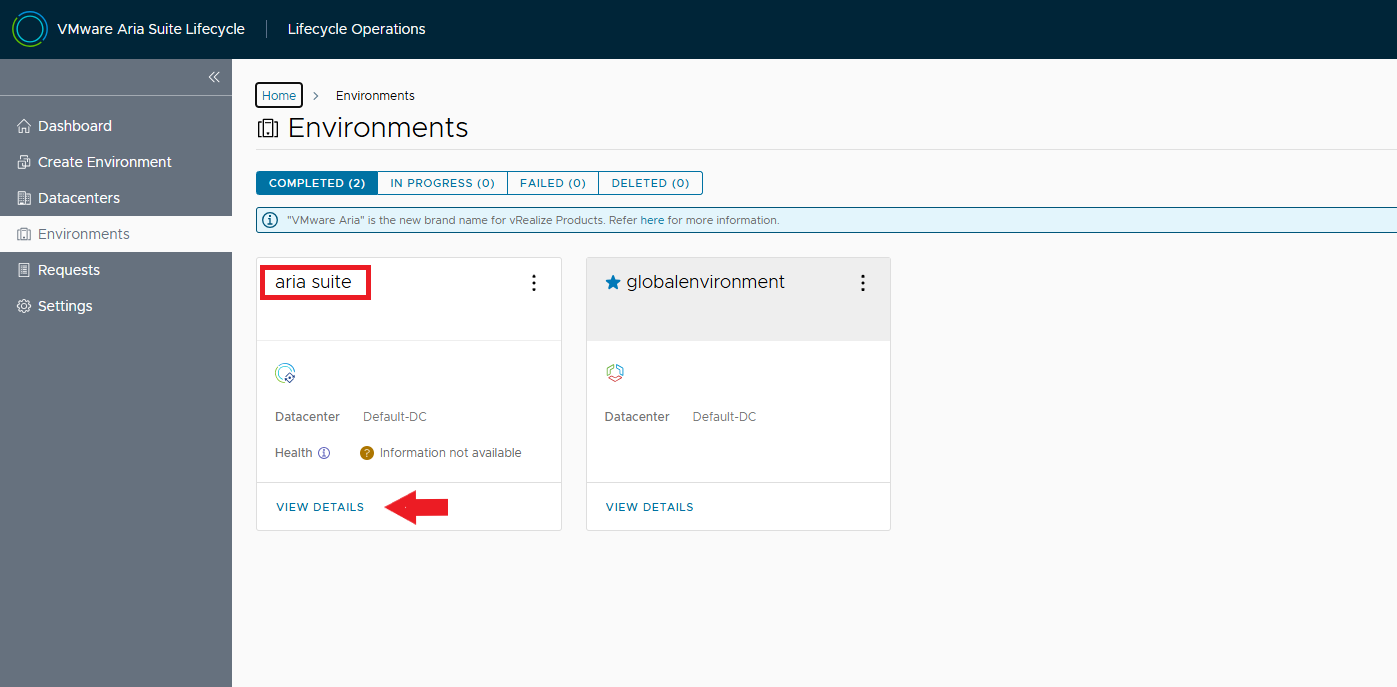

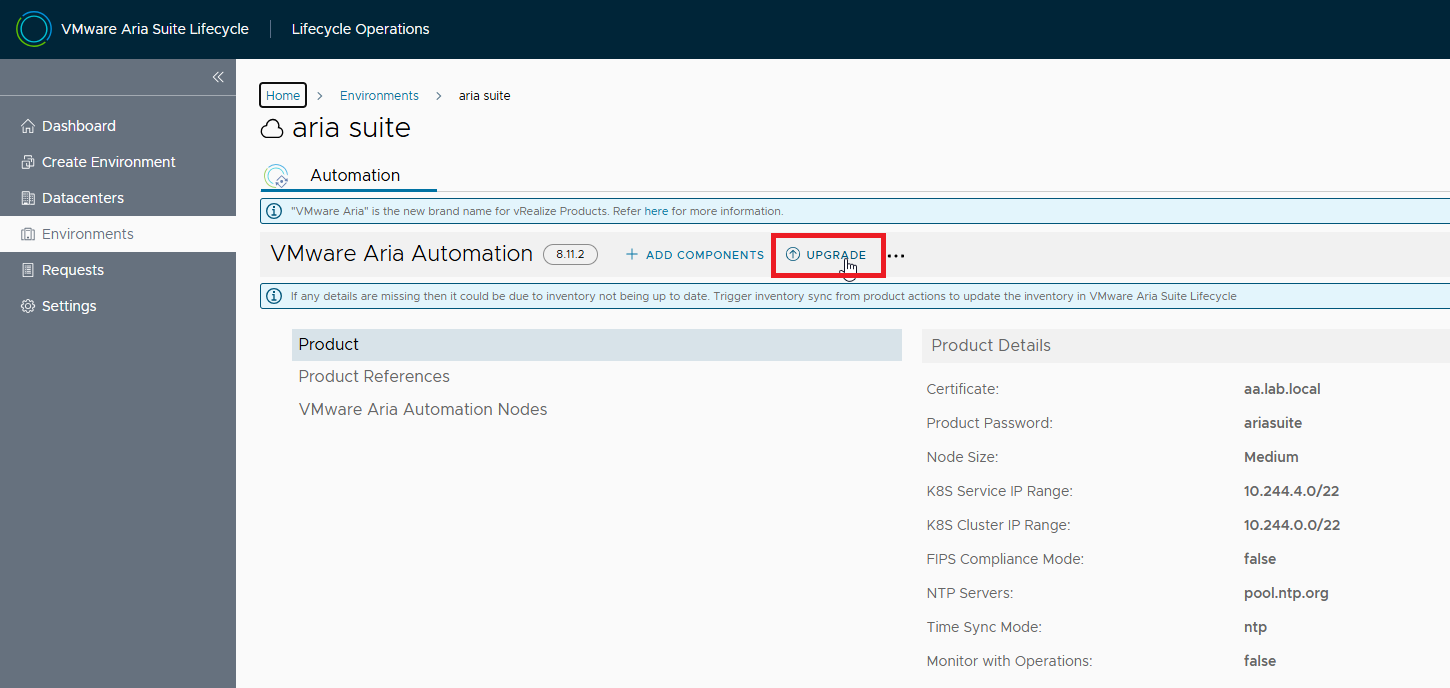

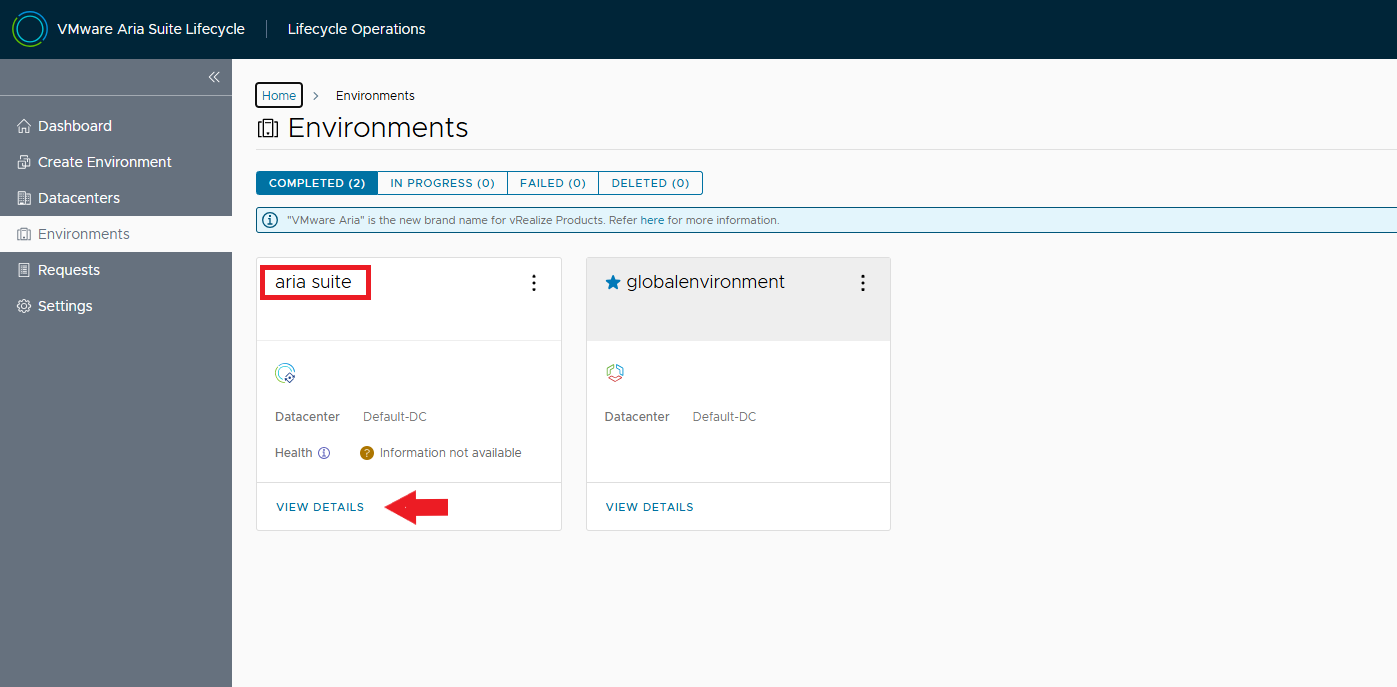

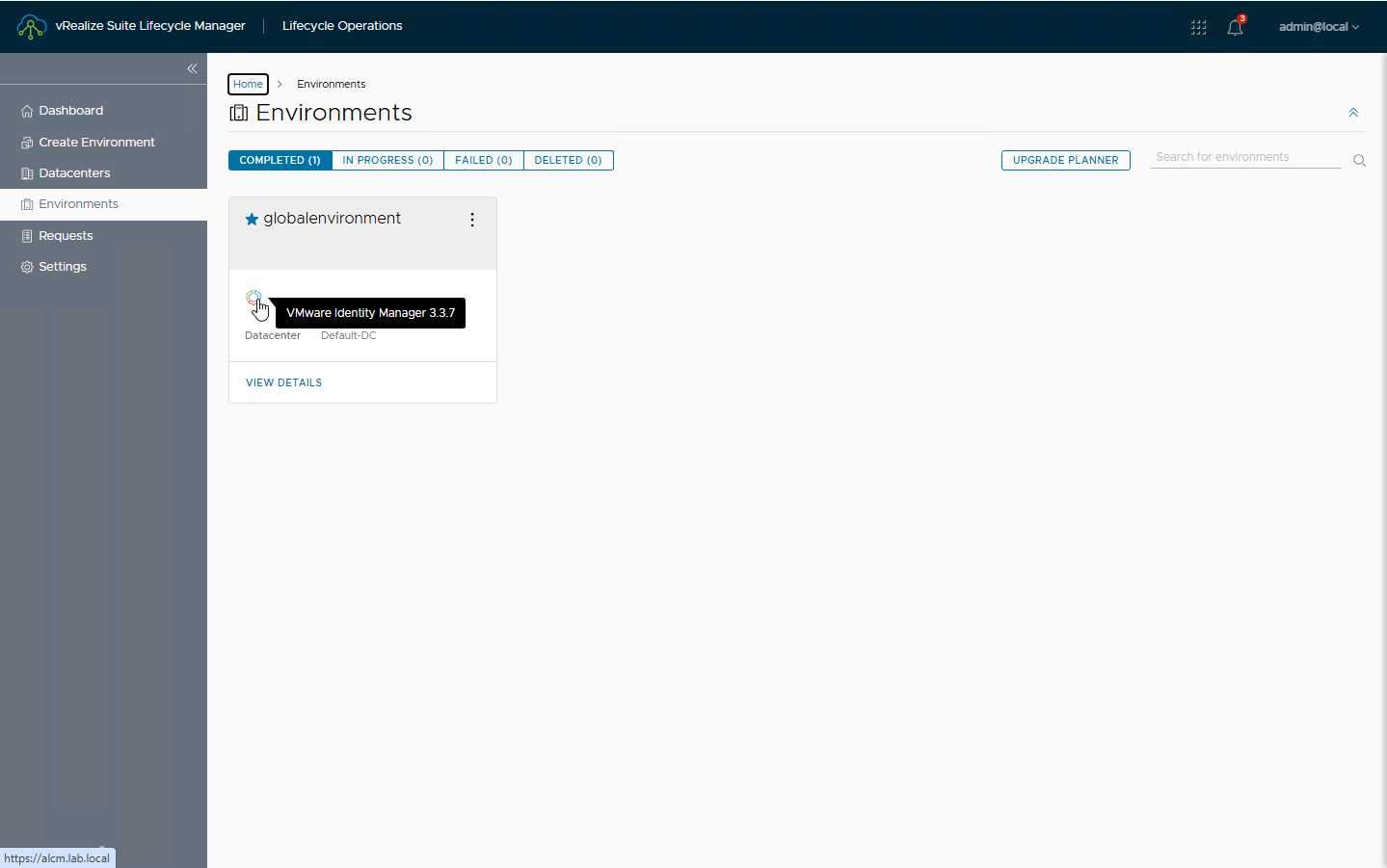

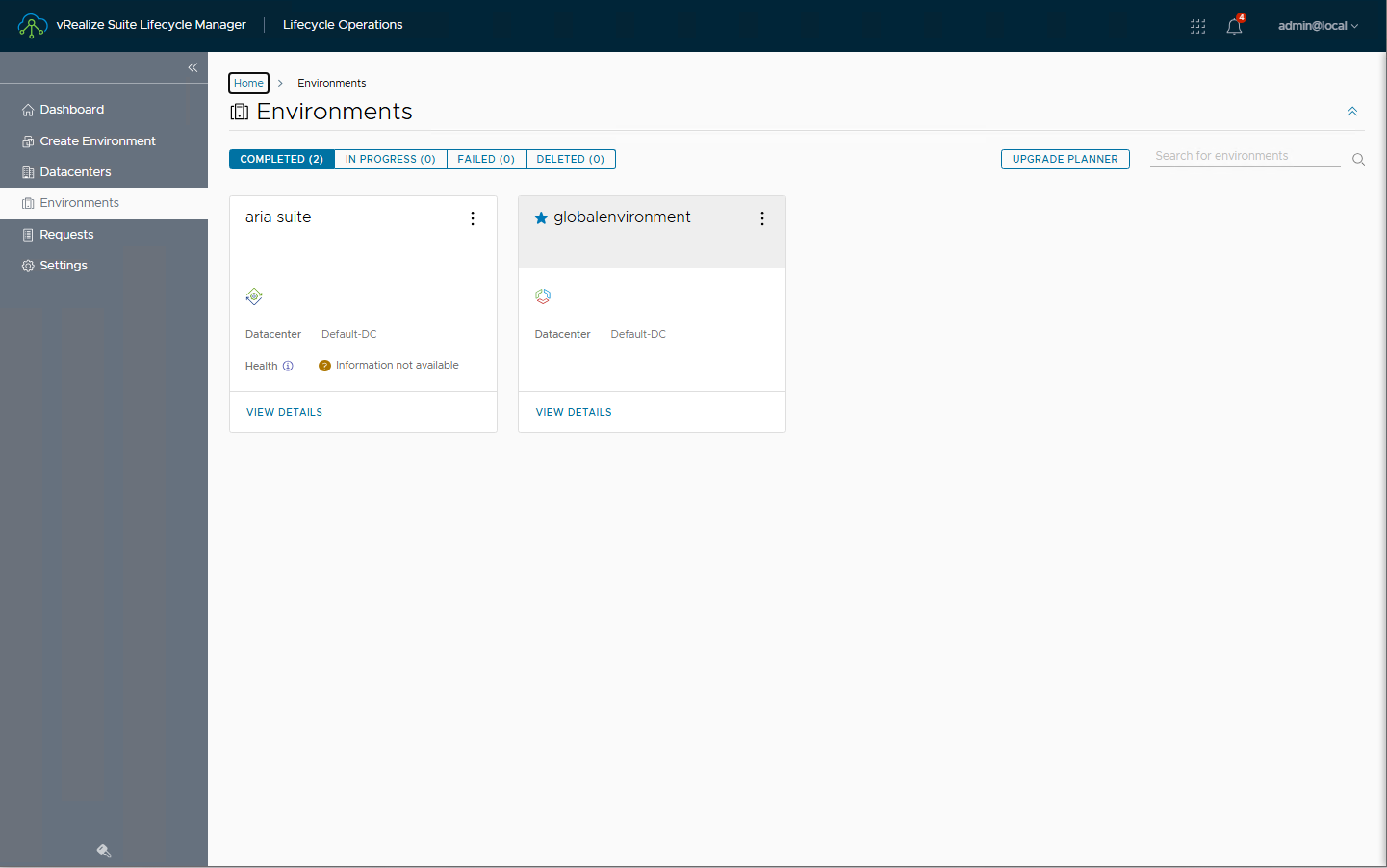

Select the environment that hosts Aria Automation and click VIEW DETAILS.

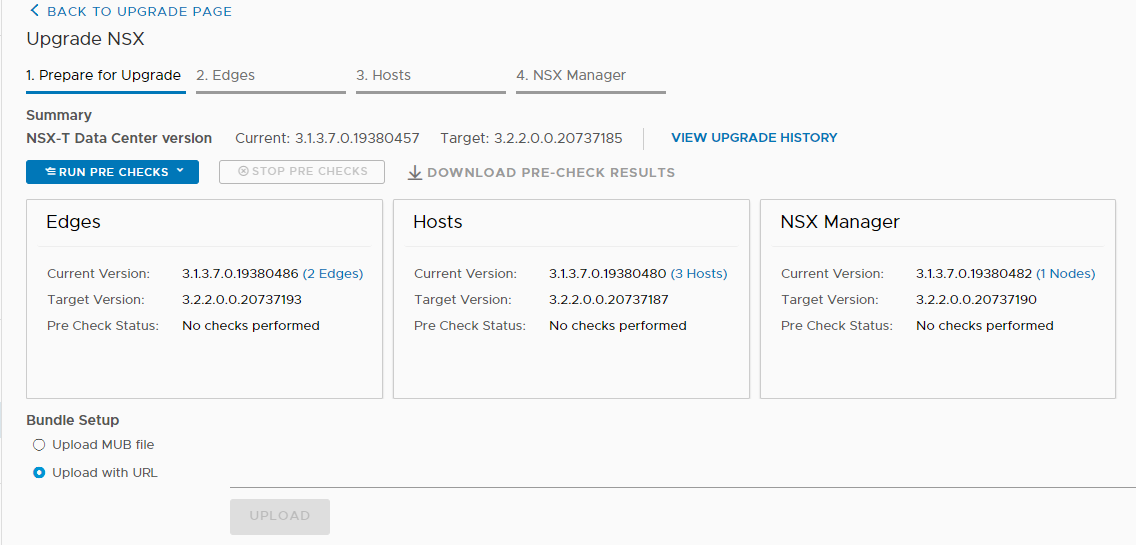

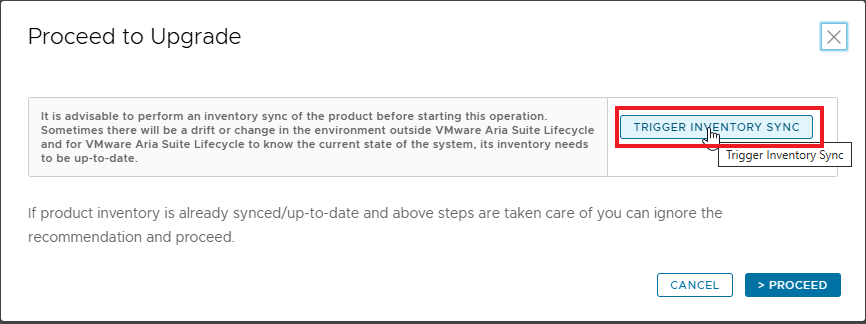

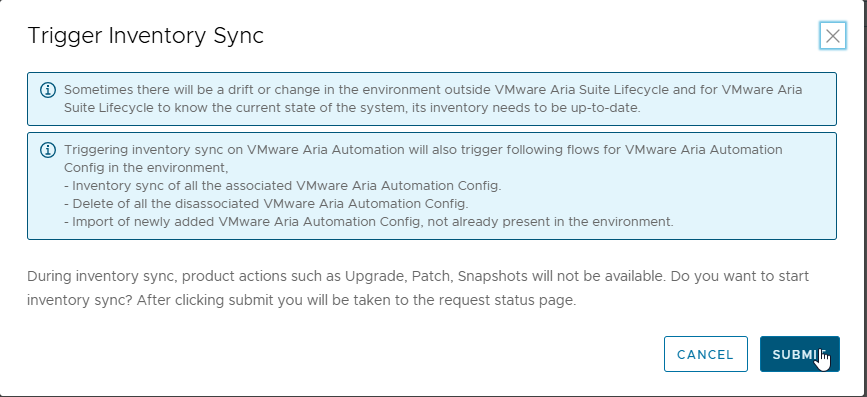

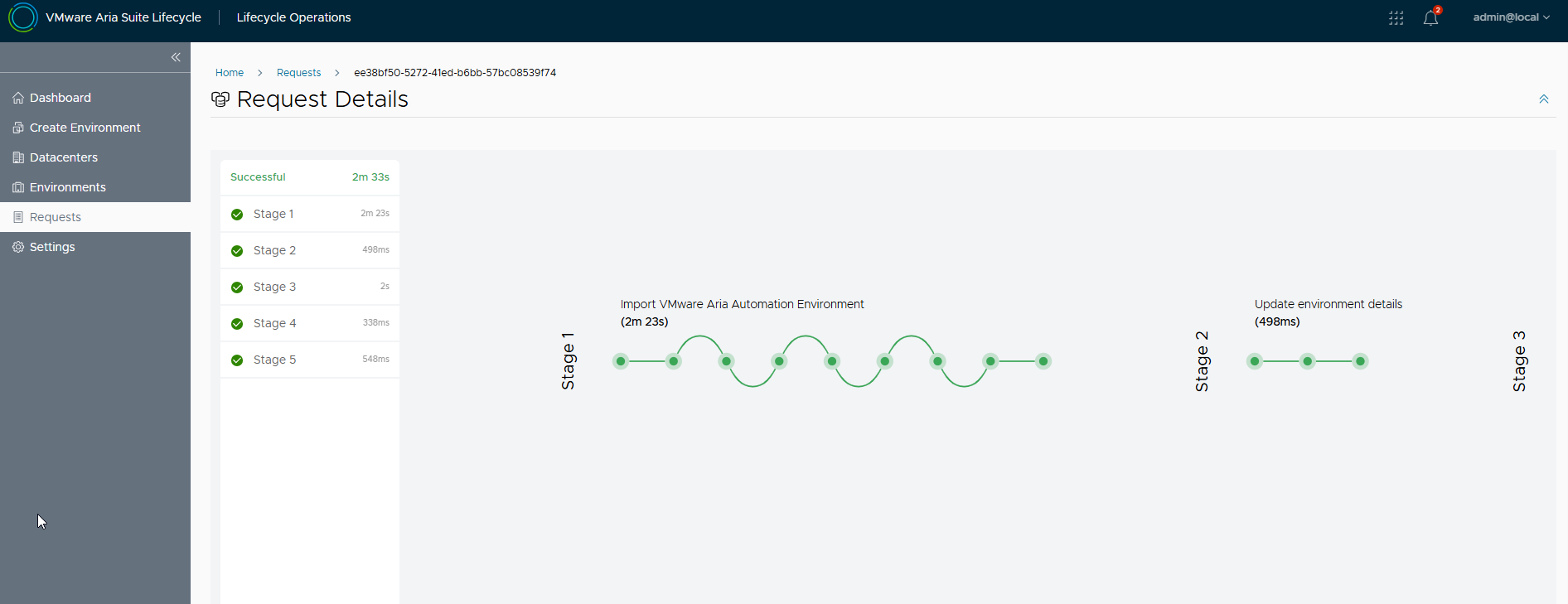

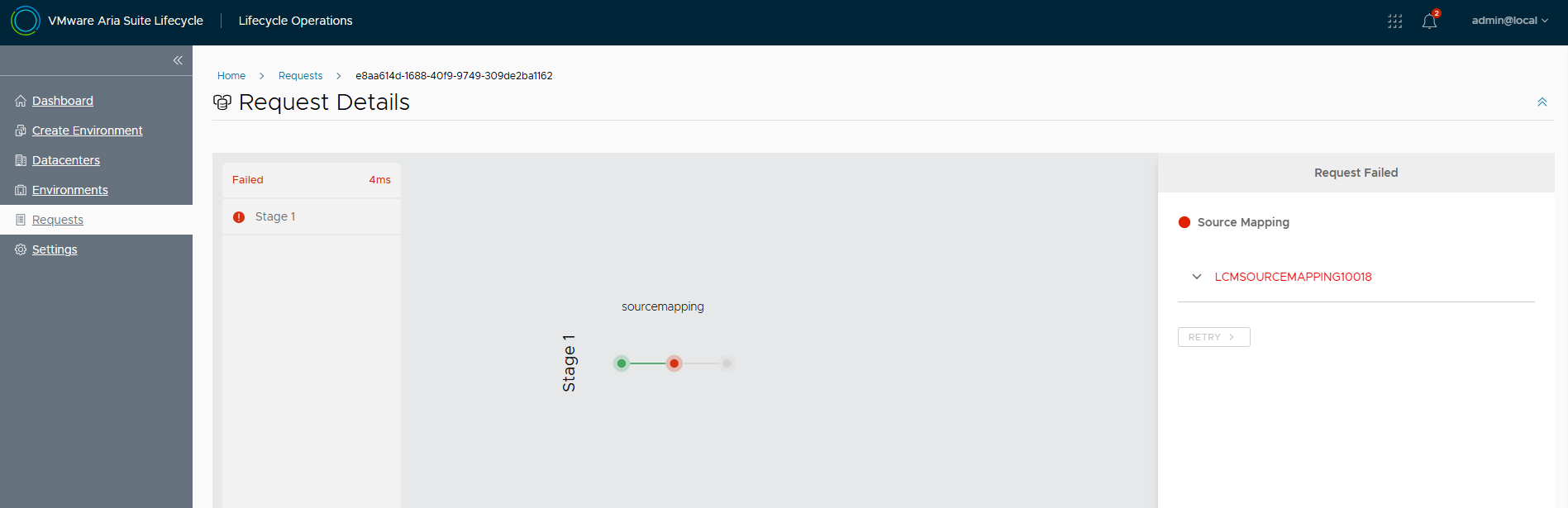

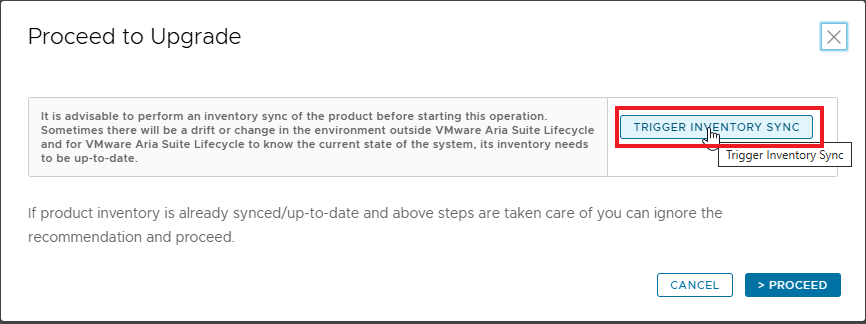

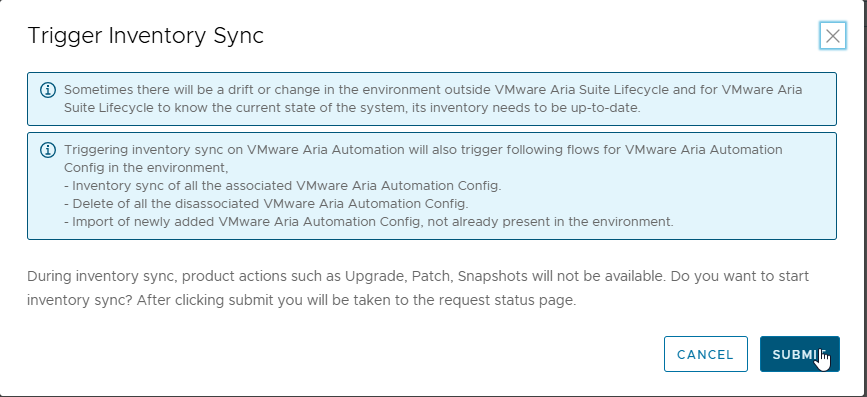

Before proceeding with the upgrade, run TRIGGER INVENTORY SYNC and verify that the job completes successfully.

Back in the upgrade procedure, the new release is now available.

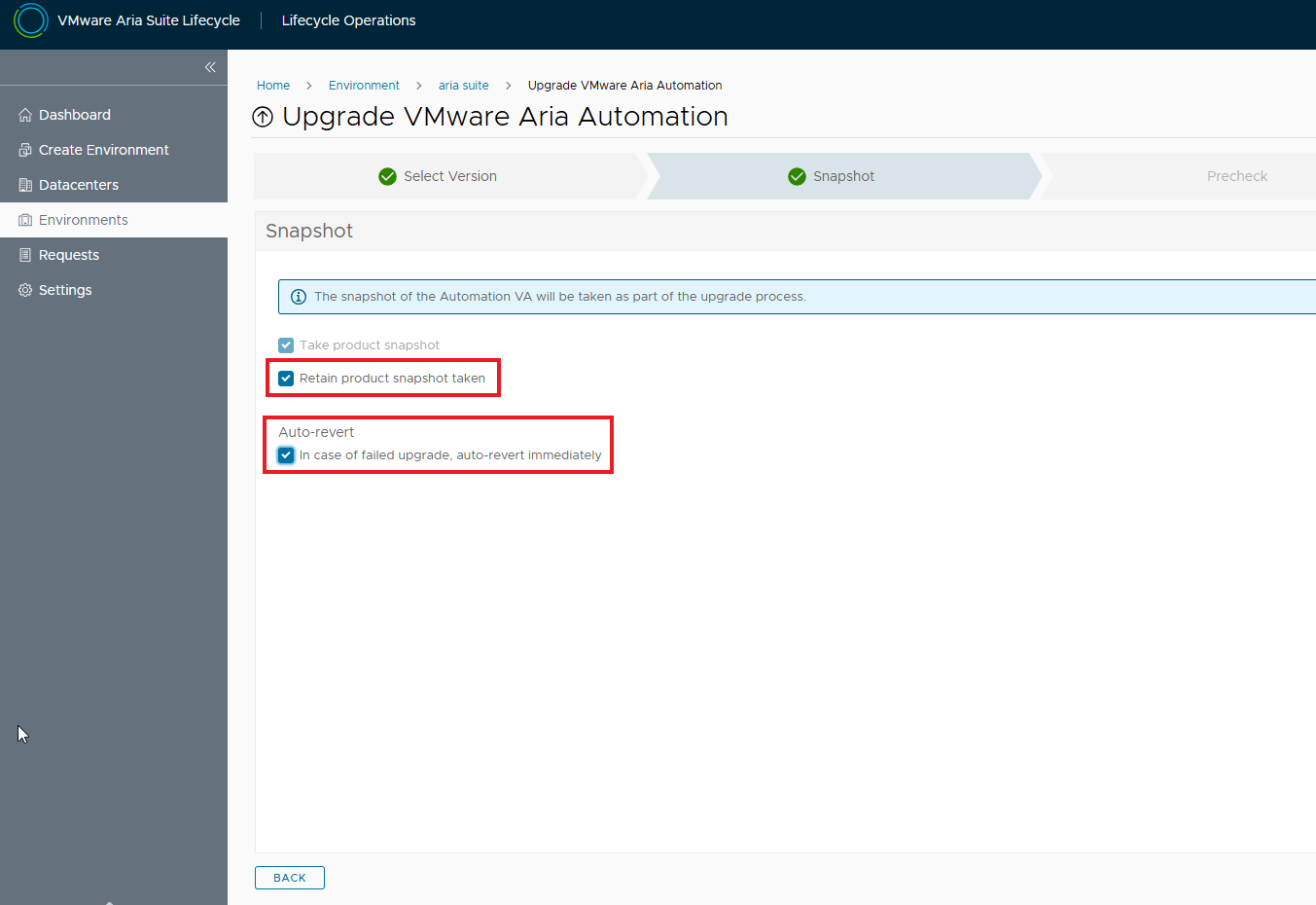

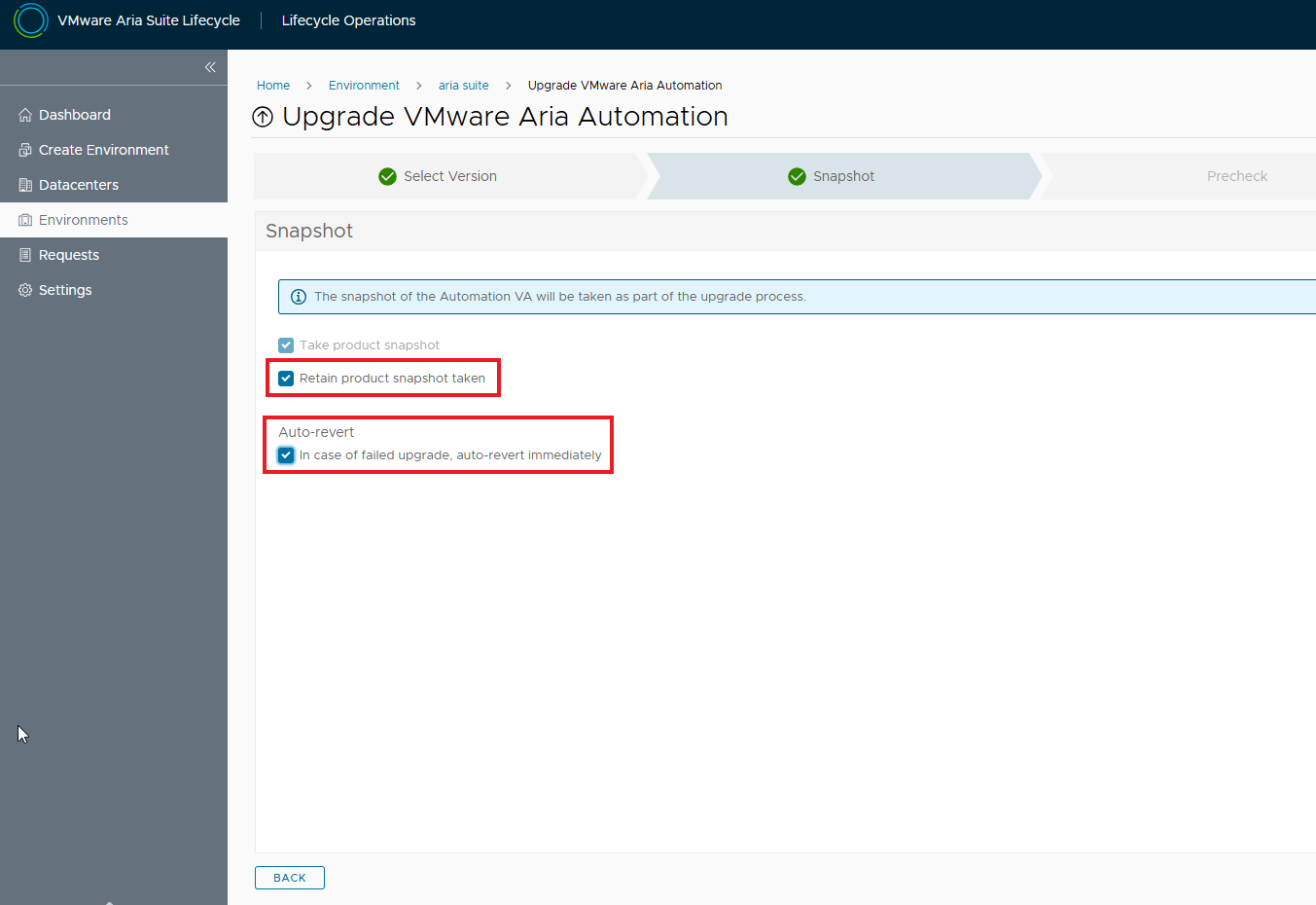

Enable the snapshot and rollback flags, so that the upgrade can be rolled back if problems occur.

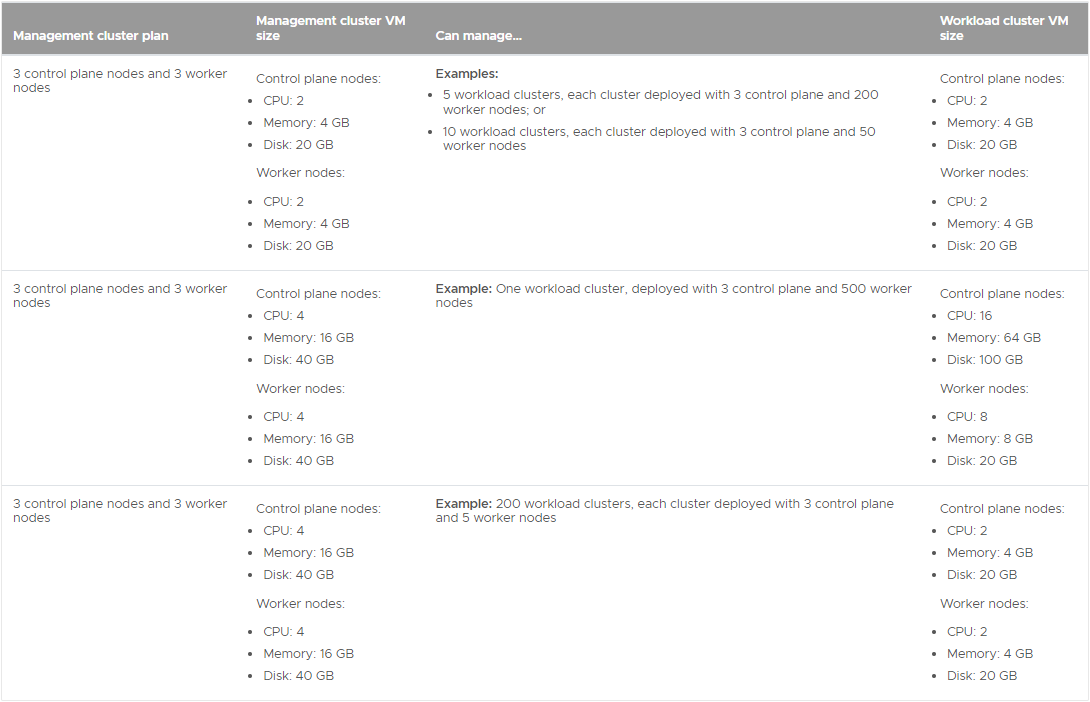

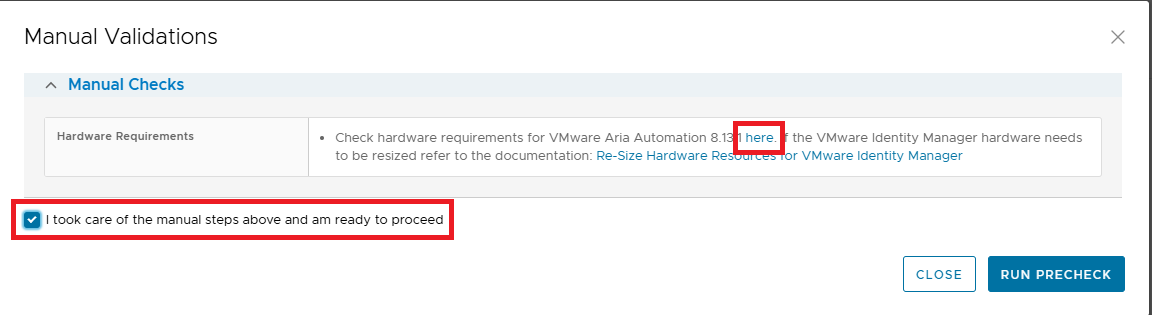

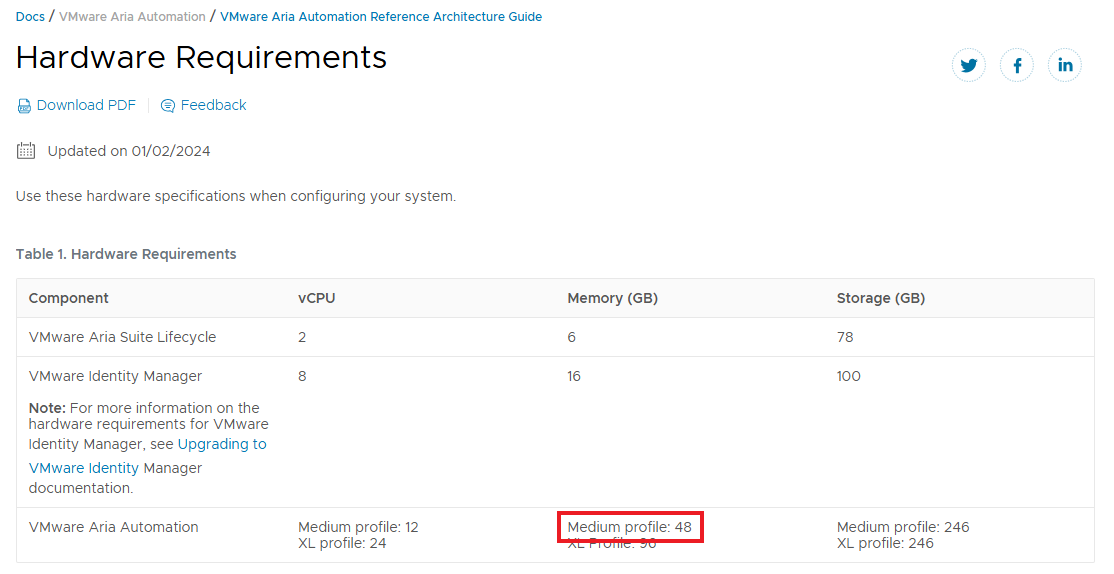

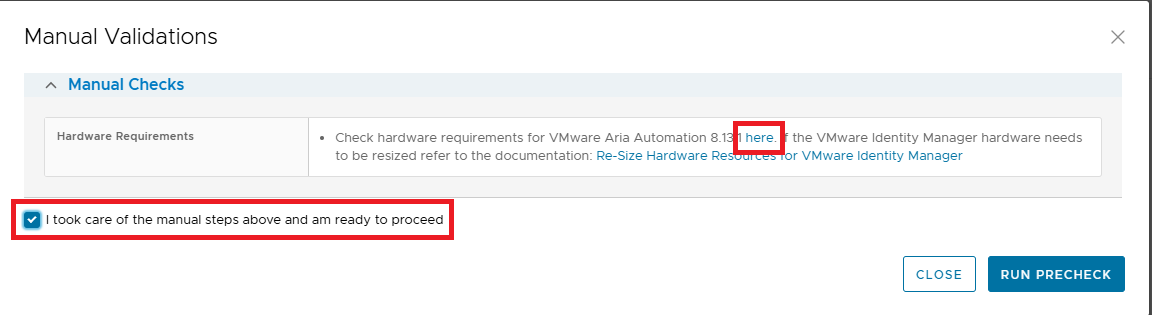

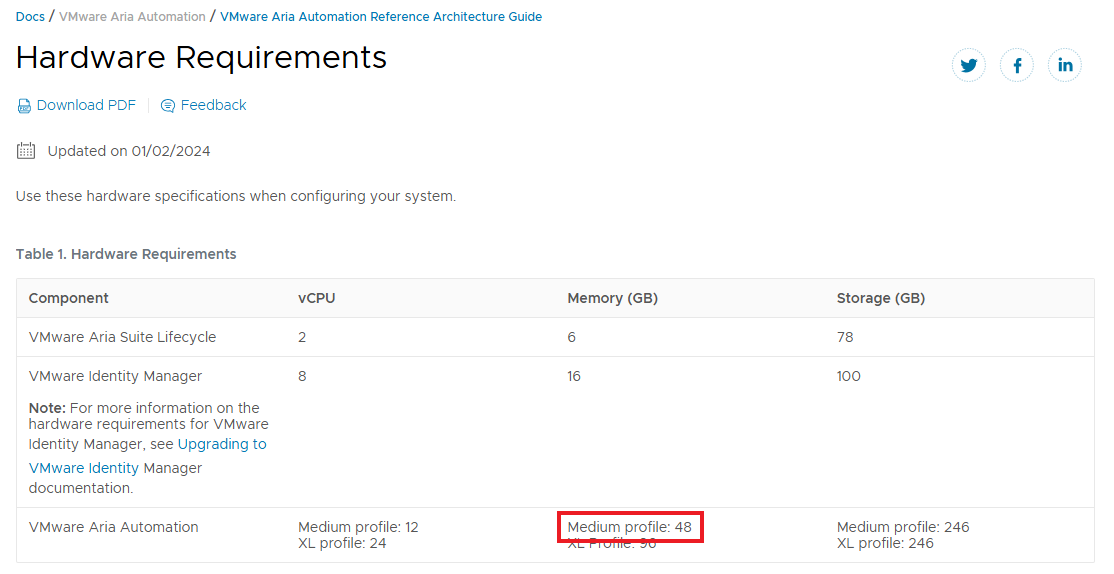

Let’s check the hardware requirements for the new release by following the highlighted link.

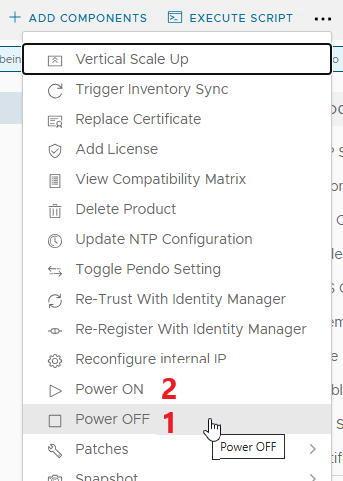

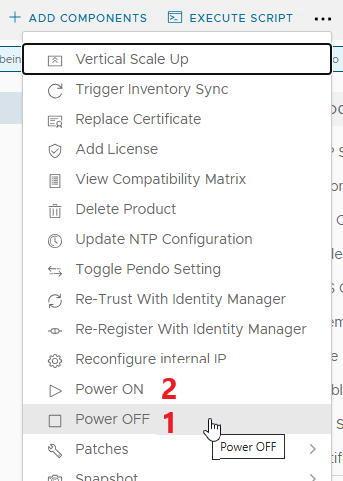

The required RAM is 48GB. My current deployment is MEDIUM, so the RAM must be increased to meet the requirement. To do this, use the day 2 operations from the environment: first POWER OFF, then change the RAM from the vSphere Client, and finally POWER ON. Wait for the services to come back up (it takes a long time!)

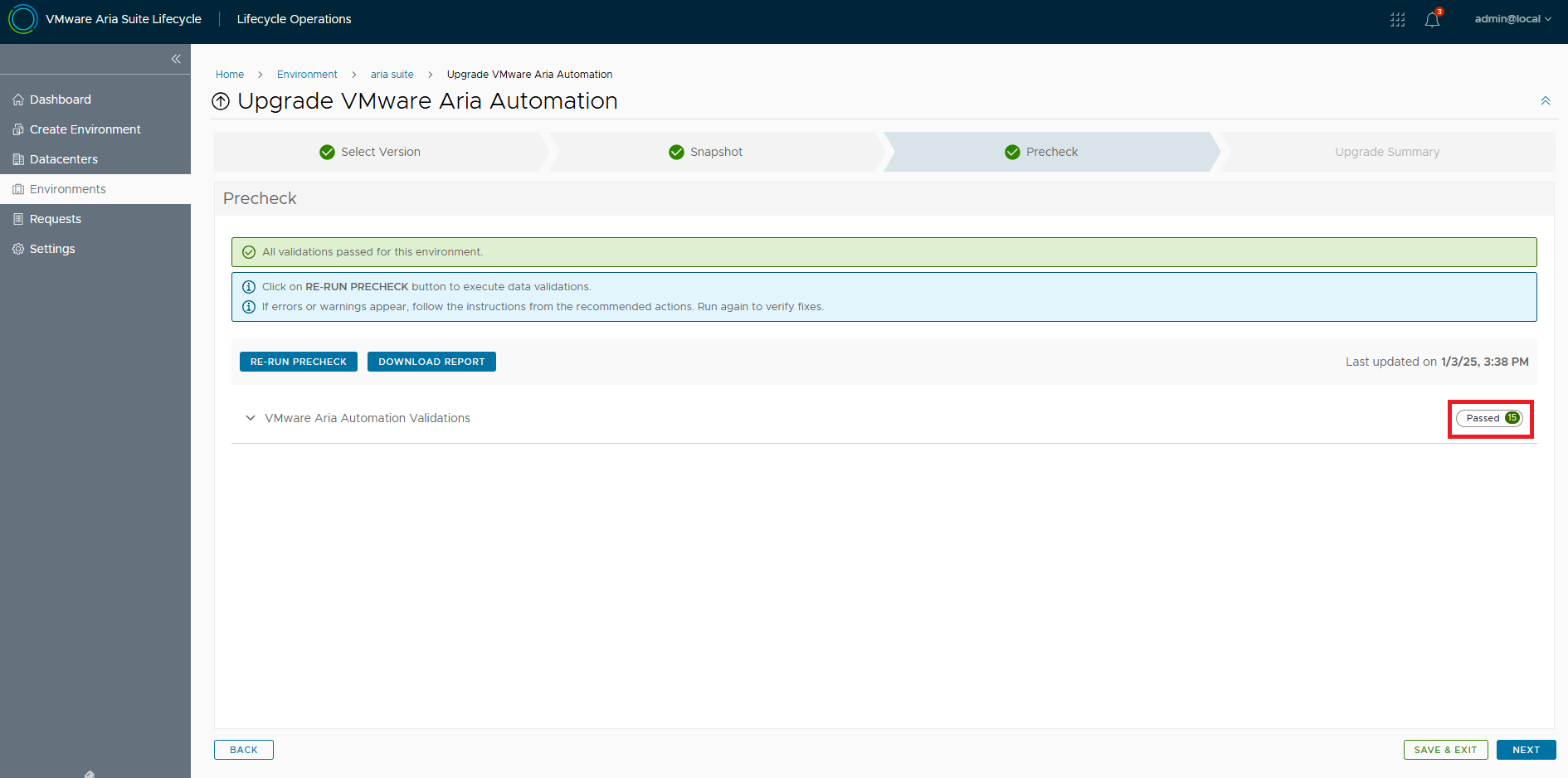

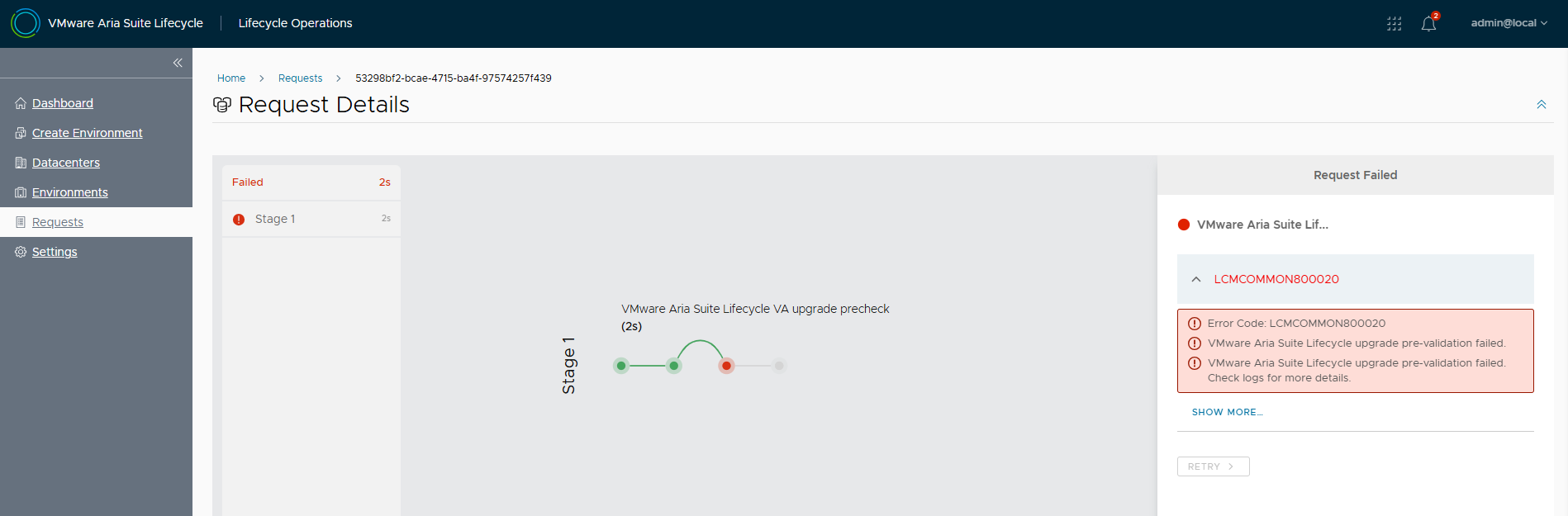

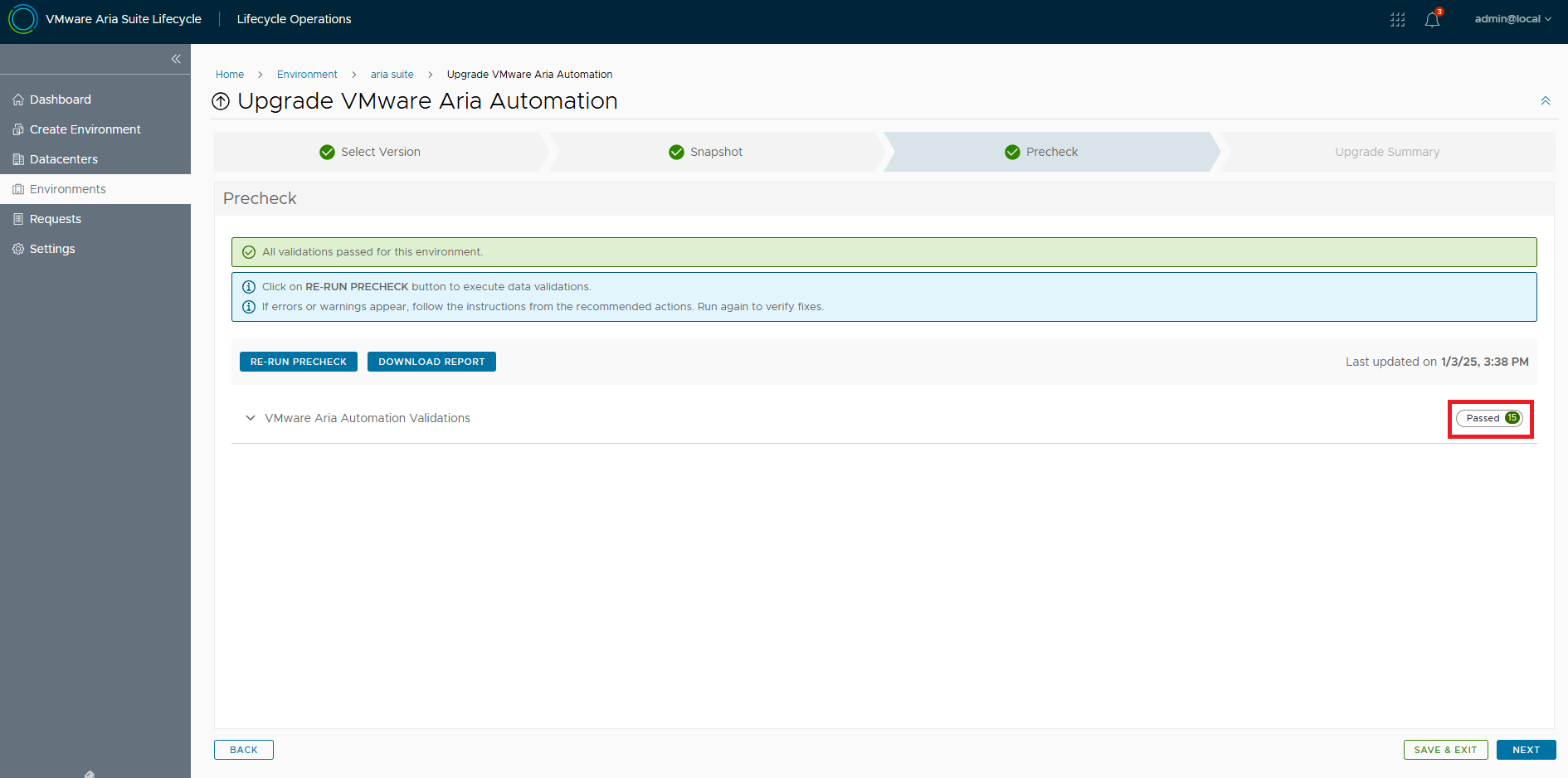

Let’s resume the upgrade by running the pre-checks.

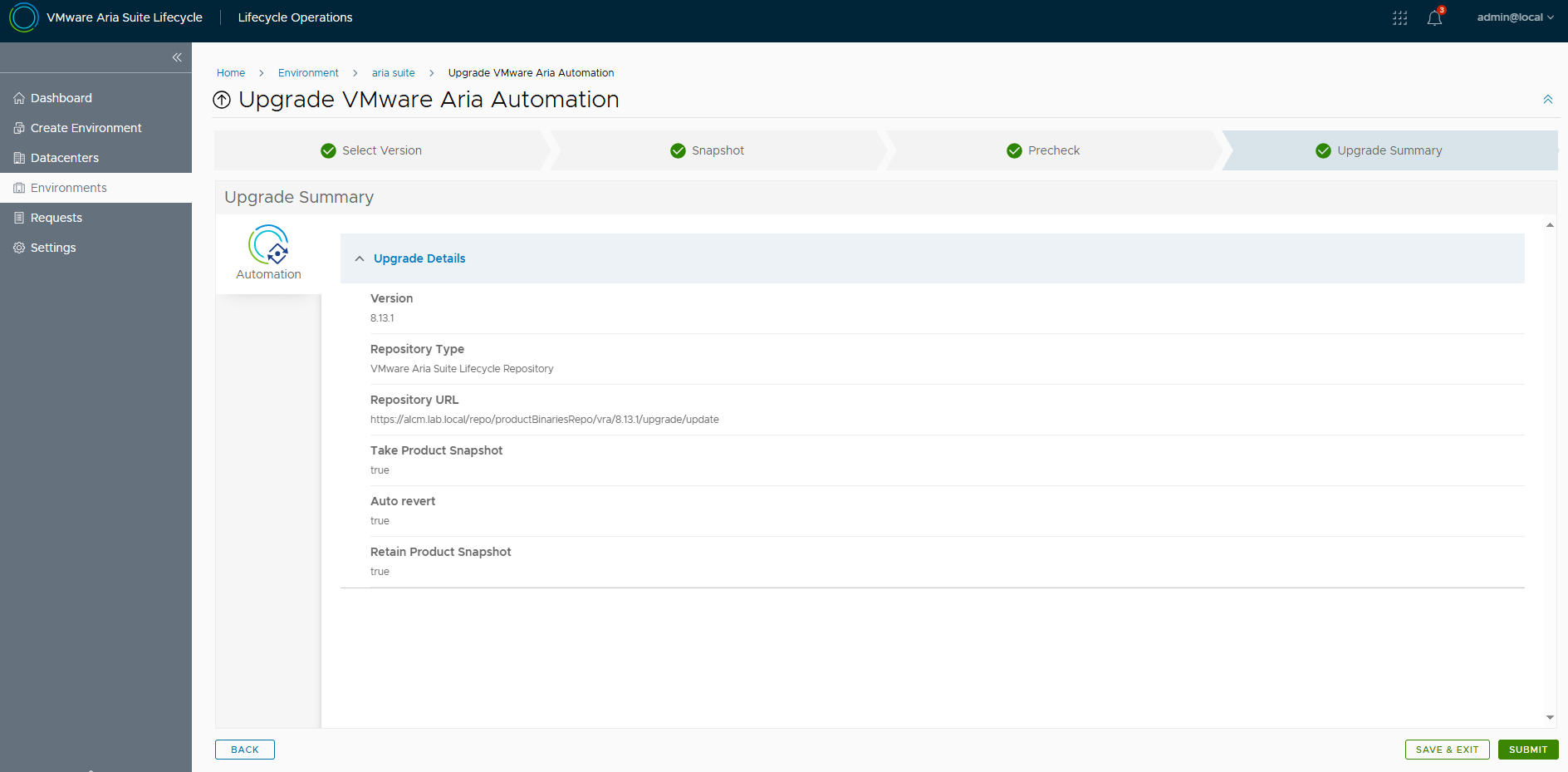

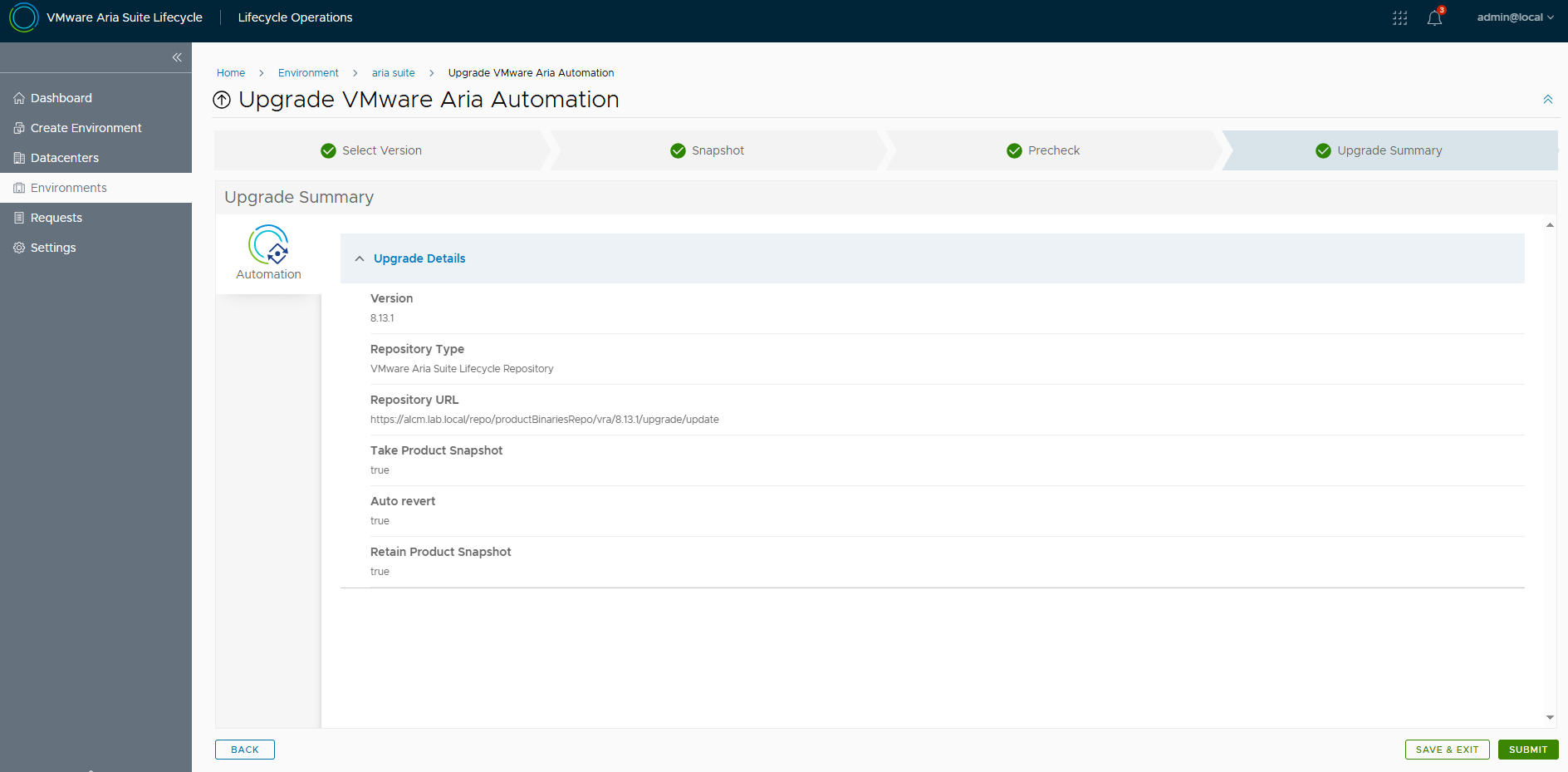

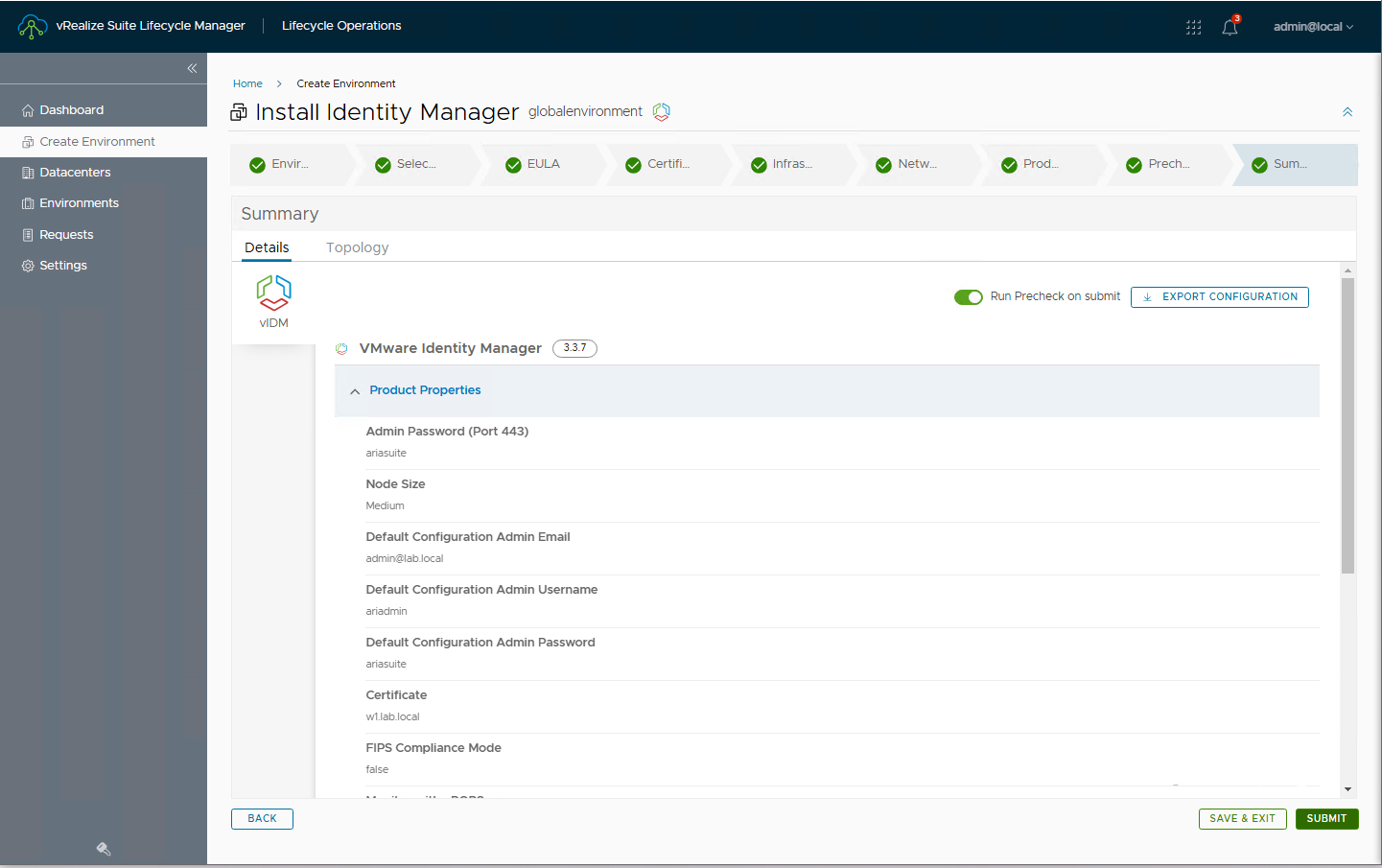

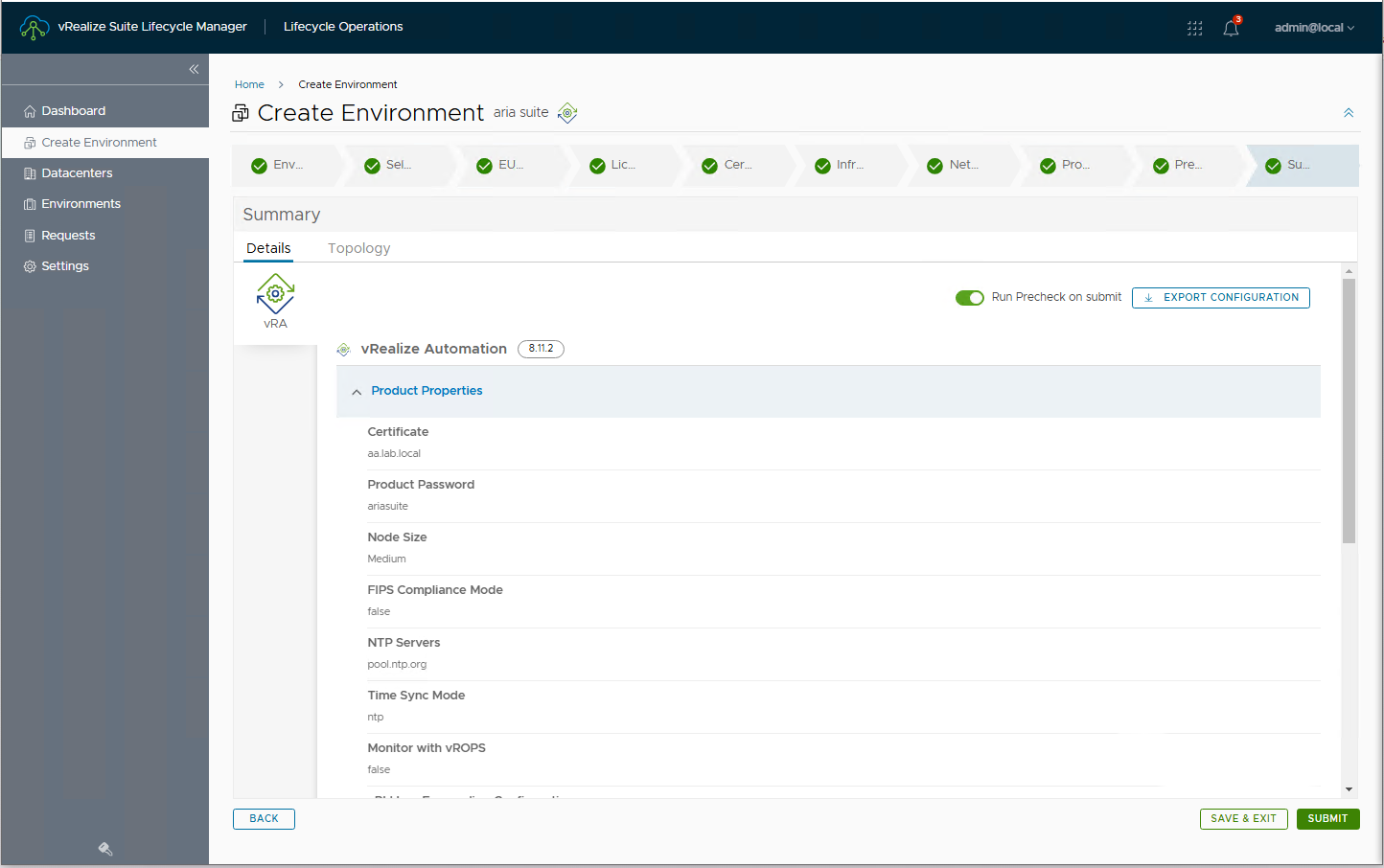

Let’s go to the Upgrade Summary and click Submit to start the upgrade.

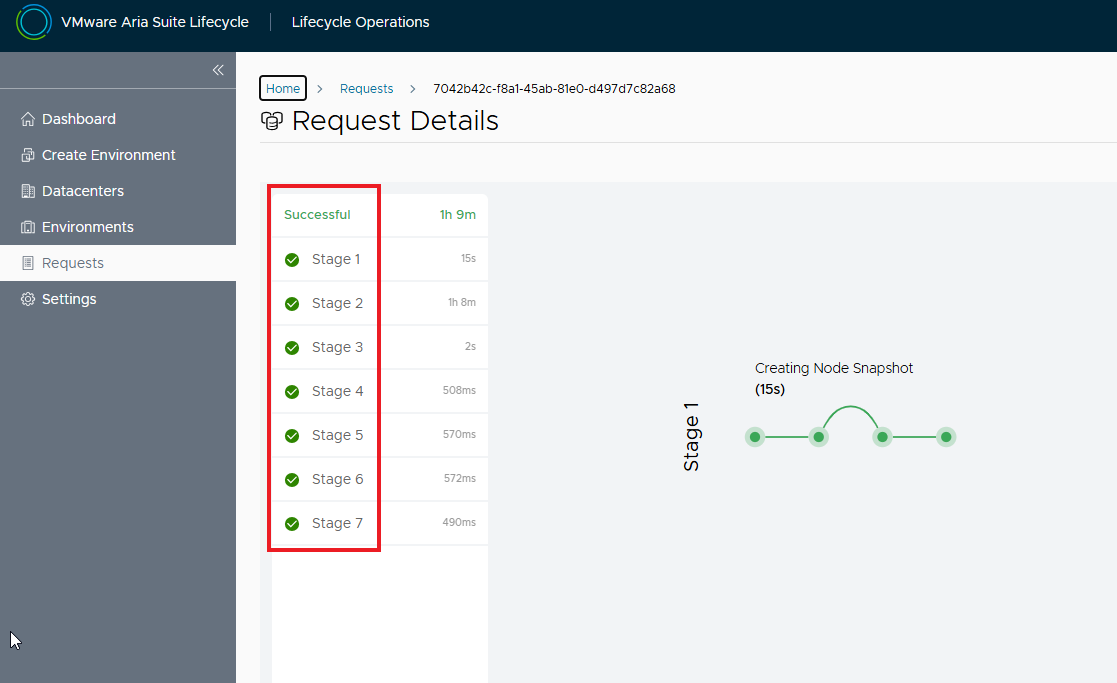

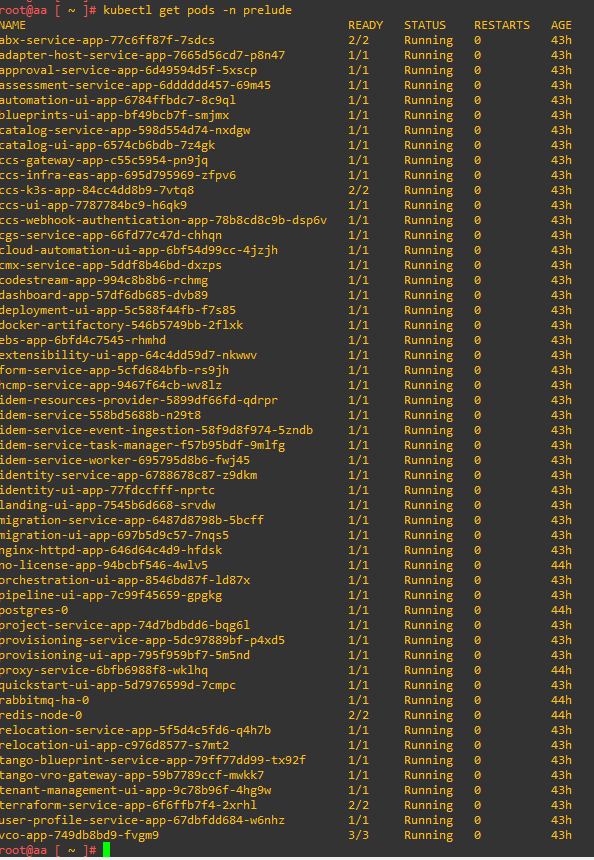

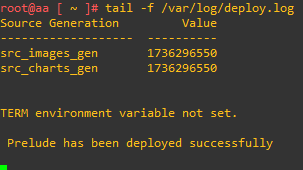

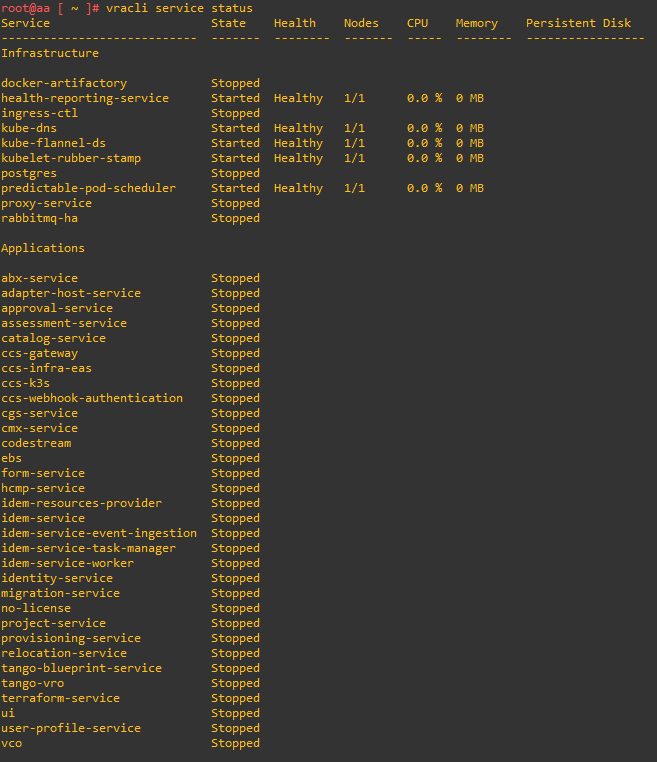

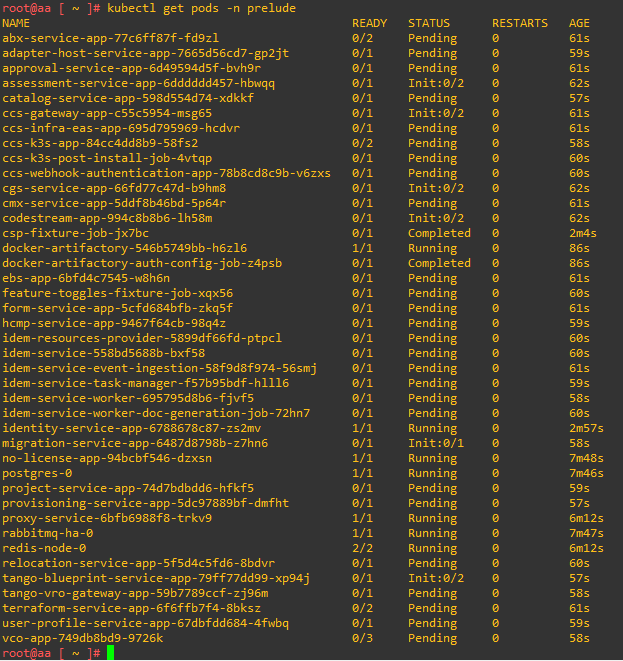

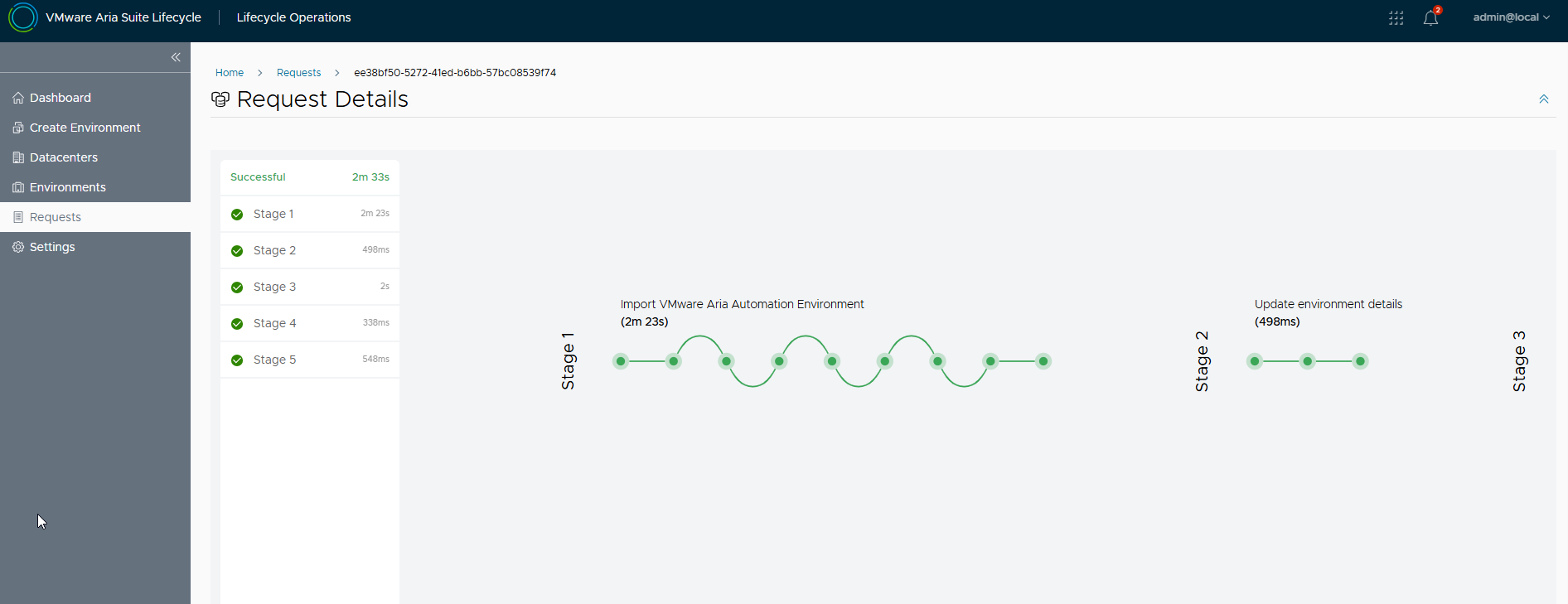

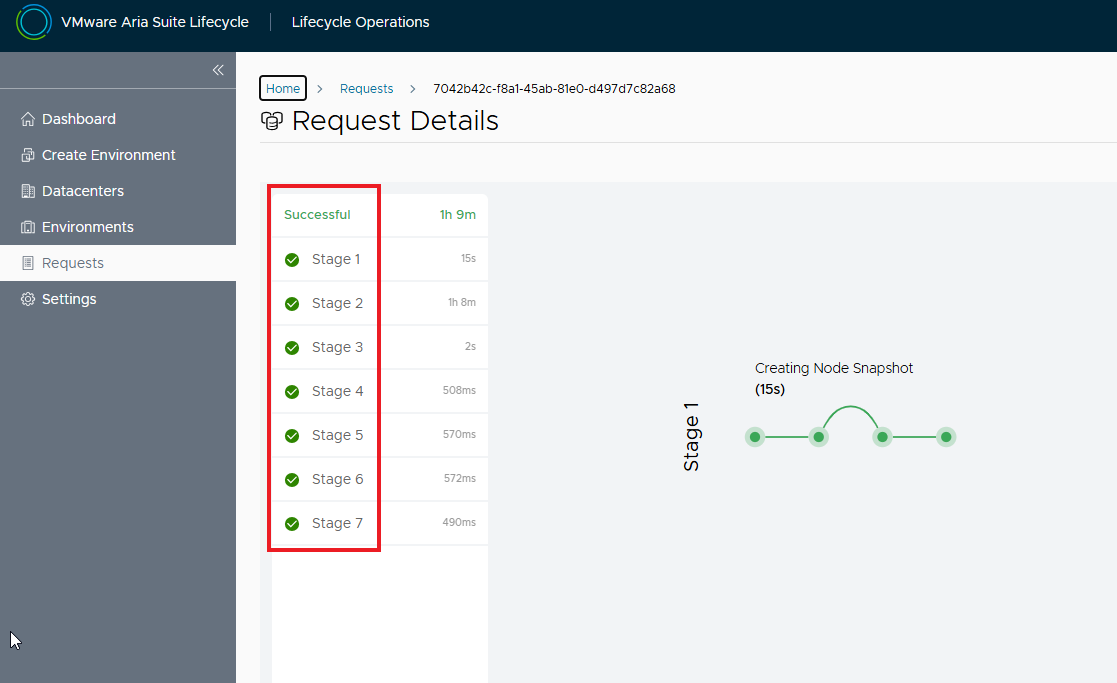

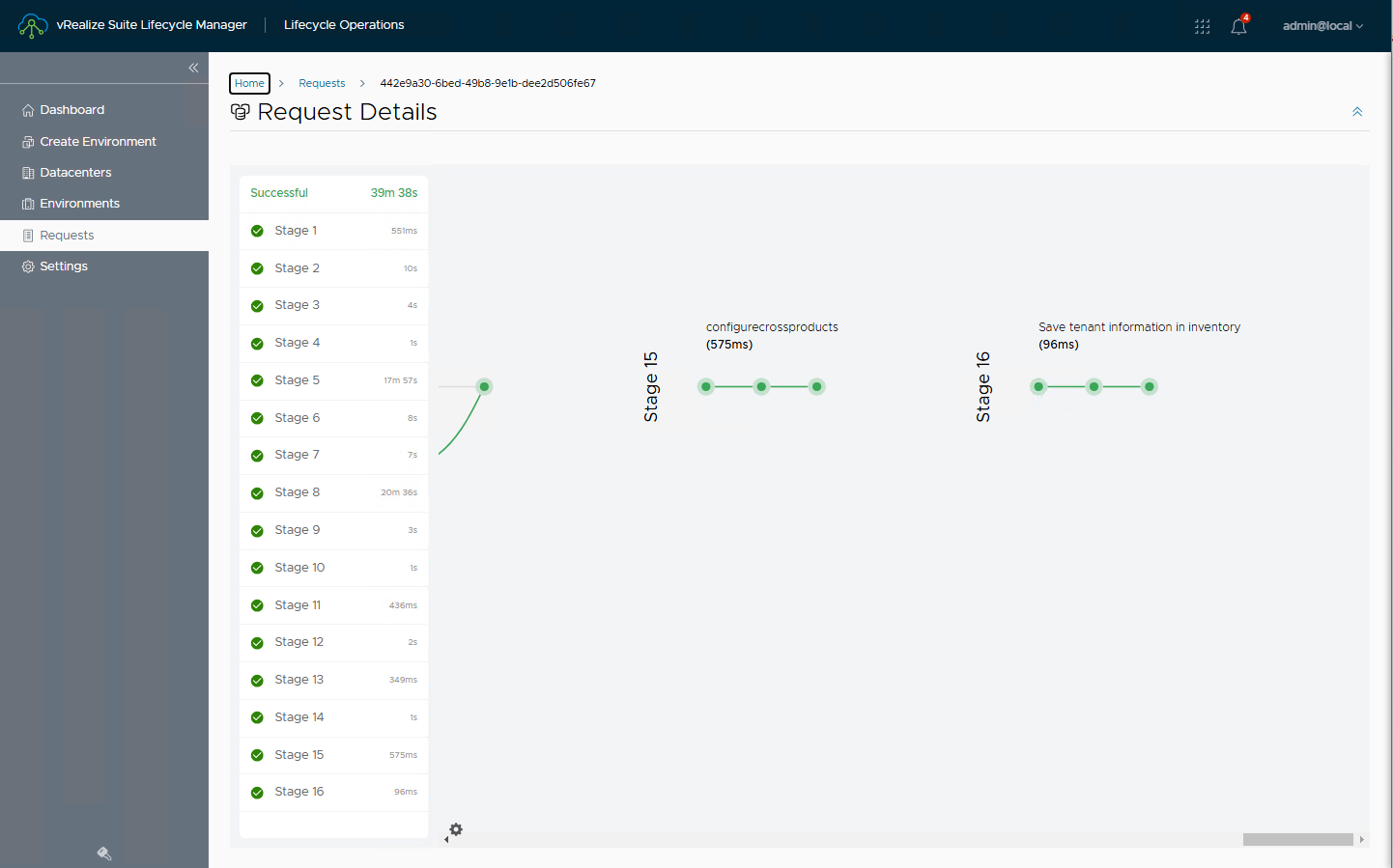

Wait for the upgrade process to complete successfully.

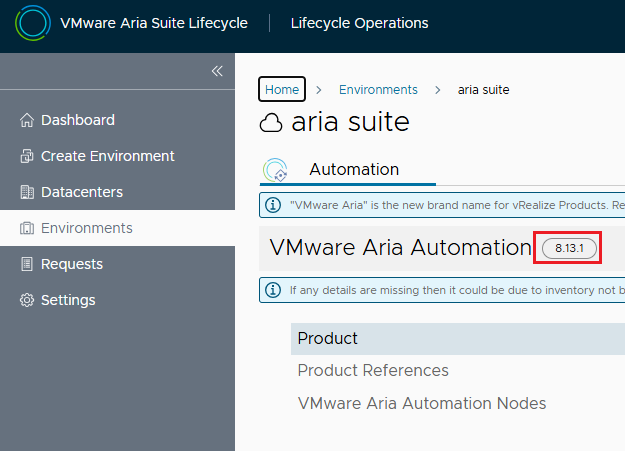

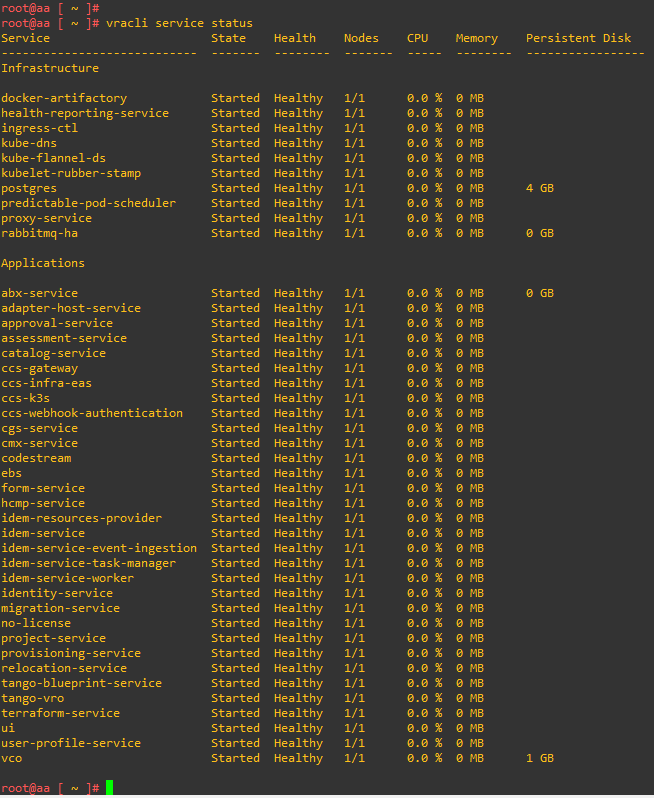

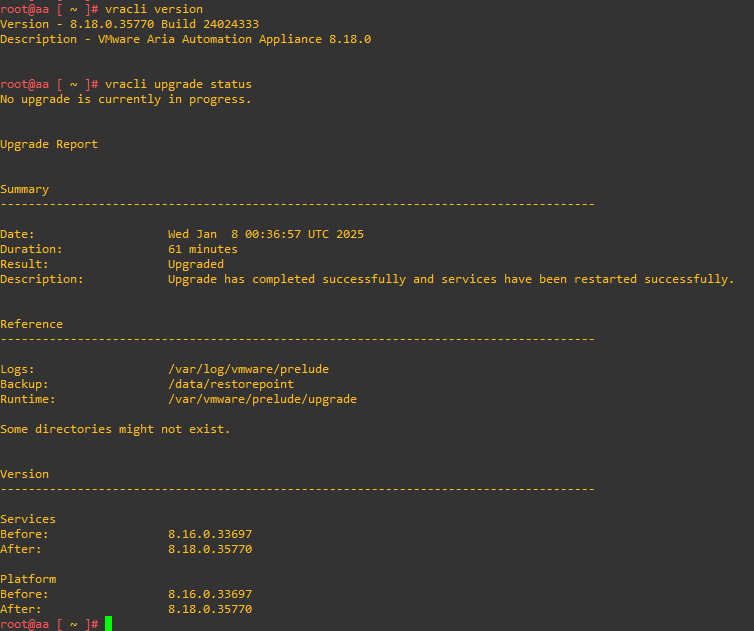

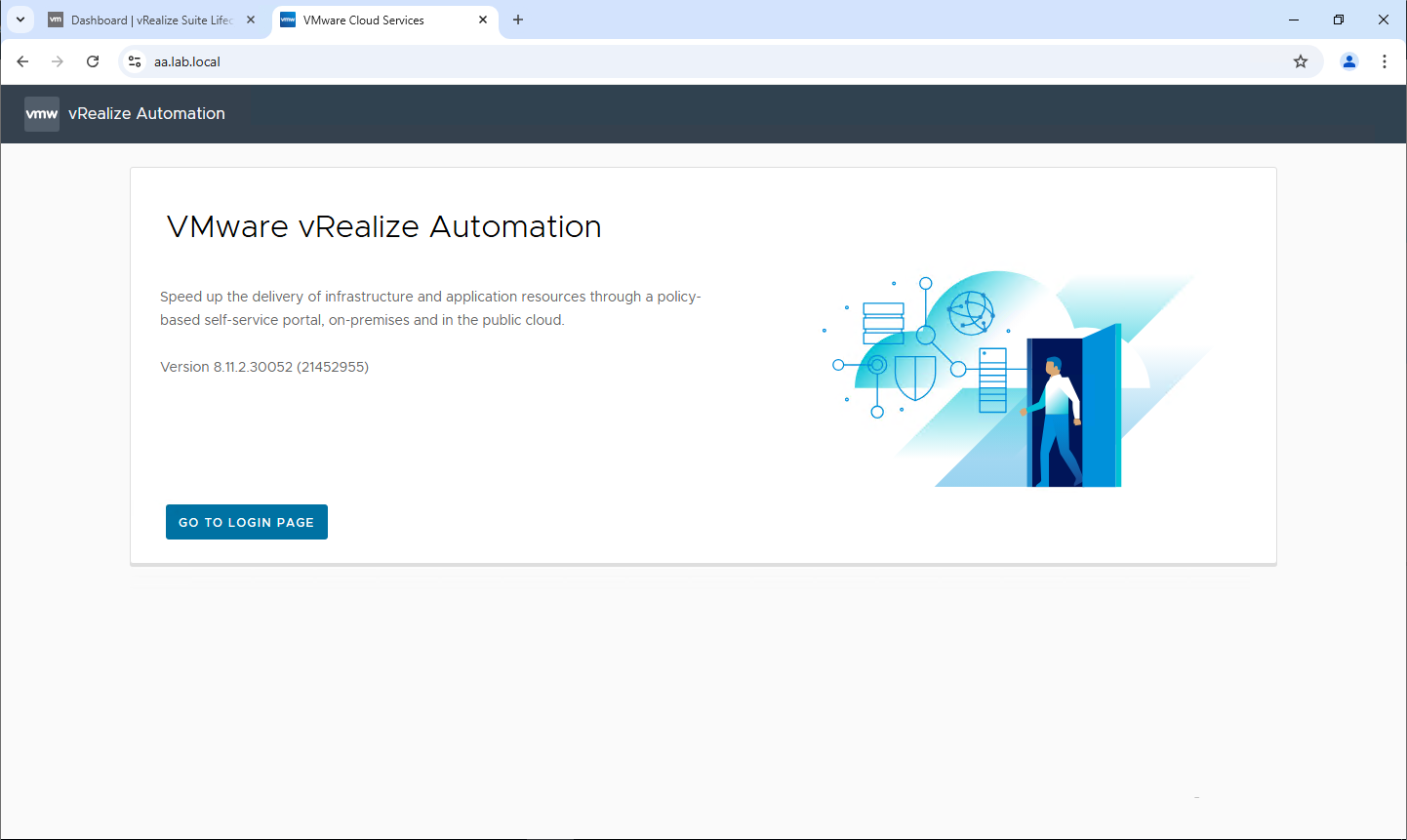

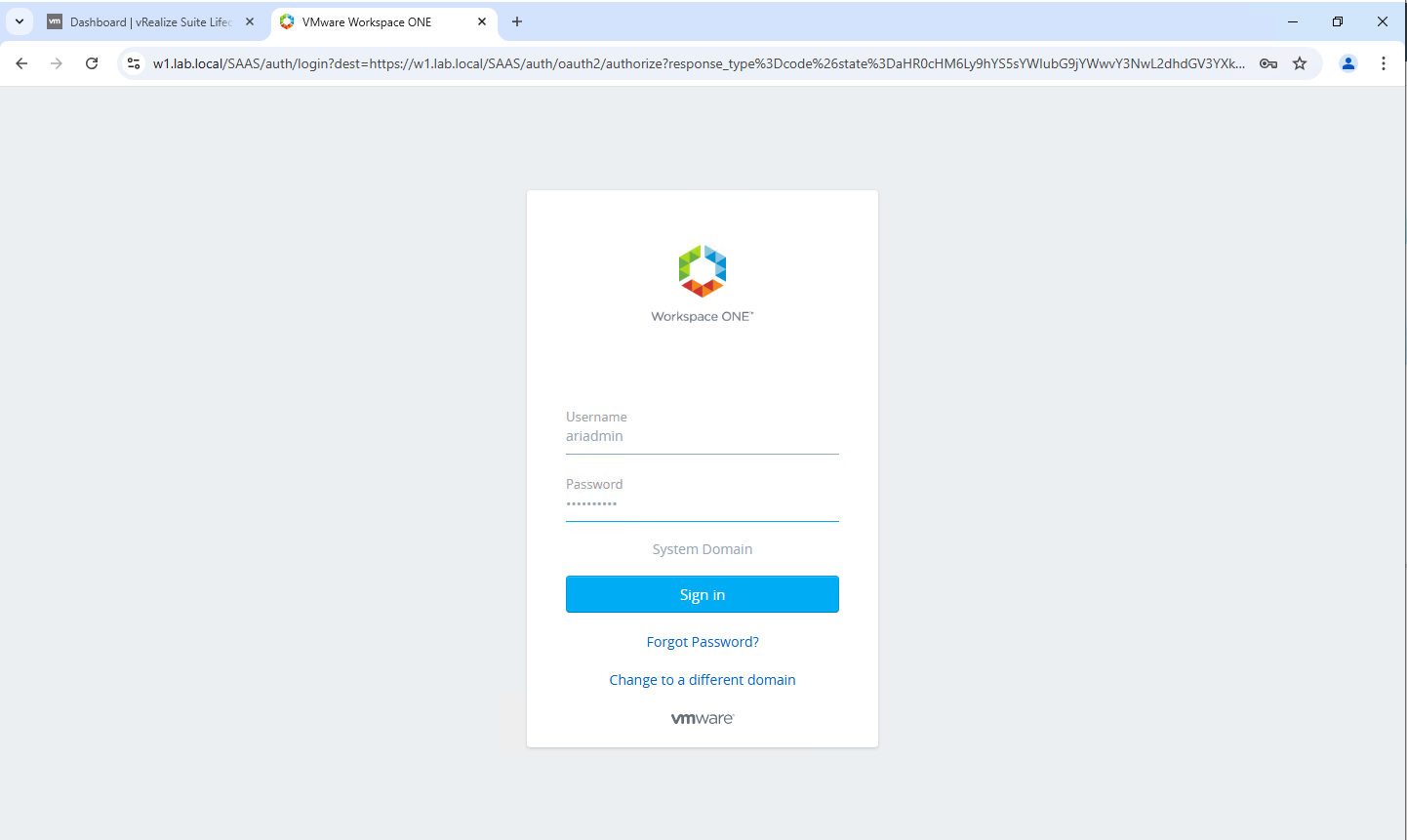

The whole process took about 1 hour; you can monitor the status of the services directly from the Aria Automation console. At the end of the upgrade our environment will be updated 🙂

At this point we have completed the first 2 steps of the upgrade path. The remaining steps can be performed in the same way, until LCM and Aria Automation reach release 8.18.

NOTE: the Aria Automation upgrade from release 8.16 to 8.18 requires 54GB of RAM as a prerequisite.
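Since the RAM prerequisite changes along the path, it can be handy to note the figures down and compute how much memory to add before each Automation step. The sketch below only contains the two values reported in this article (48GB for the upgrade to 8.13.1 and 54GB for the upgrade to 8.18); other releases are deliberately left out, and the value you pass as current RAM is whatever your appliance has today. `ram_shortfall_gb` is a name invented for this example.

```python
# RAM prerequisites quoted in this article, in GB, keyed by target release.
REQUIRED_RAM_GB = {"8.13.1": 48, "8.18": 54}


def ram_shortfall_gb(target_release, current_ram_gb):
    """Return how many GB must be added before upgrading (0 if none)."""
    required = REQUIRED_RAM_GB.get(target_release)
    if required is None:
        # No figure recorded here: check the hardware requirements link instead.
        raise KeyError(f"no RAM figure recorded for release {target_release}")
    return max(0, required - current_ram_gb)
```

For a release that is not in the dictionary the function raises an error instead of guessing, so the pre-check link in the upgrade wizard stays the source of truth.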

These are the files to download to perform all the upgrades:

Prelude_VA-8.13.1.32340-22360938-updaterepo.iso

Prelude_VA-8.16.0.33697-23103949-updaterepo.iso

Prelude_VA-8.18.0.35770-24024333-updaterepo.iso

VMware-Aria-Suite-Lifecycle-Appliance-8.12.0.7-21628952-updaterepo.iso

VMware-Aria-Suite-Lifecycle-Appliance-8.14.0.4-22630472-updaterepo.iso

VMware-Aria-Suite-Lifecycle-Appliance-8.16.0.4-23377566-updaterepo.iso
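To keep track of which file belongs to which step, the file names can be parsed with a small regular expression. This is a sketch based only on the naming pattern visible in the list above; reading the last numeric field as a build number is my assumption, and `parse_iso_name` is a hypothetical helper.

```python
import re

# Pattern inferred from the file names listed above:
# <product>-<dotted version>-<number>-updaterepo.iso
ISO_PATTERN = re.compile(
    r"^(?P<product>.+)-(?P<version>\d+(?:\.\d+)+)-(?P<build>\d+)-updaterepo\.iso$"
)

ISO_FILES = [
    "Prelude_VA-8.13.1.32340-22360938-updaterepo.iso",
    "Prelude_VA-8.16.0.33697-23103949-updaterepo.iso",
    "Prelude_VA-8.18.0.35770-24024333-updaterepo.iso",
    "VMware-Aria-Suite-Lifecycle-Appliance-8.12.0.7-21628952-updaterepo.iso",
    "VMware-Aria-Suite-Lifecycle-Appliance-8.14.0.4-22630472-updaterepo.iso",
    "VMware-Aria-Suite-Lifecycle-Appliance-8.16.0.4-23377566-updaterepo.iso",
]


def parse_iso_name(filename):
    """Split an update ISO name into (product, version, build) strings."""
    match = ISO_PATTERN.match(filename)
    if match is None:
        raise ValueError(f"unexpected ISO name: {filename}")
    return match.group("product"), match.group("version"), match.group("build")


if __name__ == "__main__":
    for name in ISO_FILES:
        print(parse_iso_name(name))
```

Files whose names do not follow the pattern raise a `ValueError`, which is a cheap way to spot a wrong or incomplete download before mapping it in LCM.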

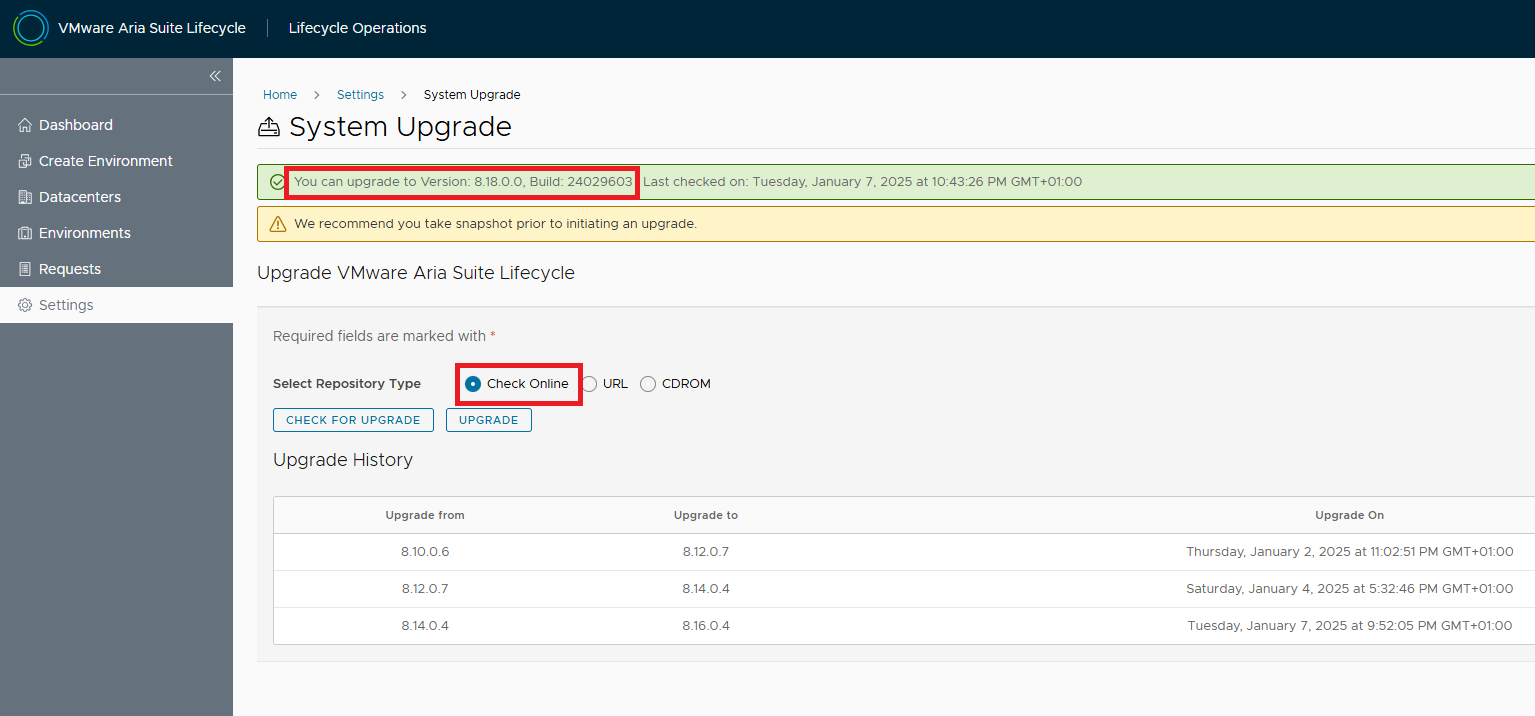

NOTE: The upgrade to LCM 8.18 can be done directly from the online repository 🙂

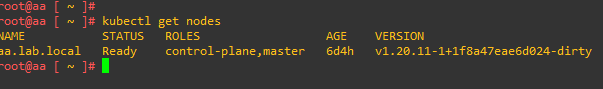

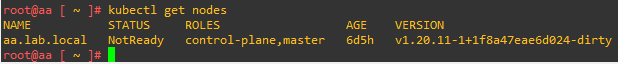

In the next article I will explain how to solve some problems encountered during the upgrade and how to monitor the status of the Aria Automation services via CLI.

The VMware Product Interoperability Matrix is available at this